
Author: dayoungc@andrew.cmu.edu

dayoungc- Project-03- Dynamic Drawing

//Dayoung Chung

//SECTION E

//dayoungc@andrew.cmu.edu

//Project-03

function setup() {

createCanvas(640, 480);

//background(0);

}

function draw() {

/*

stroke(255);

strokeWeight(3);

for (var i = 0; i < 20; i++) {

for (var j = 0; j < 20; j++) {

line(i*30+i, j*30+j, i*30-i, j*30-j);

}

}

*/

background(0);

//i: x j:y

for(var i= 0; i<20; i++) {

for (var j= 0; j<20; j++) {

stroke((255 - (i+j)*5)*mouseX/100, (255 - (i+j)*5)*mouseX/400, (255 - (i+j)*5)*mouseX/600);

//stroke(202,192,253);

strokeWeight(6 * mouseY/600);

line(i*30 + (30*mouseX/600), j*30+(30*mouseY/600), i*30+30-(30*mouseX/600), j*30+30-(30*mouseY/600));

}

}

}

I drew a large grid of lines to make a pattern of my own. Move your mouse around to adjust the color and shape of the lines. Moving the mouse down makes the lines thicker, and moving it right makes them brighter; moving it up or left thins and darkens them until they disappear. In the future, I would like to learn more about mapping and angles so that I can make more complicated patterns.
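Since mapping came up as a next step, here is a minimal sketch of the idea in plain JavaScript. It reproduces the arithmetic of the inner loop above (a 30-pixel cell and a mouse range of 0 to 600) but routes it through a small range-mapping helper. The names `mapClamped` and `cellLine` are hypothetical and are not part of the original sketch; p5.js offers its own `map()` and `constrain()` functions for the same purpose. Unlike the original code, this version clamps the result, which is an added assumption.

```javascript
// Hypothetical helper (not in the original sketch): linearly re-map a value
// from [inMin, inMax] to [outMin, outMax], clamping it to the target range.
function mapClamped(value, inMin, inMax, outMin, outMax) {
  const t = (value - inMin) / (inMax - inMin);
  const clampedT = Math.min(1, Math.max(0, t));
  return outMin + clampedT * (outMax - outMin);
}

// Endpoints and stroke weight for grid cell (i, j), following the same
// formulas as the sketch: cellSize = 30 and range = 600 come from the code above.
function cellLine(i, j, mouseX, mouseY, cellSize = 30, range = 600) {
  const dx = mapClamped(mouseX, 0, range, 0, cellSize);
  const dy = mapClamped(mouseY, 0, range, 0, cellSize);
  return {
    x1: i * cellSize + dx,
    y1: j * cellSize + dy,
    x2: (i + 1) * cellSize - dx,
    y2: (j + 1) * cellSize - dy,
    weight: mapClamped(mouseY, 0, range, 0, 6),
  };
}

// With the mouse at (300, 300), both endpoints meet at the cell center.
console.log(cellLine(0, 0, 300, 300));
```

Inside `draw()`, the returned values could be passed to `strokeWeight()` and `line()`. The clamping keeps the lines from flipping direction if the mouse moves past 600 pixels.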

dayoungc- Looking Outwards -03

“Making Information Beautiful”

This week, I was inspired by the work of David Wicks <sansumbrella.com>, who explores data visualization. I was struck specifically by his project “Drawing Water” <http://sansumbrella.com/works/2011/drawing-water/>, first because of its beauty and second because of how effective it is in helping people experience information in a new way.

http://sansumbrella.com/works/2011/drawing-water/winter2011.jpg

“A representation of rainfall vs. water consumption in Winter 2001.”

In the print representation above, lines going from blue to black represent the general direction of rainfall toward where it is consumed. Although Wicks cautions that the pathways themselves are imagined, he uses real data: water consumption data from the United States Geological Survey and rainfall data from the NOAA National Weather Service. He feeds this information into code that visualizes rain sources moving toward areas of water consumption.

<iframe src="https://player.vimeo.com/video/24157130" width="640" height="360" frameborder="0" webkitallowfullscreen mozallowfullscreen allowfullscreen></iframe>

“A video showing a dynamic and interactive representation of the Drawing Water project.”

This is fascinating because it lets viewers see and understand data that would otherwise be mere numbers on a page, properly understood only by scholars. Wicks makes that information comprehensible to ordinary people through a printed image generated by computation.

dayoungc-LookingOutwards-02

Combining Concepts and Computations into Reality

In my exploration of generative artists, I stopped at Michael Hansmeyer’s <www.michael-hansmeyer.com> work, mesmerized. Active from 2003 to the present, Hansmeyer uses existing architectural or natural forms but modifies them, sometimes drastically, or explores their boundaries using what he calls “computational architecture.”

http://www.michael-hansmeyer.com/images/reade_street/reade_street3.jpg

“Seemingly a normal building renovation.”

One of his more recent projects, “Reade Street” (2016), is a prime example of this combination of existing concepts and generated computations. At first glance, the building is a typical classical building in Manhattan. On closer inspection of the columns, however, one can see that the architectural design has been computer generated from a combination of existing forms.

http://www.michael-hansmeyer.com/images/reade_street/reade_street5.jpg

“But combining classical architecture and computation to create something new.”

This is fascinating because, through computation, Hansmeyer is able to explore beyond the boundaries of ancient through modern architecture while still retaining all of the history involved in the creation of these designs. It is an eerie blend of past, present, and future that may itself become established in architectural history.

http://www.michael-hansmeyer.com/images/inhotim/inhotim2.jpg

“Hansmeyer even combines nature and computation to create an eerie blend.”

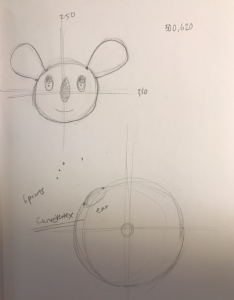

dayoungc-Project-02-Variable-Face

//Dayoung Chung

//Section E

//dayoungc@andrew.cmu.edu

//Project-02

var eyeSize = 30;

var faceWidth = 200;

var faceHeight = 180;

var noseSize = 15;

var mouthSize = 30;

var background1 = (194);

var background2 = (94);

var background3 = (150);

var nose = 200;

var earSize = 200;

function setup() {

createCanvas(500, 620);

}

function draw() {

background(background1, background2, background3);

background(mouseX,mouseX,mouseY,mouseY);

ellipse(mouseX, mouseY,0,0);

//face

noStroke();

fill(107,104,100);

ellipse(width /2 , height /2 +30, faceWidth*1.2, faceHeight*1.1);

var eyeLX = width / 2 - faceWidth * 0.3;

var eyeRX = width / 2 + faceWidth * 0.3;

//eyes

fill(238,232,219);

ellipse(eyeLX, height / 2, eyeSize, eyeSize+10);

ellipse(eyeRX, height / 2, eyeSize, eyeSize+10);

//pupil

fill(64, 60, 58);

ellipse(eyeLX, height/2-5.5, eyeSize-3 , eyeSize-2);

ellipse(eyeRX, height/2-5.5, eyeSize-3, eyeSize-2);

//mouth

stroke(0);

strokeWeight(3);

noFill();

arc(width/2 ,height/2+80 ,mouthSize+10,mouthSize-10,0,PI);

//nose

noStroke();

fill(0);

ellipse(width/2,height/2+10,nose/7,nose/3);

//nostrils

noStroke();

fill(255);

ellipse(width/2-6,height/2+35,noseSize-10,4);

ellipse(width/2+6,height/2+35,noseSize-10,4);

//body

noStroke();

fill(107,104,100);

ellipse(width/2, height*3/4+100,180,300);

fill(238,232,219);

ellipse(width/2, height*3/4+150,100,300);

//ear

beginShape();

fill(107,104,100);

curveVertex(180, 290);

curveVertex(180,290);

curveVertex(150, 160);

curveVertex(125, 140);

curveVertex(100, 240);

curveVertex(140, 280);

curveVertex(160,290);

curveVertex(160,290);

endShape();

//ear2

beginShape();

fill(107,104,100);

curveVertex(310, 290);

curveVertex(310, 290);

curveVertex(340, 160);

curveVertex(365, 140);

curveVertex(390, 240);

curveVertex(360, 280);

curveVertex(340,290);

curveVertex(340,290);

endShape();

}

function mousePressed() {

// when the user clicks, these variables are reassigned

faceWidth = random(140, 200);

faceHeight = random(150, 180);

eyeSize = random(14, 40);

nose = random(120,300);

mouthSize = random(10,30);

background1 = random(0, 255);

background2 = random(0, 255);

background3 = random(0, 255);

}

For this project, I made a character based on my favorite animal, the koala. I sketched it first before writing any p5.js code. In the end, I decided to use “curveVertex” to form the ears and “arc” for the lips. I had fun learning how to use variables and why they are used.
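As a small illustration of why variables help here, the sketch below collects the ranges from `mousePressed()` into one table and draws a fresh set of face parameters from it. This is only one possible arrangement, written in plain JavaScript rather than p5.js. The names `RANGES`, `randomBetween`, and `randomFace` are hypothetical, and `Math.random()` stands in for p5's `random()`.

```javascript
// Hypothetical helper: a random number between two bounds, given in either order.
function randomBetween(a, b) {
  const lo = Math.min(a, b);
  const hi = Math.max(a, b);
  return lo + Math.random() * (hi - lo);
}

// Intervals taken from mousePressed() above. Grouping them in one object is
// an assumption of this example, not how the project itself is organized.
const RANGES = {
  faceWidth: [140, 200],
  faceHeight: [150, 180],
  eyeSize: [14, 40],
  nose: [120, 300],
  mouthSize: [10, 30],
};

// Build a new set of face parameters, one random value per named range.
function randomFace() {
  const face = {};
  for (const [name, [min, max]] of Object.entries(RANGES)) {
    face[name] = randomBetween(min, max);
  }
  return face;
}

console.log(randomFace());
```

Because every drawing call reads its size from a named value, changing one entry in the table changes the face everywhere that value is used.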

dayoungc- LookingOutwards01

Looking Outwards #1: Volume (Interactive cube of responsive mirrors that redirects light and sound)

This interactive cube of responsive mirrors was made by Softlab, a New York-based art and architecture collective. I was fascinated by how this project brings sound and light together in the same place, and especially by how sound and light are used as its building elements. The piece is a study in visualizing the extremely small particles that compose the air. The human eye cannot see them, but the use of sound and light makes them perceptible.

The responsive mirrors are controlled by a specific set of computer commands, which does not differ very much from the ordinary software we use in our daily lives. The lights are LED bulbs, a major source of light in modern buildings. This project opens the door to many visual opportunities for making the “invisible” and the abstract tangible.

Below is a link to the website: http://www.creativeapplications.net/processing/volume-interactive-cube-of-responsive-mirrors-that-redirects-light-and-sound/

dayoungc – Project01

//Dayoung Chung

//Section E

//dayoungc@andrew.cmu.edu

//Project-01

function setup() {

createCanvas (600,600);

}

function draw(){

background(29,113,71);

strokeWeight(5);

fill(211,159,38);

ellipse(300,300,500,500);

//hair

strokeWeight(0);

fill(61,40,24);

ellipse(299,230,303,280);

rect(148,240,302,200);

//head

strokeWeight(0);

fill(252,226,215);

ellipse(300,320,270,330);

//eyes

strokeWeight(0);

fill(255);

ellipse(240,302,40,40);

ellipse(350,302,40,40);

//pupils

strokeWeight(0);

fill(0);

ellipse(240,302,20,20);

ellipse(350,302,20,20);

//nose

noStroke();

fill(241,165,157);

triangle(280,355,295,310,315,355);

//cheeks

strokeWeight(0);

fill(253,112,141);

ellipse(220,355,66,66);

ellipse(372,355,66,66);

//mouth

stroke(199,42,37);

strokeWeight(10);

noFill();

arc(300,400, 100, 50, 50, 15);

// left ear

strokeWeight(0);

fill(252,226,215);

ellipse(160,320,25,39);

// right ear

strokeWeight(0);

fill(252,226,215);

ellipse(440,320,25,39);

//eyebrow left/right

noFill();

stroke(61,40,24);

strokeWeight(8);

arc(236, 250, 30, 1, PI, 0);

arc(350, 250, 30, 1, PI, 0);

//left glasses

strokeWeight(5);

fill(255, 255, 3, 30);

ellipse(240,302,65,65);

//right

strokeWeight(5);

fill(255, 255, 3, 30);

ellipse(350,302,65,65);

//line(glasses)

strokeWeight(0);

fill(0);

rect(270,300,49,7);

}

For my self-portrait, I wanted to create a very simple and bold character. I started with the head and built out from there. Before working in p5.js, I sketched my character in Illustrator to make the process faster and simpler. I really had fun choosing the color palette to make it more balanced. It was also fascinating to understand graphical primitives and use them in my own piece.

![[OLD FALL 2017] 15-104 • Introduction to Computing for Creative Practice](../../wp-content/uploads/2020/08/stop-banner.png)