hyt-02-Minions

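// Minion dimensions in pixels; mousePressed() re-randomizes them.
var eyeSize = 50;
var mouthSize = 30;
var bodyWidth = 140;
var bodyHeight = 130;
var eyeballSize = 40;
var mouthAngle = 100; // sweep of the mouth arc, in degrees
var x = 0;            // red channel of the background color

function setup() {
  createCanvas(480, 640);
  angleMode(DEGREES); // arc(), rotate() and atan2() all work in degrees
}

function draw() {
  background(x, 194, 239);
  stroke(0);
  strokeWeight(4.5);

  // Head (top half-ellipse) and body (rectangle) in minion yellow
  fill(255, 221, 119);
  arc(width / 2, height / 2, bodyWidth, bodyHeight, 180, 360, OPEN);
  rect(width / 2 - bodyWidth / 2, height / 2, bodyWidth, bodyHeight);

  // Overalls: bottom half-ellipse below the body
  fill(68, 100, 133);
  arc(width / 2, height / 2 + bodyHeight, bodyWidth, bodyHeight - 30, 0, 180, OPEN);

  // Eye whites, placed symmetrically about the vertical center line
  var eyeLX = width / 2 - bodyWidth * 0.23;
  var eyeRX = width / 2 + bodyWidth * 0.23;
  fill(255); // white (255 is the maximum channel value)
  ellipse(eyeLX, height / 2, eyeSize, eyeSize);
  ellipse(eyeRX, height / 2, eyeSize, eyeSize);

  // Pupils, drawn without an outline
  fill(60, 40, 40);
  noStroke();
  ellipse(eyeLX, height / 2, eyeballSize, eyeballSize);
  ellipse(eyeRX, height / 2, eyeballSize, eyeballSize);

  // Mouth: a filled wedge whose opening angle is mouthAngle
  fill(0);
  arc(width / 2, height / 2 + bodyHeight - 50, mouthSize, mouthSize, 0, mouthAngle);

  // Angle from the canvas center to the mouse, used to aim both hands
  var a = atan2(mouseY - height / 2, mouseX - width / 2);

  // Left hand: move the origin (pivot) to the bottom-left corner of the body
  fill(255, 221, 119);
  translate(width / 2 - bodyWidth / 2, height / 2 + bodyHeight);
  push();
  rotate(a);
  rect(0, -bodyHeight / 12, bodyHeight / 3, bodyHeight / 6);
  fill(0);
  ellipse(bodyHeight / 3, 0, bodyHeight / 5, bodyHeight / 5);
  pop(); // restores the yellow fill and the unrotated origin

  // Right hand: shift the pivot to the bottom-right corner of the body
  translate(bodyWidth, 0);
  push();
  rotate(a);
  rect(0, -bodyHeight / 12, bodyHeight / 3, bodyHeight / 6);
  fill(0);
  ellipse(bodyHeight / 3, 0, bodyHeight / 5, bodyHeight / 5);
  pop();
}

function mousePressed() {
  // Each click picks new proportions, a new mouth and a new background tint
  bodyWidth = random(100, 180);
  bodyHeight = random(90, 180);
  eyeSize = random(30, 50);
  eyeballSize = eyeSize - 12; // pupils stay 12 px smaller than the eye whites
  mouthSize = eyeSize;        // mouth is as wide as one eye
  mouthAngle = random(10, 220);
  x = random(81, 225);
}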
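The click handler ties the face together. The pupils are always 12 pixels smaller than the eye whites, and the mouth is exactly as wide as one eye, so the features stay in proportion whatever sizes are picked. As an illustration, the sketch below restates `mousePressed()` as a plain-JavaScript function that returns the new values instead of assigning globals. It is a minimal sketch, not the program itself: `randomRange` and `randomMinion` are hypothetical names, and `Math.random()` stands in for p5's `random(min, max)`.

```javascript
// Hypothetical stand-in for p5's random(min, max):
// a random float between min and max.
function randomRange(min, max) {
  return min + Math.random() * (max - min);
}

// Hypothetical helper mirroring mousePressed(): returns one random set of
// minion proportions instead of writing to global variables.
function randomMinion() {
  const eyeSize = randomRange(30, 50);
  return {
    bodyWidth: randomRange(100, 180),
    bodyHeight: randomRange(90, 180),
    eyeSize: eyeSize,
    eyeballSize: eyeSize - 12,        // pupil stays 12 px smaller than the eye
    mouthSize: eyeSize,               // mouth is as wide as one eye
    mouthAngle: randomRange(10, 220), // degrees, since angleMode(DEGREES)
    x: randomRange(81, 225),          // red channel of the background color
  };
}

// Usage: print a few random minions.
for (let i = 0; i < 3; i++) {
  console.log(randomMinion());
}
```

Returning an object rather than mutating globals makes the coupling between `eyeSize`, `eyeballSize` and `mouthSize` easy to check in isolation.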

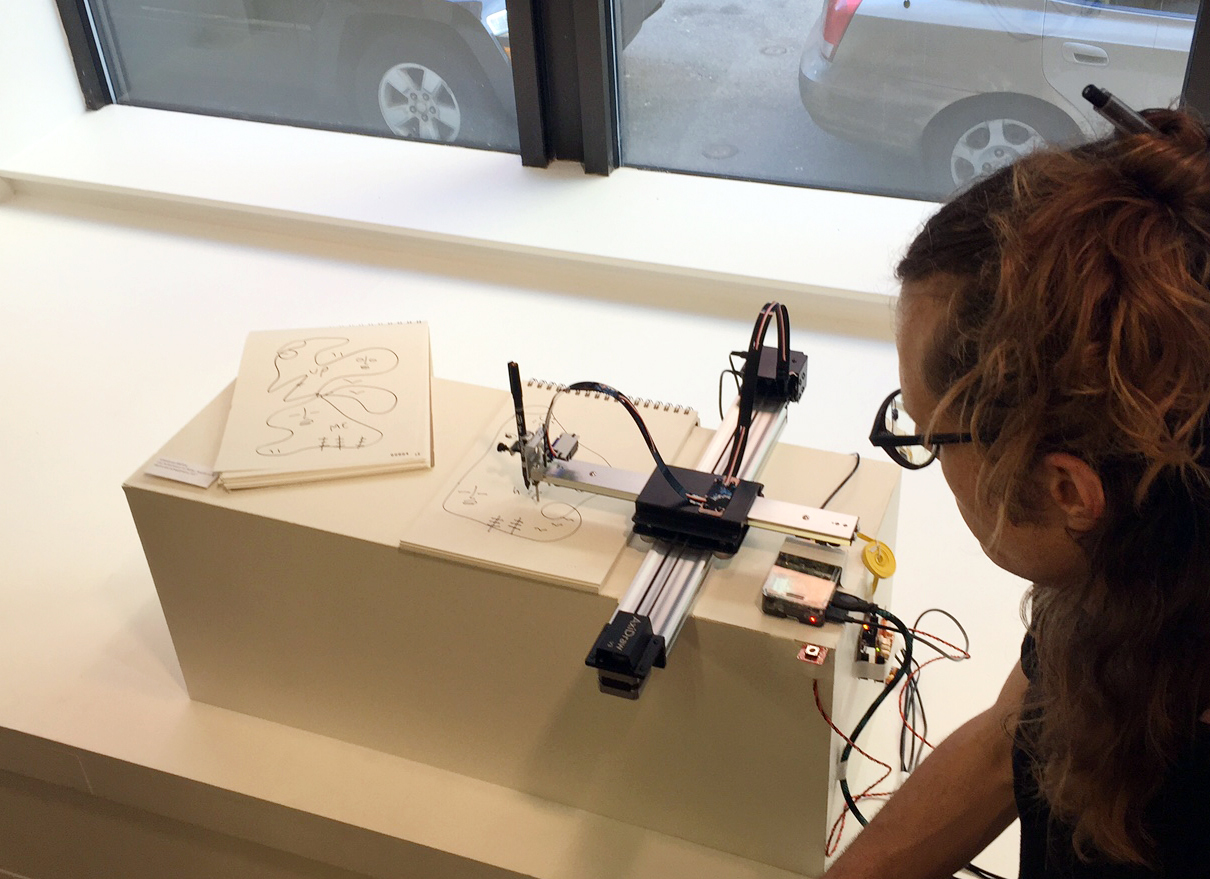

Now that we have entered week 2, I have begun to explore many more functions and attributes in p5.js, and based on those I decided to make a minion with animation-like features: its hands follow the mouse, and each click gives it new proportions, a new mouth and a new background color. I didn't plan the sketch out in Illustrator beforehand; instead, I built it from primitive shapes like ellipses, arcs and rectangles through trial and error. One of the more difficult parts was making the hands rotate as the mouse moves around the canvas, but once I figured out the coordinates of each hand's pivot (its center of rotation), it became much easier. Overall, I really enjoyed creating this project!
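Here is the geometry behind the hands, restated as plain JavaScript that runs outside p5.js. In the program, `translate()` moves the origin to a bottom corner of the body, which becomes the pivot, and `rotate(a)` then turns the hand around that point; the black circle at the end of each hand sits at `(bodyHeight / 3, 0)` in the rotated frame. This is a minimal sketch, assuming `angleMode(DEGREES)` as in the program and p5's y-down canvas. `handAngleDeg` and `handTip` are hypothetical helper names, and `Math.atan2` (converted to degrees) stands in for p5's `atan2()`, which returns degrees in that angle mode.

```javascript
// Angle in degrees from the canvas center (cx, cy) to the mouse,
// matching p5's atan2() when angleMode(DEGREES) is set.
function handAngleDeg(mouseX, mouseY, cx, cy) {
  return Math.atan2(mouseY - cy, mouseX - cx) * 180 / Math.PI;
}

// Where the point (length, 0) lands on the canvas after
// translate(pivotX, pivotY) followed by rotate(angleDeg).
function handTip(pivotX, pivotY, length, angleDeg) {
  const r = angleDeg * Math.PI / 180;
  return {
    x: pivotX + length * Math.cos(r),
    y: pivotY + length * Math.sin(r), // y grows downward, as on a p5 canvas
  };
}

// Usage with the default 480 x 640 canvas and starting body size.
const width = 480, height = 640, bodyWidth = 140, bodyHeight = 130;
const a = handAngleDeg(400, 500, width / 2, height / 2); // example mouse position
const leftPivot = { x: width / 2 - bodyWidth / 2, y: height / 2 + bodyHeight };
const rightPivot = { x: leftPivot.x + bodyWidth, y: leftPivot.y };
console.log(handTip(leftPivot.x, leftPivot.y, bodyHeight / 3, a));
console.log(handTip(rightPivot.x, rightPivot.y, bodyHeight / 3, a));
```

Because `a` is measured from the center of the canvas rather than from each pivot, both hands always point in the same direction and stay parallel.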

![[OLD FALL 2017] 15-104 • Introduction to Computing for Creative Practice](../../../../wp-content/uploads/2020/08/stop-banner.png)