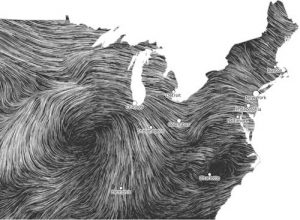

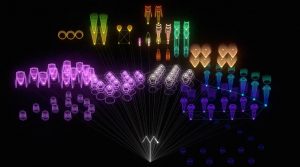

For this week's post, I chose to compare *The Creatures of Prometheus* and *Atlas*, two generative videos that pair audio and visual experiences. *The Creatures of Prometheus*, created by Simon Russell, is a visualization of Beethoven's ballet. The animation ties the graphics directly to the music so that they react to it: pitch and amplitude drive the height and speed of the graphics, volume affects color, and so on.
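The post doesn't say how Russell built the piece, so the following is only a minimal sketch of the general idea of mapping audio features to visual properties, not his actual method. The function names (`map_range`, `rms`, `estimate_pitch`, `frame_to_visuals`), the numeric ranges, and which audio feature drives which visual property are all hypothetical choices for illustration. `map_range` does the same kind of linear rescaling as p5.js's `map()`.

```python
import math


def map_range(value, in_lo, in_hi, out_lo, out_hi):
    """Linearly rescale value from [in_lo, in_hi] to [out_lo, out_hi], clamped to the output range."""
    t = (value - in_lo) / (in_hi - in_lo)
    t = max(0.0, min(1.0, t))
    return out_lo + t * (out_hi - out_lo)


def rms(samples):
    """Root-mean-square amplitude of one audio frame (samples assumed to lie in [-1, 1])."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def estimate_pitch(samples, sample_rate):
    """Rough pitch estimate from the zero-crossing rate; only reliable for clean, single tones."""
    crossings = sum(1 for a, b in zip(samples, samples[1:]) if (a < 0) != (b < 0))
    duration = len(samples) / sample_rate
    # A periodic wave crosses zero about twice per cycle.
    return crossings / (2 * duration)


def frame_to_visuals(samples, sample_rate):
    """Hypothetical mapping: pitch -> shape height, loudness -> motion speed and hue."""
    level = rms(samples)
    pitch = estimate_pitch(samples, sample_rate)
    return {
        "height": map_range(pitch, 50, 1000, 10, 400),  # higher pitch -> taller shape
        "speed": map_range(level, 0.0, 0.7, 0.5, 8.0),  # louder -> faster motion
        "hue": map_range(level, 0.0, 0.7, 220, 0),      # louder -> warmer color (blue toward red)
    }


if __name__ == "__main__":
    sr = 44100
    # A 0.1-second, 440 Hz test tone standing in for one frame of music.
    tone = [0.5 * math.sin(2 * math.pi * 440 * n / sr) for n in range(sr // 10)]
    print(frame_to_visuals(tone, sr))
```

Running it on the 440 Hz test tone gives a pitch estimate close to 440 Hz; a quieter copy of the same tone keeps roughly the same height but moves more slowly and shifts toward blue. Zero-crossing counting is only a crude stand-in here; analyzing real music would call for something like an FFT or autocorrelation.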

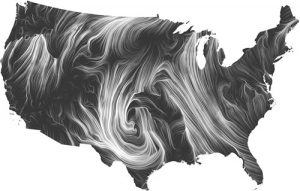

Similarly, *Atlas* is a generative video that combines audio and visuals. Created by Agoston Nagy, *Atlas* is an "anti game environment" that produces music in a conversational cognitive space. The video uses a combination of text, sounds, and graphics ("tasks") that are automatically generated, composed, and carried out ("solved") by machine intelligence without human input. Through it, Agoston questions concepts such as the infinite (ad infinitum), presence, human cognition, and imagination.

Although the two projects produce similar results (both are generative videos combining audio and visuals), the concepts and ideas driving them are very different. *The Creatures of Prometheus* takes a very direct approach: it is an animation programmed so that the graphics react directly to the sound.

*Atlas*, by contrast, takes a more cognitive approach, focusing on generating an environment and space through audio and visuals.

![[OLD FALL 2017] 15-104 • Introduction to Computing for Creative Practice](../../../../wp-content/uploads/2020/08/stop-banner.png)