One interactive exhibit that has inspired me is the Miniature Railroad exhibit at the Carnegie Science Center. What I enjoy most about the model is its combination of the tactile and the technological. While this display is relatively simple compared to others in the museum, the way it combines physical objects with technology to evoke movement and life in its replica of Pennsylvania is exciting to me. Two years ago, the exhibit celebrated its centennial, encouraging visitors to reflect on the advances in model building and interactivity that the museum has achieved. The exhibit operates through a series of buttons and screens, which technicians and craftsmen update alongside the physical model every fall. In this way, the museum preserves the past while keeping the exhibit's technology current.

Category: LookingOutwards-01

LO: My Inspiration

I recently came upon an exhibition piece called “Can’t Help Myself” (2016) by artists Sun Yuan and Peng Yu. It is a robotic arm that performs lifelike, animated movements while constantly wiping a blood-like liquid in the area around it. The artists worked with robotics engineers to repurpose the arm and program its specific movements, writing code for 32 movements that the robot performs. The more the robot wipes the liquid, the messier the space becomes, creating a sense of helplessness. What I admire about the project is how movements can be coded into a robot to give it lifelike characteristics. Although the robot is a machine with no emotions, we as humans project emotions onto it just by observing its movements. I’m curious about human interaction with robots and computers, especially how we form emotional connections with them, which is why this artwork caught my attention. The piece grew out of the artists’ wish to explore how machines can take an artist’s place in performative work, broadening the boundaries of performance art. “Can’t Help Myself” was also the Guggenheim’s first robotic artwork, and it opened new possibilities for combining technology with exhibition art.

LO: My Inspiration

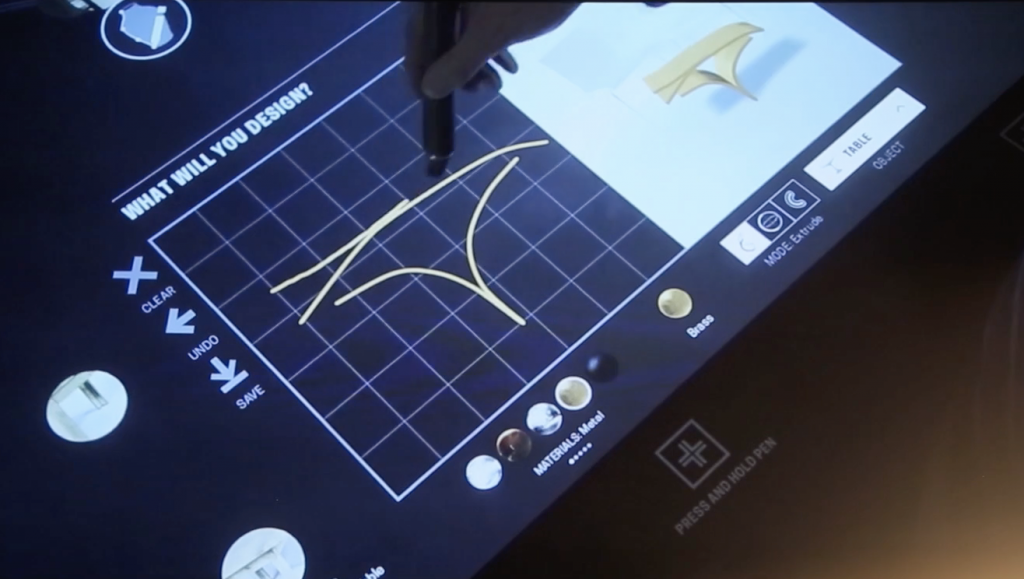

There was an interactive project at the Cooper Hewitt Museum in New York where visitors could draw on a large touch screen, and the software turned their simple lines into a piece of artwork. The drawings themselves were made of very simple lines, but the technology was advanced enough to transform these rough sketches into beautiful artworks. I really admired this project because it appealed to every age group, from children to adults doodling their own designs. I think it’s astounding how the software can turn people’s drawings into something so polished and high tech. People could also save the artwork they made and take it home, and I think a souvenir you made yourself is much more meaningful than something bought at the museum store. Thanks to technology, people take away an experience rather than simply consuming art. This project shows how museums are developing software to make their exhibits more interactive, creating more memorable experiences for visitors.

Video Link (18:35)

LO: My Inspiration

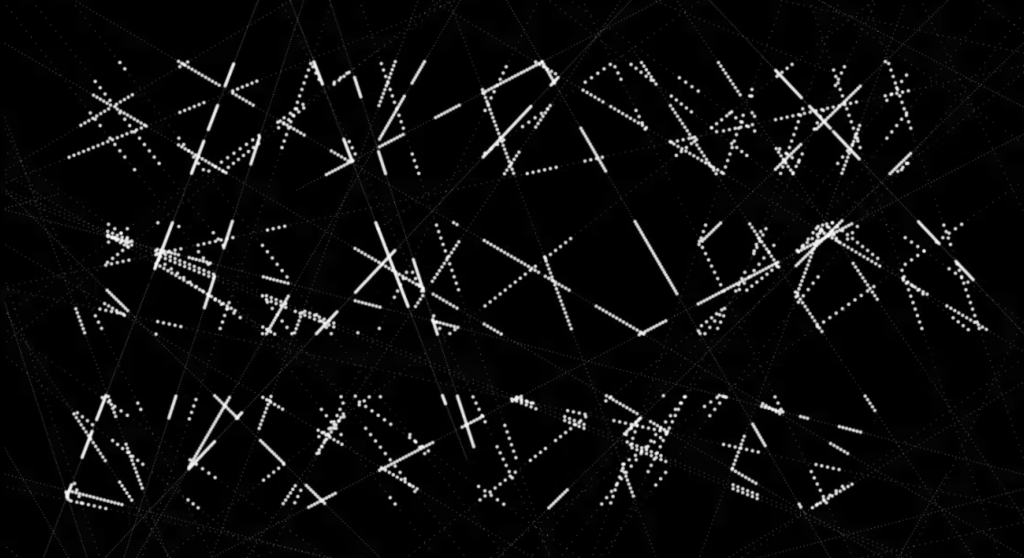

The project created by Wang really intrigues me because it changed the way I view computation and typography. Typographic posters were once drawn by hand, and later new technologies such as the letterpress came into use; I find it really interesting that artists and designers are now beginning to use code to create new and interesting designs. I believe the posters were created using a free graphics library built for the visual arts, so the designer most likely did not have to develop their own software and could instead use a tool designed to help visual artists learn the fundamentals of programming.

LO: My Inspiration

I chose ‘Future Sketches’, displayed at Artechouse in DC by the coding artist Zach Lieberman, for this blog because I really admire how he creates such interactive and immersive experiences using an intimidating medium like code, which is not usually considered an essential tool for these kinds of artistic experiences. The exhibit at Artechouse was designed and executed by Zach Lieberman and his team of artists using the C++ programming language and “openFrameworks,” a C++ toolkit for creative coding that he co-founded. The main idea of ‘Future Sketches’ is that it takes data from human input and manipulates it in real time to produce creative, playful outputs that the audience can further interact with. For example, one piece in the exhibition analyzes visitors’ facial expressions, matches them with other faces in a database, and replaces some features of each face with those of the matched faces. This kind of technology appeals to me because it shows coding in a light that most people are not aware of. The project highlights how creative coding can create futuristic experiences that encourage audience interaction and produce outputs from the audience’s data in real time.

Link to his work ‘Future Sketches’: https://www.artechouse.com/program/future-sketches/

LO: My Inspiration

http://toddbracher.com/work/bodiesinmotion/

Bodies in Motion is a large-scale interactive installation by Studio GreenEyl and Todd Bracher for Humanscale at Milan Design Week 2019. I came across this installation while looking for inspiration for an installation of my own. Motion sensors detect the user’s full-body movement, and fifteen beams of light mark the joints of the body to create an abstract human form. The piece was inspired by the research of psychophysicist Gunnar Johansson, who studied how lights placed on key points of the human body emphasize movement. The interdisciplinary combination of design, technology, and social behavior creates a captivating and engaging work of art. In the future, I hope to work on similar projects in a studio setting.

LO: My Inspiration

Tender Breeze by Mimi Park is the artwork that introduced me to interactive art. It is not so much a computer-based artwork built around coding as a work that leans on electrical engineering. What I admire most about this project is how it interactively blows a breeze toward the viewer. When a visitor steps toward the human figure, the fan starts to turn and blow air, and the fabric surrounding the fan gently sways. This interactivity relies on a range sensor, which lets a machine detect how close an object is to the sensor. Range sensors can be adapted for many purposes, such as an automatic light switch. Unfortunately, her blog gives little detail about how the installation was built. Given its small size, she was likely the sole producer of Tender Breeze. This artwork inspires me to explore interactive and computational work that uses both found objects and machines, as well as code I write myself, which I hope to be able to do after taking 15-104.

https://www.mimipark.art/tender-breeze

arduino, range sensor, plaster, concrete, fabric, air dry clay, straw cleaner, shoes, moth ball, fresh flower
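To make the range-sensor behavior described for Tender Breeze more concrete, here is a minimal sketch of the trigger logic in Python. It is not Mimi Park's code, which is not published; the two distance thresholds and the functions `update_fan` and `simulate` are hypothetical. Using separate turn-on and turn-off distances (hysteresis) is one common way to keep a fan from flickering on and off when a visitor stands right at the edge of the sensor's range.

```python
# Hypothetical thresholds: Tender Breeze's real values are not documented.
ON_DISTANCE_CM = 80.0    # fan turns on when a visitor comes closer than this
OFF_DISTANCE_CM = 100.0  # fan turns off only once the visitor is farther than this


def update_fan(fan_on: bool, distance_cm: float) -> bool:
    """Return the fan's next on/off state given the latest distance reading."""
    if not fan_on and distance_cm < ON_DISTANCE_CM:
        return True   # someone stepped toward the figure
    if fan_on and distance_cm > OFF_DISTANCE_CM:
        return False  # the visitor walked away
    return fan_on     # inside the gap between thresholds: keep the current state


def simulate(readings_cm: list[float]) -> list[bool]:
    """Feed a sequence of sensor readings through update_fan, starting with the fan off."""
    fan_on = False
    states = []
    for distance in readings_cm:
        fan_on = update_fan(fan_on, distance)
        states.append(fan_on)
    return states


if __name__ == "__main__":
    # A visitor approaches, lingers near the edge, then leaves.
    print(simulate([150, 90, 70, 90, 110]))  # [False, False, True, True, False]
```

On an actual Arduino, the same decision would typically sit inside the sketch's `loop()` function, reading the sensor on each pass and switching the fan's output pin accordingly.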

LO: My Inspiration

A few months ago, I went to the Immersive Van Gogh Exhibition, which displayed Van Gogh’s iconic works through a combination of digital animation and music. I really enjoyed the exhibition because I found the technological element and the digitalization of the art extremely intriguing, especially since paintings are usually just framed and hung on a wall. I believe the exhibition was organized by Massimiliano Siccardi and his team. I’m not sure about the creation process, but it probably involved some programming and software to animate the art. I think this project shows how artworks and museums are becoming increasingly interactive and making smarter use of technology. Furthermore, it demonstrates that creativity shouldn’t be limited to traditional mediums and that there are endless ways to approach or represent art.

LO: My Inspiration

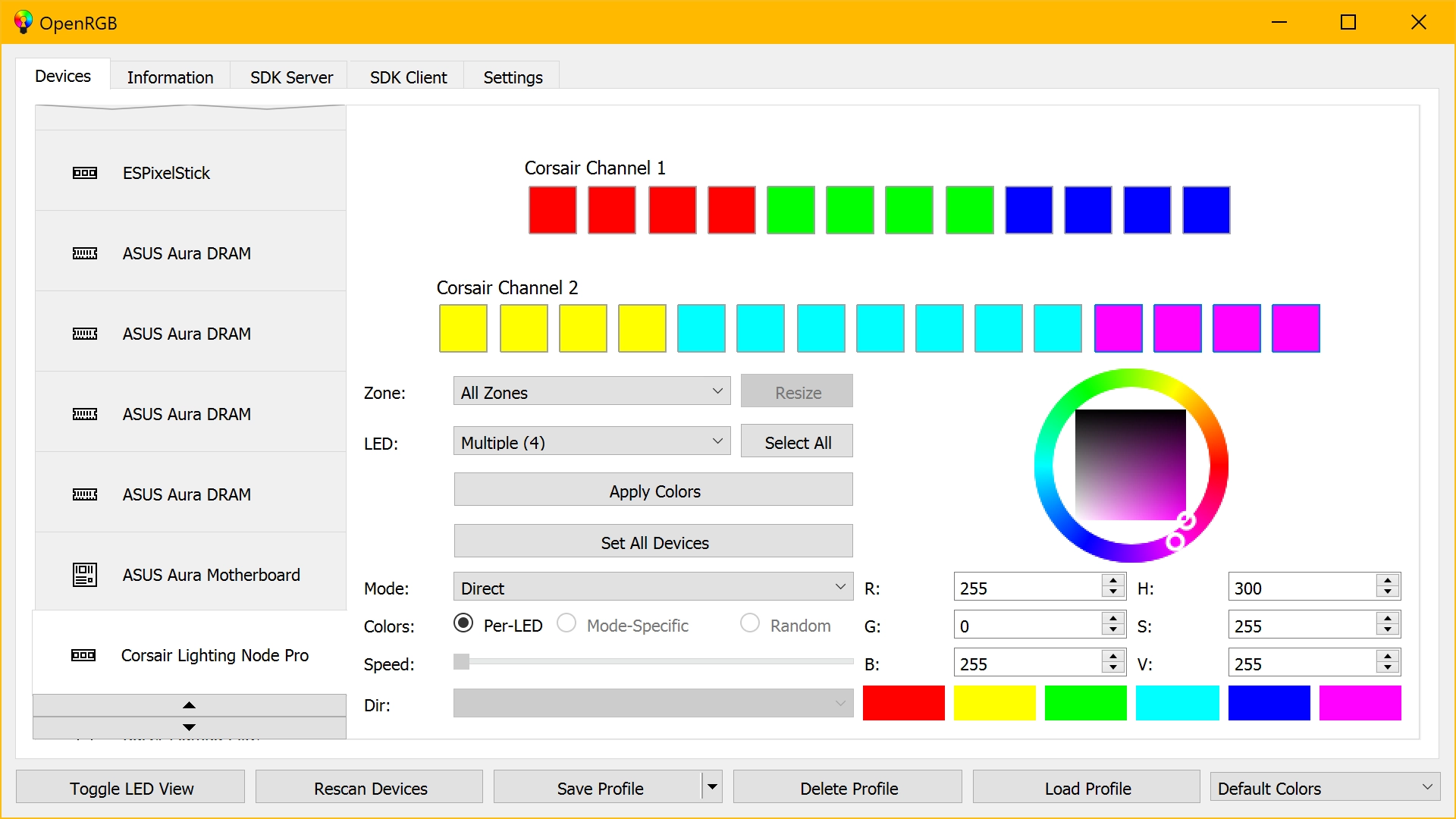

The computational project I found inspirational is an application called OpenRGB. When people build custom computers, they often have controllable RGB lights on the fans, in the case, on the CPU cooler, and on other components. OpenRGB lets you control all of them at once, regardless of which program each component is advertised as being controlled with. OpenRGB was originally created by programmer Adam Honse, and its first version, 0.1, was released about a year ago. I am not exactly sure what programming language it uses, but because it is open source, support for devices from many different RGB brands has been added over time, bringing them together in one simple, easy-to-use program. I admire the project because it makes controlling your computer’s lights so much easier. Without it, PC owners would either have to spend extra money to make sure all of their RGB-lit components came from the same brand, or constantly juggle between different RGB control programs.

https://openrgb.org/
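The core idea behind a tool like OpenRGB, one interface in front of many vendor-specific devices, can be illustrated with a small Python sketch. This is a toy example, not OpenRGB's actual code or API: the classes `RGBDevice`, `FanController`, and `CaseLightStrip` and the function `set_all` are hypothetical, and each "device" just stores its color instead of talking to real hardware.

```python
from abc import ABC, abstractmethod


class RGBDevice(ABC):
    """Hypothetical common interface that every supported device is wrapped in."""

    def __init__(self, name: str) -> None:
        self.name = name

    @abstractmethod
    def set_color(self, r: int, g: int, b: int) -> None:
        """Set the whole device to one RGB color (each channel 0-255)."""


class FanController(RGBDevice):
    """Hypothetical vendor A: its 'driver' expects a hex color string."""

    def __init__(self, name: str) -> None:
        super().__init__(name)
        self.hex_color = "#000000"

    def set_color(self, r: int, g: int, b: int) -> None:
        # Translate the shared (r, g, b) call into this vendor's format.
        self.hex_color = f"#{r:02x}{g:02x}{b:02x}"


class CaseLightStrip(RGBDevice):
    """Hypothetical vendor B: stores one (r, g, b) tuple per LED."""

    def __init__(self, name: str, led_count: int) -> None:
        super().__init__(name)
        self.leds = [(0, 0, 0)] * led_count

    def set_color(self, r: int, g: int, b: int) -> None:
        # Same call, different internal representation.
        self.leds = [(r, g, b)] * len(self.leds)


def set_all(devices: list[RGBDevice], r: int, g: int, b: int) -> None:
    """Apply one color to every device, whatever brand it is."""
    for device in devices:
        device.set_color(r, g, b)


if __name__ == "__main__":
    fan = FanController("CPU fan")
    strip = CaseLightStrip("case strip", led_count=3)
    set_all([fan, strip], 255, 128, 0)
    print(fan.hex_color)  # #ff8000
    print(strip.leds)
```

The design choice being illustrated is that only each device class knows its vendor's format, so adding support for a new brand means writing one new class while `set_all` stays unchanged.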

LO: My Inspiration

In my view, Siri is a good example of an interactive project. It is amazing that a machine can accurately recognize people’s voices and carry out the corresponding commands. Siri captures a voice, recognizes the words spoken, and processes this information into commands that guide the assistant to perform the actions the user expects. I really admire how much effort developers have put into this, since every step of the process requires a great deal of sophisticated code. I believe Siri was developed because people want to make technology do what they wish more conveniently. Siri’s voice recognition and its ability to learn from users’ behavior also suggest that machine learning could be the direction of future development. Basically, technology exists to help people get things done. Machine learning not only allows people to have machines do whatever we ask, but also gives them machines that can learn from past actions and “improve” themselves for greater convenience.
