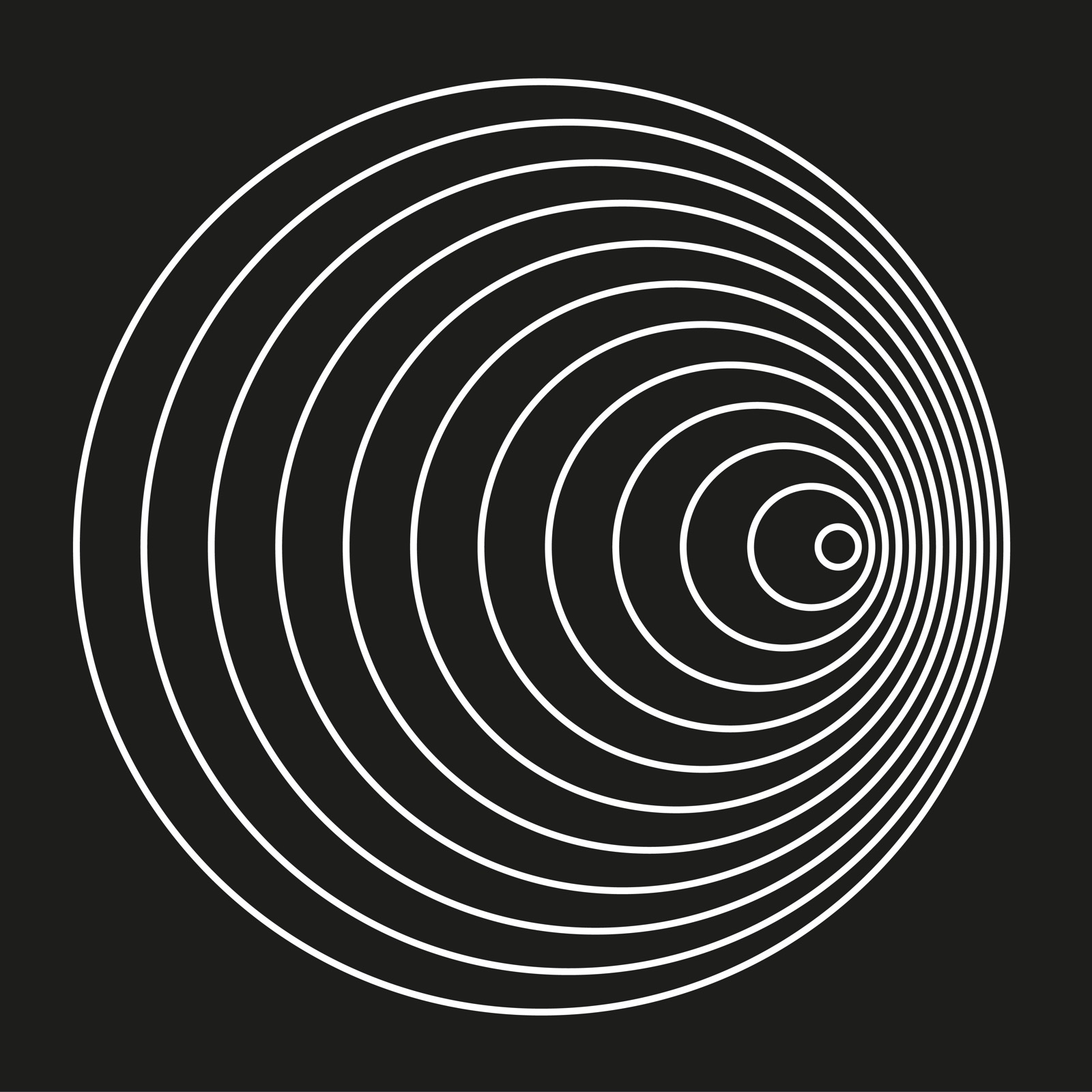

“Soft Sound” is a research project by EJTECH (Esteban de la Torre and Judit Eszter Kárpáti) that brings together textiles and sound and explores ways of enhancing multi-sensory experiences. I admire this project because it achieves its goal of using textile as an audio-emitting surface. The artists created soft speakers and embedded them in fabric so that it emanates sonic vibrations, allowing viewers to perceive the audio through both hearing and touch. Touch works because the sound pulsates, which makes the host textile throb. To make the speakers, the artists vinyl-cut flat coil shapes out of copper and silver, applied them to different textile surfaces, and ran an alternating current through them. The coils are connected to amplifiers and paired with a permanent magnet; the magnet's field pushes the current-carrying coil back and forth, so the attached textile moves with it and produces sound waves. The artists’ sensibilities are clearly manifest in the final form: the simple, crisp, geometric shapes printed on the fabric resemble the visual language of their other works. I find this project admirable because it makes a non-physical entity tangible, and seeing a small piece of cloth move and create sound is amazing to me.

Soft Sound for TechTextil – Sounding Textile series from ejtech on Vimeo.

Link: http://www.creativeapplications.net/sound/soft-sound-textiles-as-electroacoustic-transducers/
