afukuda-project-04

```javascript
/*
 * Name           | Ai Fukuda
 * Course Section | C
 * Email          | afukuda@andrew.cmu.edu
 * Assignment     | 04-b
 */

function setup() {
  createCanvas(400, 300);
}

function draw() {
  background(206, 236, 236);

  var x1;       // x-coordinate where each line meets the blue diamond
  var y1 = 160; // offset for the descending edges: 120 + (y1 - x1) = 280 - x1, y1 + 260 - x1 = 420 - x1

  // PURPLE LINES (THICK)
  strokeWeight(1.5);
  stroke(204, 178, 213);

  for (x1 = 130; x1 < 201; x1 += 10) { // top-left lines
    line(100, 50, x1, 120 + (y1 - x1));
  }
  for (x1 = 200; x1 < 271; x1 += 10) { // top-right lines
    line(300, 50, x1, x1 - 120);
  }
  for (x1 = 130; x1 < 201; x1 += 10) { // bottom-left lines
    line(100, 250, x1, x1 + 20);
  }
  for (x1 = 200; x1 < 271; x1 += 10) { // bottom-right lines
    line(300, 250, x1, 420 - x1);
  }

  // PURPLE LINES (THIN), all fanning into the center (200, 150)
  strokeWeight(1);

  for (x1 = 130; x1 < 201; x1 += 10) { // top-left lines
    line(200, 150, x1, 120 + (y1 - x1));
  }
  for (x1 = 200; x1 < 271; x1 += 10) { // top-right lines
    line(200, 150, x1, x1 - 120);
  }
  for (x1 = 130; x1 < 201; x1 += 10) { // bottom-left lines
    line(200, 150, x1, x1 + 20);
  }
  for (x1 = 200; x1 < 271; x1 += 10) { // bottom-right lines
    line(200, 150, x1, y1 + 260 - x1);
  }

  // ORANGE LINES
  strokeWeight(1);
  stroke(253, 205, 167);

  // top set of lines
  for (x1 = 130; x1 < 201; x1 += 10) { // top-left lines
    line(200, 20, x1, 120 + (y1 - x1));
  }
  for (x1 = 200; x1 < 271; x1 += 10) { // top-right lines
    line(200, 20, x1, x1 - 120);
  }

  // left set of lines
  for (x1 = 130; x1 < 201; x1 += 10) { // left-top lines
    line(70, 150, x1, 120 + (y1 - x1));
  }
  for (x1 = 130; x1 < 201; x1 += 10) { // left-bottom lines
    line(70, 150, x1, x1 + 20);
  }

  // bottom set of lines
  for (x1 = 130; x1 < 201; x1 += 10) { // bottom-left lines
    line(200, 280, x1, x1 + 20);
  }
  for (x1 = 200; x1 < 271; x1 += 10) { // bottom-right lines
    line(200, 280, x1, y1 + 260 - x1);
  }

  // right set of lines
  for (x1 = 200; x1 < 271; x1 += 10) { // top-right lines
    line(330, 150, x1, x1 - 120);
  }
  for (x1 = 200; x1 < 271; x1 += 10) { // bottom-right lines
    line(330, 150, x1, y1 + 260 - x1);
  }

  // BLUE LINES (the diamond outline)
  strokeWeight(1);
  stroke(140, 164, 212);

  line(130, 150, 200, 80);
  line(200, 80, 270, 150);
  line(130, 150, 200, 220);
  line(200, 220, 270, 150);

  // BLUE VERTICES
  /*
  strokeWeight(3);
  stroke(140, 164, 212); // point() is colored by stroke(), not fill()

  point(100, 50);   // primary geometry vertices (purple)
  point(300, 50);
  point(100, 250);
  point(300, 250);

  point(200, 20);   // secondary geometry vertices (orange)
  point(70, 150);
  point(200, 280);
  point(330, 150);

  point(200, 150);  // center of geometry
  */
}
```
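Each of the sixteen loops above follows one recipe: fan lines from an anchor point to eight evenly spaced points along one edge of the blue diamond. A minimal sketch of pulling that recipe into a helper is shown here, assuming the original 10-pixel spacing. The helpers `edgePoints` and `fanLines` and the constants `CX`, `CY`, `HALF`, `STEPS`, `CORNER`, and `REACH` are hypothetical names, not part of the original project or of p5.js. With the figure described this way, changing `CX`, `CY`, or `HALF` moves or resizes the whole drawing.

```javascript
// Hypothetical variables describing the figure (not in the original sketch).
const CX = 200, CY = 150; // center of the geometry
const HALF = 70;          // half-width of the blue diamond
const STEPS = 7;          // 7 intervals, so 8 endpoints per edge as in the loops above
const CORNER = 100;       // purple anchors sit CORNER px from the center in x and y
const REACH = 130;        // orange anchors sit REACH px from the center

// Return steps + 1 evenly spaced points from (x0, y0) to (x1, y1), inclusive.
function edgePoints(x0, y0, x1, y1, steps) {
  const pts = [];
  for (let i = 0; i <= steps; i++) {
    pts.push([x0 + ((x1 - x0) * i) / steps, y0 + ((y1 - y0) * i) / steps]);
  }
  return pts;
}

// Draw one fan: a line from the anchor (ax, ay) to every point in pts.
// Relies on p5.js's global line(), so call it from draw().
function fanLines(ax, ay, pts) {
  for (const [px, py] of pts) {
    line(ax, ay, px, py);
  }
}

function setup() {
  createCanvas(400, 300);
}

function draw() {
  background(206, 236, 236);

  // Diamond vertices and the endpoints along each of its four edges.
  const vTop = [CX, CY - HALF], vRight = [CX + HALF, CY];
  const vBottom = [CX, CY + HALF], vLeft = [CX - HALF, CY];
  const topLeft = edgePoints(...vLeft, ...vTop, STEPS);
  const topRight = edgePoints(...vTop, ...vRight, STEPS);
  const bottomLeft = edgePoints(...vLeft, ...vBottom, STEPS);
  const bottomRight = edgePoints(...vBottom, ...vRight, STEPS);

  // Purple lines (thick): one fan from each outer corner.
  stroke(204, 178, 213);
  strokeWeight(1.5);
  fanLines(CX - CORNER, CY - CORNER, topLeft);
  fanLines(CX + CORNER, CY - CORNER, topRight);
  fanLines(CX - CORNER, CY + CORNER, bottomLeft);
  fanLines(CX + CORNER, CY + CORNER, bottomRight);

  // Purple lines (thin): every edge fans into the center.
  strokeWeight(1);
  for (const edge of [topLeft, topRight, bottomLeft, bottomRight]) {
    fanLines(CX, CY, edge);
  }

  // Orange lines: each outer anchor fans to its two nearest edges.
  stroke(253, 205, 167);
  fanLines(CX, CY - REACH, topLeft);
  fanLines(CX, CY - REACH, topRight);
  fanLines(CX - REACH, CY, topLeft);
  fanLines(CX - REACH, CY, bottomLeft);
  fanLines(CX, CY + REACH, bottomLeft);
  fanLines(CX, CY + REACH, bottomRight);
  fanLines(CX + REACH, CY, topRight);
  fanLines(CX + REACH, CY, bottomRight);

  // Blue lines: the diamond outline.
  stroke(140, 164, 212);
  line(...vLeft, ...vTop);
  line(...vTop, ...vRight);
  line(...vLeft, ...vBottom);
  line(...vBottom, ...vRight);
}
```

Interpolating with `((x1 - x0) * i) / steps` rather than multiplying by `i / steps` keeps the default coordinates exact integers, so the endpoints match the original loops.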

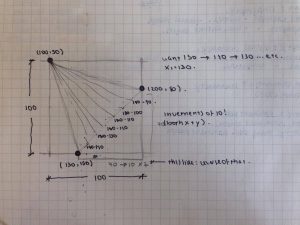

I generated this string art by declaring just two variables: `x1`, the x-coordinate where each line meets the blue diamond, and `y1`, an offset used in the y-coordinates. I began with the top-left set of purple curves and then adapted those loops for the other edges and anchor points to build the full geometry. Two things I could improve: using rotation to make the code much simpler, and replacing the hard-coded coordinates with variables so the geometry becomes dynamic.
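The rotation idea works because the figure has four-fold symmetry: turning the top-left quadrant by 90°, 180°, and 270° about the center (200, 150) reproduces the other three quadrants. A minimal sketch of that approach follows, assuming the same anchors and 10-pixel spacing as the original; `quadrantSegments`, `rotateQuarter`, `buildSegments`, `LAYERS`, and `STYLE` are hypothetical names. It applies the rotation with plain arithmetic so the segment list can be checked outside the browser; on the drawing side, p5.js's `push()`, `translate()`, `rotate()`, and `pop()` could do the same job.

```javascript
// Hypothetical rotation-based rewrite: describe one quadrant, rotate it 4 times.
const CENTER = [200, 150];

// Segments for the top-left quadrant, in coordinates relative to CENTER.
// Each entry is [layer, x1, y1, x2, y2].
function quadrantSegments() {
  const segs = [];
  for (let i = 0; i <= 7; i++) {
    const px = -70 + 10 * i; // point on the top-left diamond edge
    const py = -10 * i;
    segs.push(["purpleThick", -100, -100, px, py]); // corner anchor (100, 50)
    segs.push(["purpleThin", 0, 0, px, py]);        // center anchor
    segs.push(["orange", 0, -130, px, py]);         // top anchor (200, 20)
    segs.push(["orange", -130, 0, px, py]);         // left anchor (70, 150)
  }
  segs.push(["blue", -70, 0, 0, -70]);              // the diamond edge itself
  return segs;
}

// Rotate (x, y) by k quarter turns; each turn maps (x, y) to (-y, x),
// which is clockwise on screen because the y-axis points down.
function rotateQuarter(x, y, k) {
  for (let i = 0; i < k; i++) {
    [x, y] = [-y, x];
  }
  return [x, y];
}

// Draw order of the original sketch: thick purple, thin purple, orange, blue.
const LAYERS = ["purpleThick", "purpleThin", "orange", "blue"];

// All segments in canvas coordinates, grouped by layer.
function buildSegments() {
  const quad = quadrantSegments();
  const out = [];
  for (const layer of LAYERS) {
    for (let k = 0; k < 4; k++) {
      for (const [l, x1, y1, x2, y2] of quad) {
        if (l !== layer) continue;
        const [ax, ay] = rotateQuarter(x1, y1, k);
        const [bx, by] = rotateQuarter(x2, y2, k);
        out.push([layer, ax + CENTER[0], ay + CENTER[1], bx + CENTER[0], by + CENTER[1]]);
      }
    }
  }
  return out;
}

const STYLE = {
  purpleThick: { rgb: [204, 178, 213], weight: 1.5 },
  purpleThin: { rgb: [204, 178, 213], weight: 1 },
  orange: { rgb: [253, 205, 167], weight: 1 },
  blue: { rgb: [140, 164, 212], weight: 1 },
};

function setup() {
  createCanvas(400, 300);
}

function draw() {
  background(206, 236, 236);
  for (const [layer, x1, y1, x2, y2] of buildSegments()) {
    stroke(...STYLE[layer].rgb);
    strokeWeight(STYLE[layer].weight);
    line(x1, y1, x2, y2);
  }
}
```

Grouping by layer before rotating keeps the original drawing order (every thick purple line is drawn before any orange line), which a simple per-quadrant loop around `rotate()` would not.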

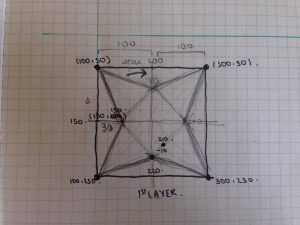

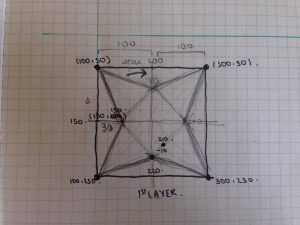

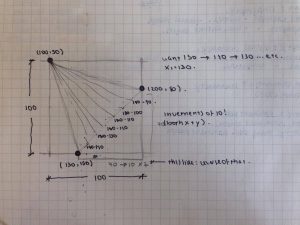

Process work:

![[OLD FALL 2017] 15-104 • Introduction to Computing for Creative Practice](../../../../wp-content/uploads/2020/08/stop-banner.png)