//Heidi Chung

//hschung

//Section A

//Final-Project

var clouds = [];

var bobaY = 0; // height of boba

var bobaDy = 0; // the vertical velocity of boba

var offset = 0;

var themeSong; //variable for song to be played

var amplitude; //measures the volume of the song

var blush = 200; //variable to make boba change color and "blush"

function preload() {

themeSong = loadSound("https://courses.ideate.cmu.edu/15-104/f2017/wp-content/uploads/2017/12/bobasong.mp3");

//preloading the song Boba Beach by Grynperet

}

function newcloud(px, py, pw) { //object for clouds

return {x: px, y: py, w: pw};

}

function setup() {

createCanvas(450, 400);

clouds.push(newcloud(600, 200, 200));//adding a new cloud

themeSong.play(); //play the song

amplitude = new p5.Amplitude(); //capturing the volume of the song

}

// compute the location of the right end of a cloud

function cloudRight(p) {

return p.x + p.w;

}

// return the last cloud object

function cloudLast() {

return clouds[clouds.length - 1];

}

function draw() {

background(253, 225, 200);

var bobaX = width / 2;

drawGreeting(); //calling the greeting and its "shadow" to be drawn

drawBoba(); //calling the boba to be drawn

push();

noStroke(); //multiple layers to achieve pleasant transparency

fill(255, 99);//white sea foam

rect(0, height - 70, width, 70);

fill(177, 156, 217, 90);//lilac sea foam 2

rect(0, height - 80, width, 80);

fill(220, 200, 200, 90);//sea foam 3

rect(0, height - 90, width, 90);

fill(220, 200, 200);//solid background of sea

rect(0, height - 60, width, 60);

fill(177, 156, 217, 90);//the taro sea

rect(0, height - 60, width, 60);

pop();

stroke(255);

strokeWeight(3);

var level = amplitude.getLevel();

var cloudSize = map(level, 0, 1, 30, 50);

//clouds' height wiggles in response to song volume

for (var i = 0; i < clouds.length; i++) {

var p = clouds[i];

noStroke();

fill(255); //white clouds

ellipse(p.x - offset, p.y, p.w, cloudSize);

}

// if first cloud is offscreen to left, remove it

if (clouds.length > 0 && cloudRight(clouds[0]) < offset) {

clouds.shift();

}

// if last cloud is totally within canvas, make a new one

if (cloudRight(cloudLast()) - offset < width) {

var p = newcloud(cloudRight(cloudLast()), // start location

random(50, 320), // height of new cloud

140); // all clouds have width 140 for now

clouds.push(p); //add to array of clouds

}

noStroke();

// move the "landscape"

// move and draw the "boba"

//which cloud is current? linear search (!) through clouds

var pindex = 0;

while (cloudRight(clouds[pindex]) - offset + 20 < bobaX) {

pindex += 1;

}

//now pindex is index of the cloud in the middle of canvas

//find the cloud height

var py = clouds[pindex].y;

//if boba is above a cloud, fall toward it, but don't go past it

if (bobaY <= py) {

bobaY = min(py, bobaY + bobaDy); // change Y by Dy

} else { // if we are below the cloud, fall to the ground

//a boba below a cloud must not jump up through it, so force Dy non-negative

if (bobaDy < 0) {

bobaDy = 0;

}

bobaY = min(height, bobaY + bobaDy);

}

//if the boba falls from a cloud, it will "jump" to the next cloud

if (bobaY >= height) {

bobaY = 0;

}

//move the "landscape"

offset += 3;

//accelerate boba with gravity

bobaDy = bobaDy + 1;

}

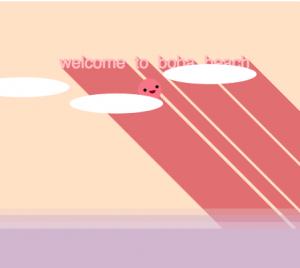

function drawGreeting() {

var level = amplitude.getLevel();

var greetingSize = map(level, 0, 1, 25, 30); //control limits of greeting size

for (var i = 0; i < width; i++) {

fill(210, 120, 120);

textSize(greetingSize);

text("welcome to boba beach", width/5 + i, height/4 + i); //streaked greetings

}

fill(255, 196, 197); //light pink greeting

strokeWeight(3);

textSize(greetingSize);

text("welcome to boba beach", width/5, height/4);

}

function drawBoba() {

var bobaX = width / 2;

var level = amplitude.getLevel();

var bobaSize = map(level, 0, 1, 35, 85); //control limits of boba size

var eyeSize = map(level, 0, 1, 5, 10); //control limits of eye size

//traits fluctuate in size depending on the song's volume.

var mouthSize = map(level, 0, 1, 7, 15); //control limits of mouth size

noStroke();

fill(blush, 130, 140); //boba "blushes" when you make it jump by pressing any key

ellipse(bobaX, bobaY - 20, bobaSize, bobaSize); //jumping boba

fill(0);

ellipse(bobaX - 7, bobaY - 20, eyeSize, eyeSize); //left eye

ellipse(bobaX + 11, bobaY - 25, eyeSize, eyeSize); //right eye

fill(120, 0, 32); //happy mouth

arc(bobaX + 4, bobaY - 20, mouthSize + 3, mouthSize + 3, 0, PI + 50, CHORD);

}

function keyPressed() {

bobaDy = -15; //velocity set to up when key is pressed

if (blush === 200) { //changing the color of the boba

blush = 255; //if the boba's R value is 200, and a key is pressed,

} else { //change the R value to 255, and vice-versa.

blush = 200;

}

}

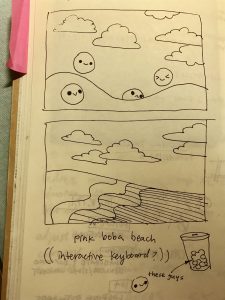

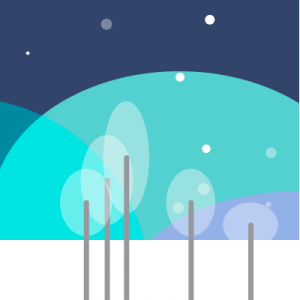

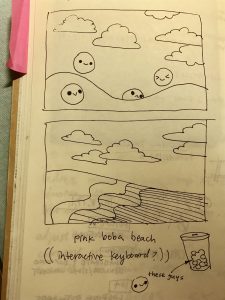

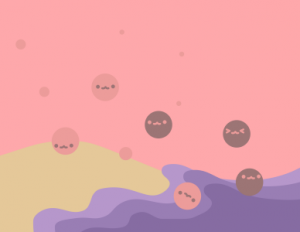

For my final project, I wanted to create a cute, simple, and clean animation with an interactive touchpoint, perhaps pressing keys or clicking the mouse. My goal was to make something that brings the user a sense of delight. Over the summer I tried to animate something in After Effects to the soundtrack “Boba Beach” but didn’t finish, and that led me to want to create a cute thing for the final project. I was inspired by the delight and familiarity of platform games, and thought they would mesh well with my idea of cute visuals that you can interact with if you so choose. Unlike in a classic platform game, I chose not to make the boba “die” when it falls off the clouds; instead, it reappears at the top of the canvas and falls again.
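The "no dying" rule can be sketched outside of p5 as a small pure function. This is only an illustration of the per-frame vertical update in `draw()`: the name `stepBoba` and the `state` object are hypothetical and do not appear in the sketch itself, and the cloud height is passed in rather than looked up.

```javascript
// Hypothetical pure-function version of the boba's per-frame vertical update.
// state: { y, dy } (position and vertical velocity), cloudY: height of the
// cloud under the boba, canvasHeight: bottom of the canvas.
function stepBoba(state, cloudY, canvasHeight) {
  var y = state.y;
  var dy = state.dy;
  if (y <= cloudY) {
    // above the cloud: fall toward it, but never pass through it
    y = Math.min(cloudY, y + dy);
  } else {
    // below the cloud: cancel any upward velocity and fall to the ground
    if (dy < 0) {
      dy = 0;
    }
    y = Math.min(canvasHeight, y + dy);
  }
  if (y >= canvasHeight) {
    // instead of "dying", the boba reappears at the top of the canvas
    y = 0;
  }
  dy = dy + 1; // gravity
  return { y: y, dy: dy };
}

// Drop the boba from the top onto a cloud at y = 200 on a 400px canvas.
var boba = { y: 0, dy: 0 };
for (var frame = 0; frame < 100; frame++) {
  boba = stepBoba(boba, 200, 400);
}
console.log(boba.y);
```

Returning a new state each frame keeps the rule easy to check by hand; the sketch itself updates the globals `bobaY` and `bobaDy` directly.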

The jumping boba wiggles in size with the volume of the song, as do its eyes and mouth. The passing clouds and the greeting in the background wiggle with the volume too. When you press any key, the boba jumps and changes color slightly to “blush.” I am pleased with the cute aesthetics and interaction I was able to make.
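As a rough illustration of how the volume drives the wiggling, the snippet below reproduces the size ranges from the sketch in plain JavaScript. `linearMap` is a hypothetical stand-in for p5's `map()`, assumed here to be a simple linear rescale, and `sizesForLevel` and `toggleBlush` are names invented for this example; `level` plays the role of `amplitude.getLevel()`, which is taken to lie between 0 and 1.

```javascript
// Hypothetical stand-in for p5's map(): linearly rescale value
// from the range [inMin, inMax] to the range [outMin, outMax].
function linearMap(value, inMin, inMax, outMin, outMax) {
  return outMin + (value - inMin) * (outMax - outMin) / (inMax - inMin);
}

// Sizes for a given volume level, using the same limits as the sketch.
function sizesForLevel(level) {
  return {
    boba: linearMap(level, 0, 1, 35, 85),
    eye: linearMap(level, 0, 1, 5, 10),
    mouth: linearMap(level, 0, 1, 7, 15),
    cloud: linearMap(level, 0, 1, 30, 50),
    greeting: linearMap(level, 0, 1, 25, 30)
  };
}

// Flip between the two blush values, as keyPressed() does.
function toggleBlush(blush) {
  return blush === 200 ? 255 : 200;
}

console.log(sizesForLevel(0.5)); // sizes at half volume
console.log(toggleBlush(200));
```

Because every trait is mapped from the same `level`, the boba, its face, the clouds, and the greeting all pulse together; only the output ranges differ.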
