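var forwardAmt; // length of the next segment, chosen at random in draw()
var turnAmt; // turn applied after each segment, in degrees
var turtles = []; // one turtle per mouse click
var colorBank = ["lightskyblue", "darksalmon", "gold", "coral", "skyblue", "cadetblue", "yellowgreen", "tomato"];
var colors = []; // colors[i] is the stroke color of turtles[i]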

function setup() {
  createCanvas(480, 480);
  background(220);
  blendMode(OVERLAY); // overlapping strokes blend with what is already drawn
  frameRate(10);
}

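function draw() {
  // Each frame, every turtle draws one segment of random length, then turns.
  for (var i = 0; i < turtles.length; i++) {
    forwardAmt = random(1, 50); // segment length in pixels
    turnAmt = random(-100, 100); // degrees; negative values turn left
    turtles[i].setColor(colors[i]);
    turtles[i].setWeight(10);
    turtles[i].penDown();
    turtles[i].forward(forwardAmt);
    turtles[i].right(turnAmt);
    turtles[i].penUp();
  }
}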

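function mousePressed() {
  // Start a new turtle at the click position and give it a random color.
  turtles.push(makeTurtle(mouseX, mouseY));
  colors.push(random(colorBank));
  // Debug output: the available colors and the colors assigned so far.
  print(colorBank);
  print(colors);
}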

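function keyPressed() {
  // Accept either case, since some p5.js versions report the typed character.
  if (key === "R" || key === "r") {
    turtles = [];
    colors = []; // reset colors too, so colors[i] stays matched to turtles[i]
    // Repaint in NORMAL mode so the background fully covers the old strokes.
    blendMode(NORMAL);
    background(220);
    blendMode(OVERLAY);
  }
}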

// turtle graphics
function turtleLeft(d){this.angle-=d;}function turtleRight(d){this.angle+=d;}
function turtleForward(p){var rad=radians(this.angle);var newx=this.x+cos(rad)*p;
var newy=this.y+sin(rad)*p;this.goto(newx,newy);}function turtleBack(p){
this.forward(-p);}function turtlePenDown(){this.penIsDown=true;}
function turtlePenUp(){this.penIsDown = false;}function turtleGoTo(x,y){
if(this.penIsDown){stroke(this.color);strokeWeight(this.weight);
line(this.x,this.y,x,y);}this.x = x;this.y = y;}function turtleDistTo(x,y){
return sqrt(sq(this.x-x)+sq(this.y-y));}function turtleAngleTo(x,y){
var absAngle=degrees(atan2(y-this.y,x-this.x));
var angle=((absAngle-this.angle)+360)%360.0;return angle;}
function turtleTurnToward(x,y,d){var angle = this.angleTo(x,y);if(angle< 180){
this.angle+=d;}else{this.angle-=d;}}function turtleSetColor(c){this.color=c;}
function turtleSetWeight(w){this.weight=w;}function turtleFace(angle){
this.angle = angle;}function makeTurtle(tx,ty){var turtle={x:tx,y:ty,
angle:0.0,penIsDown:true,color:color(128),weight:1,left:turtleLeft,
right:turtleRight,forward:turtleForward, back:turtleBack,penDown:turtlePenDown,
penUp:turtlePenUp,goto:turtleGoTo, angleto:turtleAngleTo,
turnToward:turtleTurnToward,distanceTo:turtleDistTo, angleTo:turtleAngleTo,
setColor:turtleSetColor, setWeight:turtleSetWeight,face:turtleFace};
return turtle;}

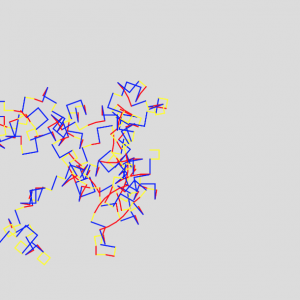

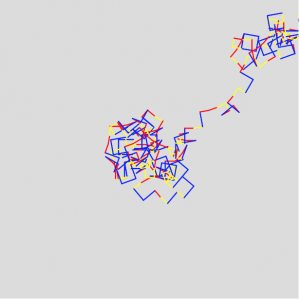

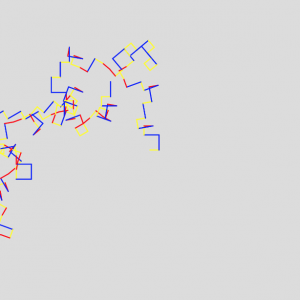

I was inspired by the look of stylized subway maps, particularly the dots connected by segments, and wanted to create something that partially mimicked it. I randomized the angles and segment lengths and added user interaction: click to add another turtle and its path, and press R to reset the canvas.
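For anyone curious about the geometry behind each segment, here is a minimal sketch of one random step in plain JavaScript, without p5.js. The names `stepTurtle`, `randomBetween`, and `randomPath` are hypothetical helpers written for this illustration; they are not part of the sketch or the turtle library. The ranges (1 to 50 pixels forward, -100 to 100 degrees of turn) are the ones the sketch uses.

```javascript
// Hypothetical standalone helpers (illustration only, not part of the sketch).

// Advance a turtle-like state by one segment, then turn it.
// Angles are in degrees; a positive turn is a right turn, as in the sketch.
function stepTurtle(state, forwardAmt, turnAmt) {
  var rad = state.angle * Math.PI / 180; // degrees to radians
  return {
    x: state.x + Math.cos(rad) * forwardAmt,
    y: state.y + Math.sin(rad) * forwardAmt,
    angle: state.angle + turnAmt
  };
}

// Uniform random number in [min, max).
function randomBetween(min, max) {
  return min + Math.random() * (max - min);
}

// Walk one path from a starting point and collect its vertices.
function randomPath(startX, startY, steps) {
  var state = { x: startX, y: startY, angle: 0 };
  var points = [{ x: state.x, y: state.y }];
  for (var i = 0; i < steps; i++) {
    state = stepTurtle(state, randomBetween(1, 50), randomBetween(-100, 100));
    points.push({ x: state.x, y: state.y });
  }
  return points;
}
```

Calling `randomPath(240, 240, 5)` returns six points: the start plus one vertex per segment. Connecting consecutive points with thick lines would give the same kind of jagged, map-like path that each turtle draws.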
