//Crystal Xue

//15104-section B

//luyaox@andrew.cmu.edu

//Project-09

var underlyingImage;

var xarray = [];

var yarray = [];

function preload() {

var myImageURL = "https://i.imgur.com/Z0zPb5S.jpg?2";

underlyingImage = loadImage(myImageURL);

}

function setup() {

createCanvas(500, 500);

background(0);

underlyingImage.loadPixels();

frameRate(20);

}

function draw() {

var px = random(width);

var py = random(height);

var ix = constrain(floor(px), 0, width-1);

var iy = constrain(floor(py), 0, height-1);

var theColorAtLocationXY = underlyingImage.get(ix, iy);

stroke(theColorAtLocationXY);

strokeWeight(random(1,5));

var size1 = random(5,15);

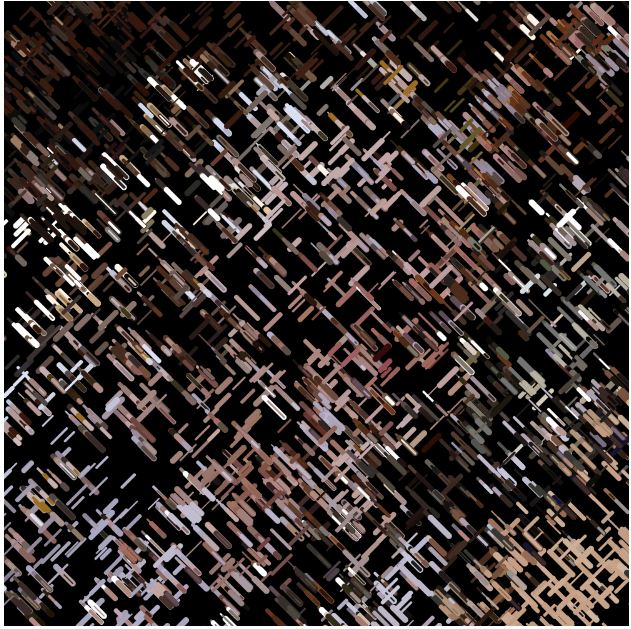

//brush strokes from bottom left to top right diagnal direction

line(px, py, px - size1, py + size1);

var theColorAtTheMouse = underlyingImage.get(mouseX, mouseY);

var size2 = random(1,8);

for (var i = 0; i < xarray.length; i++) {

stroke(theColorAtTheMouse);

strokeWeight(random(1,5));

//an array of brush strokes from top left to bottom right diagnal direction controlled by mouse

line(xarray[i], yarray[i],xarray[i]-size2,yarray[i]-size2);

size2 = size2 + 1;

if (i > 10) {

xarray.shift();

yarray.shift();

}

}

}

function mouseMoved(){

xarray.push(mouseX);

yarray.push(mouseY);

}

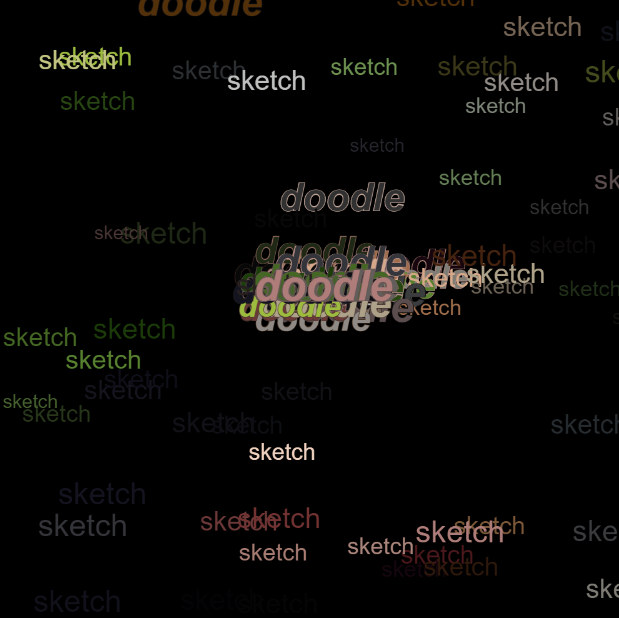

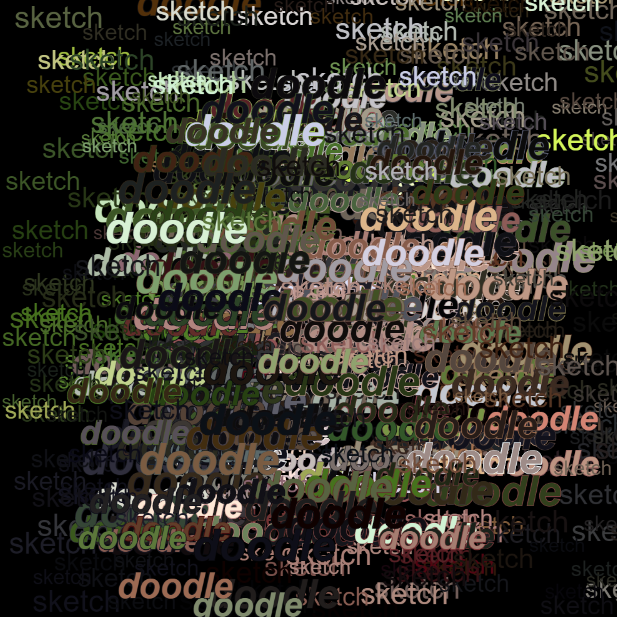

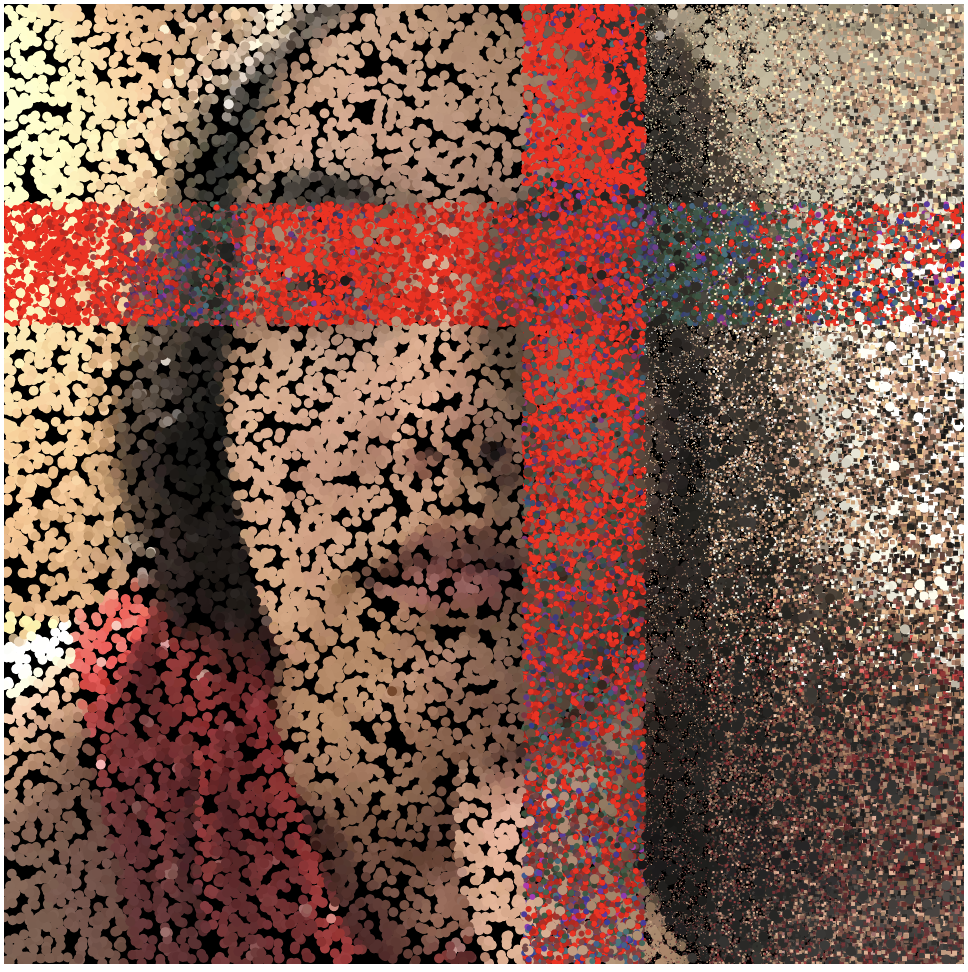
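The mouse trail in `draw()` is meant to hold only a dozen or so of the most recent positions, with each stroke one pixel longer than the one before it. The plain-JavaScript sketch below (no p5.js needed) isolates that bookkeeping; `pushBounded`, `trailStrokeEnd`, and the cap of 12 are illustrative assumptions, not part of the original project.

```javascript
// Hypothetical helper (not in the original sketch): appends a point and
// keeps at most maxLength points, dropping the oldest ones first.
function pushBounded(xs, ys, x, y, maxLength) {
  xs.push(x);
  ys.push(y);
  // Trim from the front so only the newest maxLength points remain.
  while (xs.length > maxLength) {
    xs.shift();
    ys.shift();
  }
}

// Hypothetical helper: endpoint of the i-th trail stroke. Each stroke runs
// up and to the left of its anchor, and grows by 1 px per step along the
// trail, starting from baseSize.
function trailStrokeEnd(x, y, baseSize, i) {
  var size = baseSize + i;
  return [x - size, y - size];
}

// Usage example: simulate 20 mouse moves with an assumed cap of 12 points.
var xarray = [];
var yarray = [];
for (var k = 0; k < 20; k++) {
  pushBounded(xarray, yarray, k * 10, k * 5, 12);
}
console.log(xarray.length); // 12
console.log(xarray[0]);     // 80 (the 9th move; the first 8 were dropped)
```

Trimming in a `while` loop right after each push, rather than calling `shift()` inside a loop that is still iterating over the same arrays, keeps the loop indices pointing at the elements they are meant to draw.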

This is a weaving portrait of my friend Fallon. The color pixels concentrate where strokes of the two diagonal directions cross.
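Whether a given pair of strokes actually crosses can be checked with a standard segment-intersection (orientation) test. The sketch below is plain JavaScript, not part of the original project; the helper names are hypothetical, and the endpoints mirror the two `line()` calls in `draw()`: background strokes go from `(px, py)` to `(px - s, py + s)`, trail strokes from `(x, y)` to `(x - s, y - s)`.

```javascript
// Sign of the 2D cross product (b - a) x (c - a):
// > 0 or < 0 depending on which side of line a-b point c lies, 0 if collinear.
function orient(a, b, c) {
  return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0]);
}

// True if segments p1-p2 and q1-q2 properly cross.
// Touching endpoints and collinear overlaps are treated as "no crossing".
function segmentsCross(p1, p2, q1, q2) {
  var d1 = orient(q1, q2, p1);
  var d2 = orient(q1, q2, p2);
  var d3 = orient(p1, p2, q1);
  var d4 = orient(p1, p2, q2);
  return d1 * d2 < 0 && d3 * d4 < 0;
}

// Background stroke, as in draw(): (px, py) to (px - s, py + s).
function backgroundStroke(px, py, s) {
  return [[px, py], [px - s, py + s]];
}

// Trail stroke, as in draw(): (x, y) to (x - s, y - s).
function trailStroke(x, y, s) {
  return [[x, y], [x - s, y - s]];
}

// Usage example: both strokes below pass through (95, 105).
var a = backgroundStroke(100, 100, 10);
var b = trailStroke(100, 110, 10);
console.log(segmentsCross(a[0], a[1], b[0], b[1])); // true

// A trail stroke far to the right never meets the background stroke.
var c = trailStroke(300, 100, 10);
console.log(segmentsCross(a[0], a[1], c[0], c[1])); // false
```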

![[OLD FALL 2019] 15-104 • Introduction to Computing for Creative Practice](../../../../wp-content/uploads/2020/08/stop-banner.png)