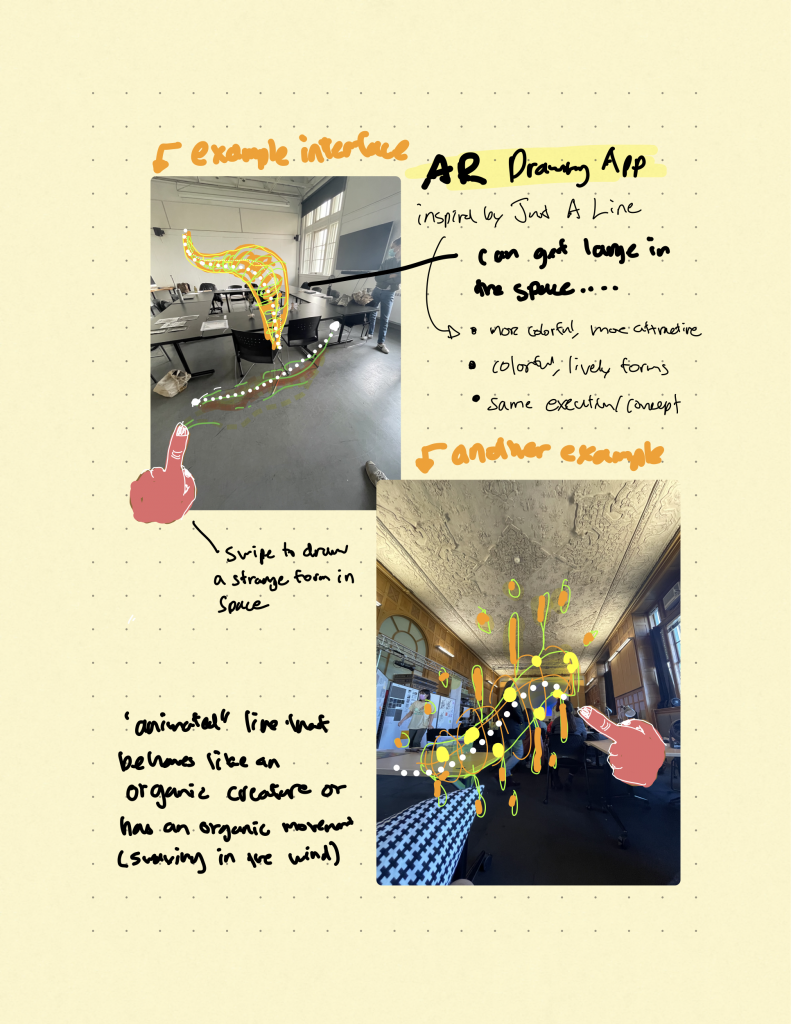

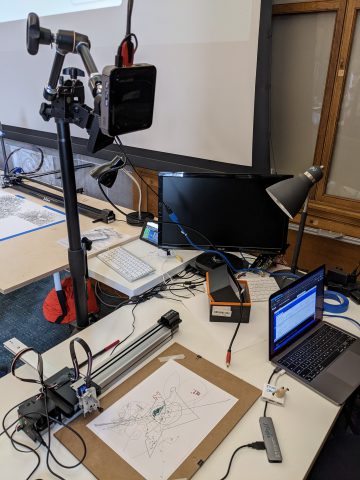

My project is a collaborative drawing session with the plotter. When you draw something in the drawing app I am currently making, the AxiDraw responds in some altered/responsive way. I plan to add as many features as I can so there are different variations of response. For example, one drawing feature might read in your drawing's coordinates and draw hairy lines that appear to sprout out of it. What is drawn onscreen is also projected onto the area where the AxiDraw is plotting, so the plotter's lines and the user's lines should look like part of the same surface and drawing. Right now I am running into a lot of issues getting the initial drawing functionality and the projection to work properly, so I am not very far along. Below, my line is plotted as a dotted line.

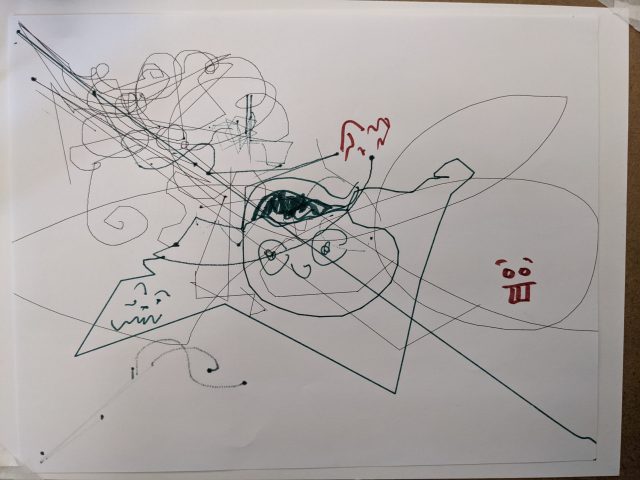
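Here is a rough illustration of the "hairy lines" idea mentioned above, as a minimal Python sketch. It assumes the drawing app can export each stroke as a list of (x, y) points. `sprout_hairs` and its parameters are hypothetical. They are not part of the app or the AxiDraw software. The sketch estimates the stroke direction at every few points and grows a short segment outward along the normal, with a random length and a slight random tilt.

```python
import math
import random


def sprout_hairs(points, spacing=4, min_len=5.0, max_len=20.0, seed=None):
    """Return short "hair" segments that grow out of a stroke.

    points: list of (x, y) tuples sampled along the user's stroke (assumed format).
    spacing: grow one hair from every `spacing`-th interior point.
    Each hair starts on the stroke, points roughly along the local normal,
    and gets a random length and a small random tilt.
    """
    rng = random.Random(seed)
    hairs = []
    for i in range(1, len(points) - 1, spacing):
        # Estimate the stroke direction from the neighbouring points.
        (x0, y0), (x1, y1) = points[i - 1], points[i + 1]
        dx, dy = x1 - x0, y1 - y0
        norm = math.hypot(dx, dy)
        if norm == 0:
            continue  # repeated points: no direction to grow from
        # Unit normal = tangent rotated by 90 degrees.
        nx, ny = -dy / norm, dx / norm
        # Pick a random side of the stroke and tilt a little so hairs look organic.
        side = rng.choice((-1, 1))
        angle = math.atan2(ny * side, nx * side) + rng.uniform(-0.4, 0.4)
        length = rng.uniform(min_len, max_len)
        sx, sy = points[i]
        end = (sx + length * math.cos(angle), sy + length * math.sin(angle))
        hairs.append(((sx, sy), end))
    return hairs
```

Each returned pair is a start point and an end point. The hairs could therefore be sent to the plotter as separate short pen-down strokes.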

Tests…

The plotter draws a dotted version of my line. You can also see a bug where the pen doesn't go up before it moves to make the first drawing.

Demo of above:

https://vimeo.com/646995264
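One way to turn a drawn line into a dotted one is to walk along the stroke by distance and alternate between pen-down dashes and pen-up gaps. The Python sketch below shows that approach. It assumes the stroke is a list of (x, y) points, and the dash and gap lengths are in whatever units the plotter path uses. `dash_polyline` is a hypothetical helper, not necessarily how the dotted line above was produced. Each dash is its own pen-down stroke, so the pen has to be raised for every move between dashes.

```python
import math


def dash_polyline(points, dash=2.0, gap=2.0):
    """Split a polyline into pen-down dashes separated by pen-up gaps.

    Walks the stroke by arc length and returns a list of dashes,
    each a list of (x, y) points (corners inside a dash are kept).
    """
    dashes = []
    current = [points[0]] if points else []
    pen_down = True
    remaining = dash  # distance left in the current dash or gap
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        seg_len = math.hypot(x1 - x0, y1 - y0)
        pos = 0.0  # distance already consumed along this segment
        while seg_len - pos > 1e-9:
            step = min(remaining, seg_len - pos)
            pos += step
            remaining -= step
            f = pos / seg_len
            p = (x0 + f * (x1 - x0), y0 + f * (y1 - y0))
            if pen_down:
                current.append(p)
            if remaining <= 1e-9:
                # Finished a dash or a gap: toggle the pen state.
                if pen_down:
                    dashes.append(current)
                    current = []
                else:
                    current = [p]  # the next dash starts where the gap ended
                pen_down = not pen_down
                remaining = dash if pen_down else gap
    if pen_down and len(current) > 1:
        dashes.append(current)  # keep a final, partial dash
    return dashes
```

For a straight 10-unit line with `dash=2.0` and `gap=2.0`, this returns three dashes covering 0 to 2, 4 to 6, and 8 to 10.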

Initial interactive tests: the plotter mirrors what I draw (plus some funny faces made by Shiva and me).
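For the plotter to mirror the screen, every canvas point needs to be converted into plotter coordinates. A simple way to do this is a uniform scale plus an offset. The Python sketch below assumes that both the canvas and the plotter use a top-left origin with y increasing downward, and that the plotter side is measured in inches. `canvas_to_plotter`, the paper size, and the margin are illustrative assumptions, not the app's actual code.

```python
def canvas_to_plotter(x, y, canvas_w, canvas_h, paper_w, paper_h, margin=0.5):
    """Map a canvas pixel (x, y) to plotter coordinates (assumed inches).

    Scales uniformly so the drawing fits inside the paper minus a margin
    without being stretched, then centers it in the leftover space.
    """
    usable_w = paper_w - 2 * margin
    usable_h = paper_h - 2 * margin
    # The smaller ratio wins, so the whole canvas fits and keeps its aspect ratio.
    scale = min(usable_w / canvas_w, usable_h / canvas_h)
    offset_x = margin + (usable_w - canvas_w * scale) / 2
    offset_y = margin + (usable_h - canvas_h * scale) / 2
    return offset_x + x * scale, offset_y + y * scale
```

For example, on an 800x600 canvas mapped to 11x8.5 in paper, the canvas center (400, 300) lands at (5.5, 4.25), which is the middle of the page. Using `min` for the scale keeps circles circular. The extra space along one axis is split evenly on both sides.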

Setup:

The goal is to project my screen onto the area of the paper so my drawing is overlaid with the plotter’s drawing. The projection mapping didn’t work in time for this crit 🙂

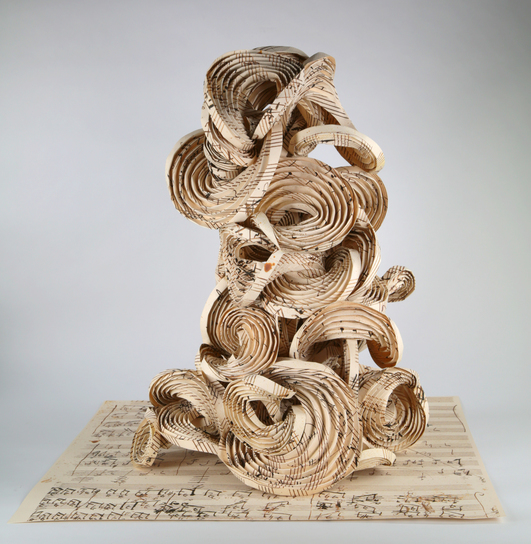
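Projection mapping of this kind is often done as a "corner pin." This technique uses a planar homography (a perspective transform) to warp the rectangular canvas so that its four corners land on the four corners of the paper as seen by the projector. Below is a minimal pure-Python sketch that solves for the 3x3 matrix from four point pairs. The corner values and function names are hypothetical, and this is not the setup used here. Libraries such as OpenCV offer the same computation through `cv2.getPerspectiveTransform`.

```python
def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def homography(src, dst):
    """3x3 matrix H (with H[2][2] = 1) mapping 4 src points onto 4 dst points."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # From u = (h0 x + h1 y + h2) / (h6 x + h7 y + 1), and likewise for v.
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b) + [1.0]
    return [h[0:3], h[3:6], h[6:9]]


def apply_homography(H, x, y):
    """Map (x, y) through H, including the perspective divide."""
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)


# Hypothetical example: a 1920x1080 canvas pinned to where the paper's
# corners appear in the projector image (values made up for illustration).
canvas_corners = [(0, 0), (1920, 0), (1920, 1080), (0, 1080)]
paper_in_projector = [(100, 50), (1800, 80), (1750, 1000), (150, 1040)]
H = homography(canvas_corners, paper_in_projector)
```

Once `H` is known, each canvas point would be passed through `apply_homography` before being drawn on the projector output. The projected line would then sit on the paper where the plotter draws.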

Sougwen Chung has been a big inspiration for my work.