For my final project, I’m programming a Universal Robots robotic arm to inscribe and mark polymer clay surfaces. The project is meant to be a two-pronged technical exploration: of the material possibilities of polymer clay, and of the robotic arm's ability to use tools and manipulate physical media.

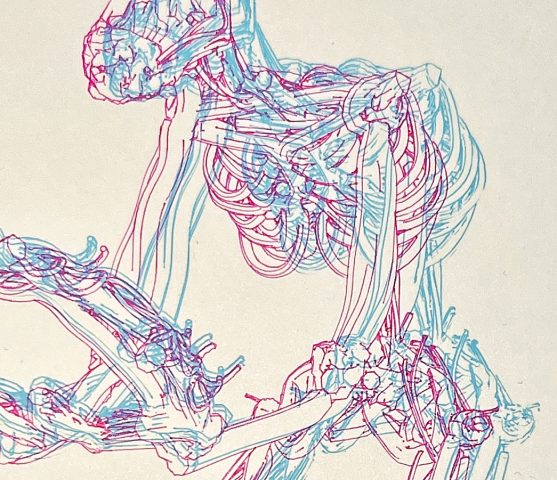

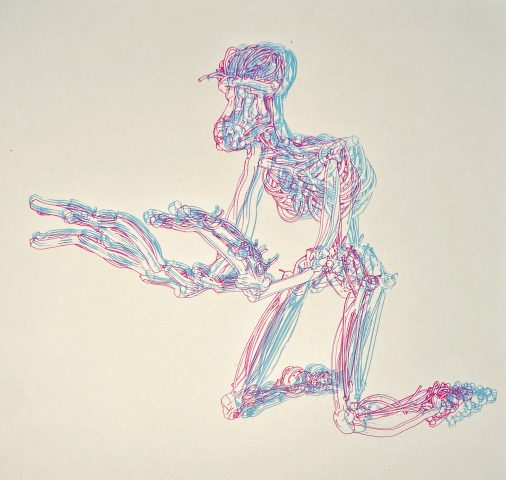

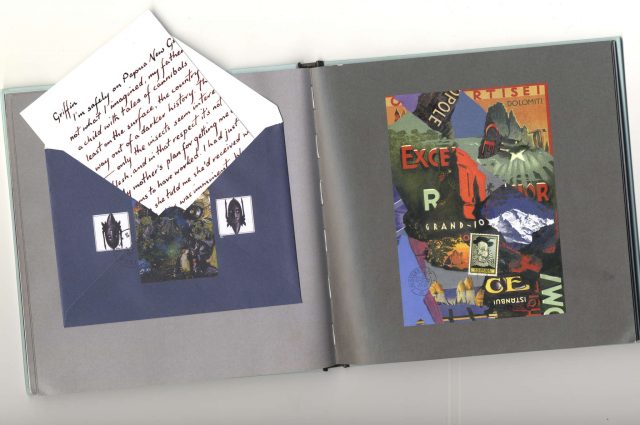

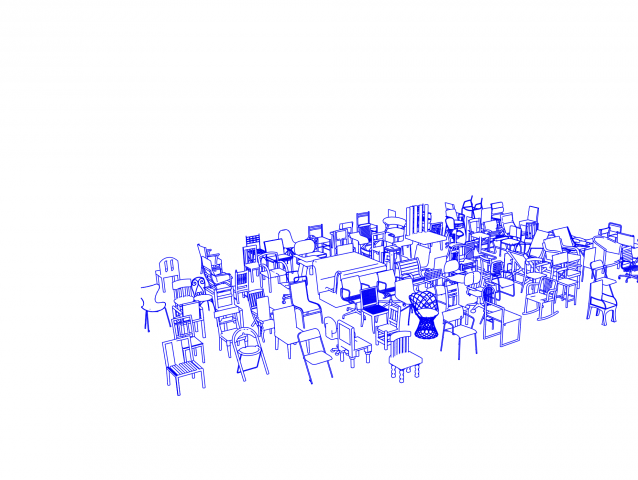

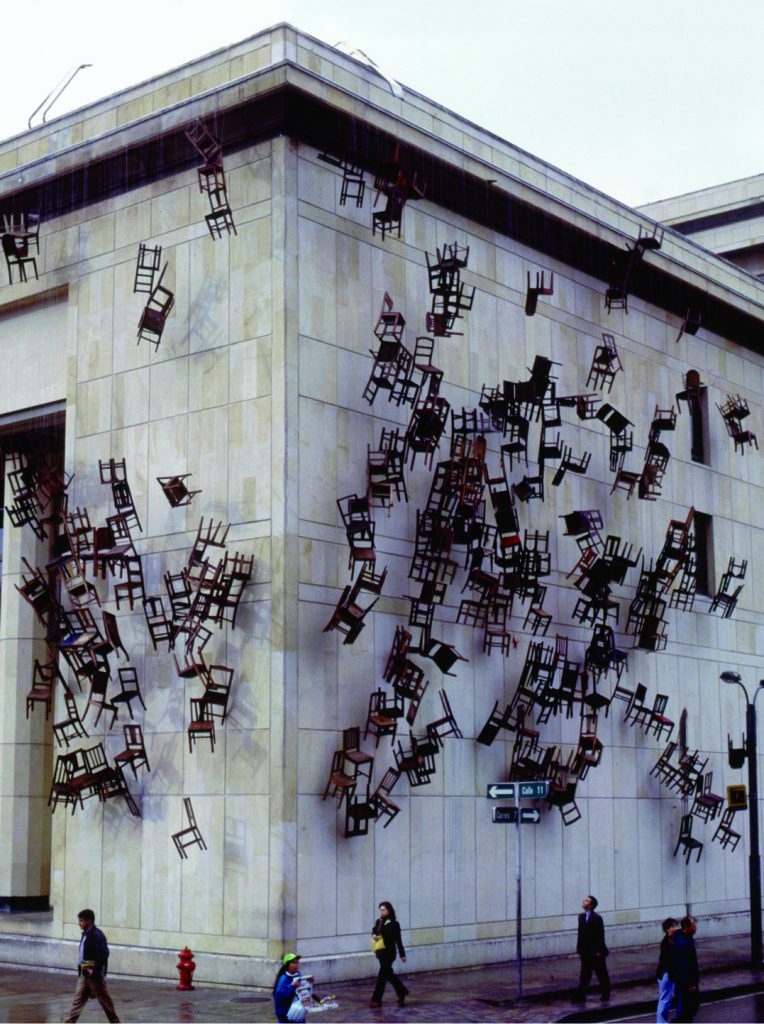

The inspiration for this project is honestly more rhizomatic than drawn from a specific collection of reference works. That said, I was at least partially inspired by the robot-human collaborations of artist Sougwen Chung.

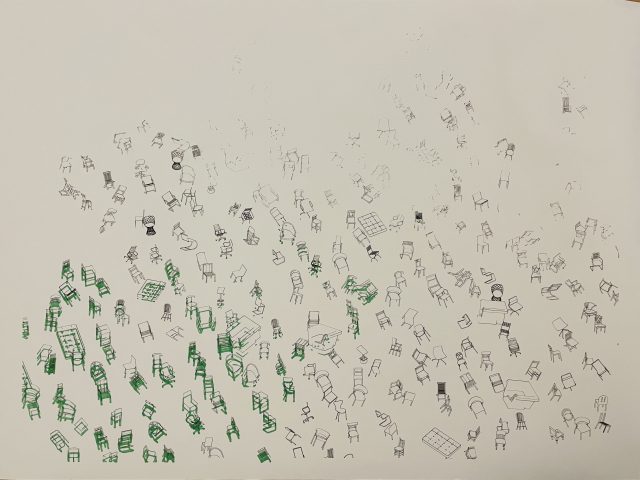

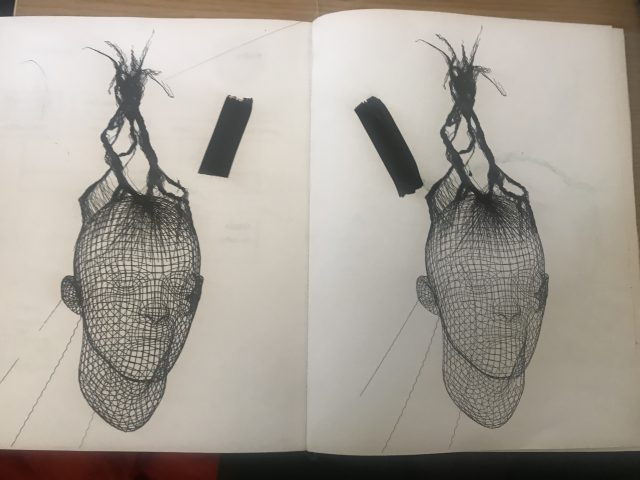

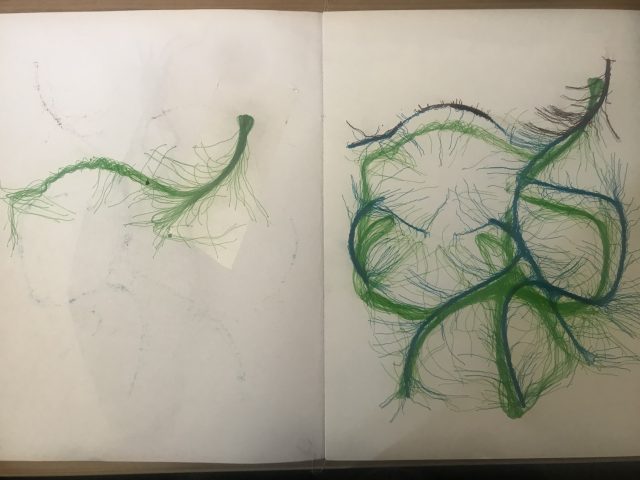

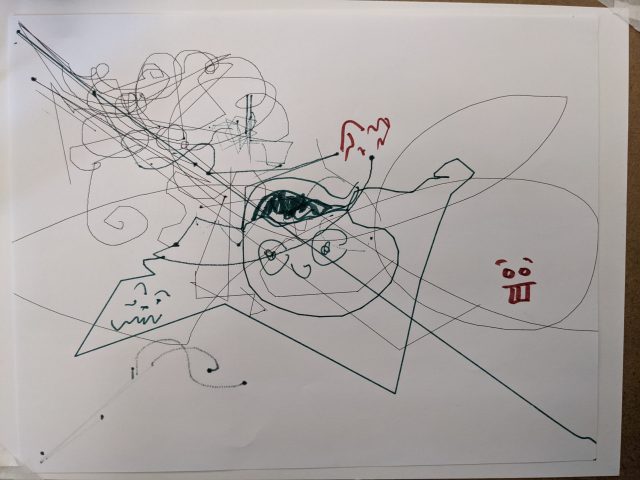

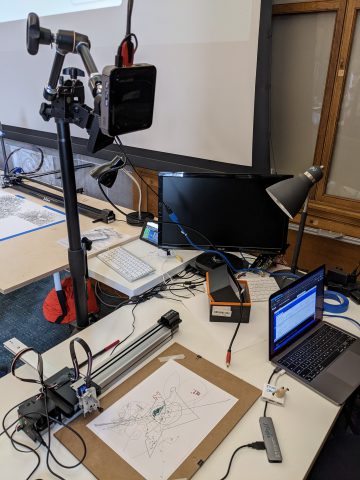

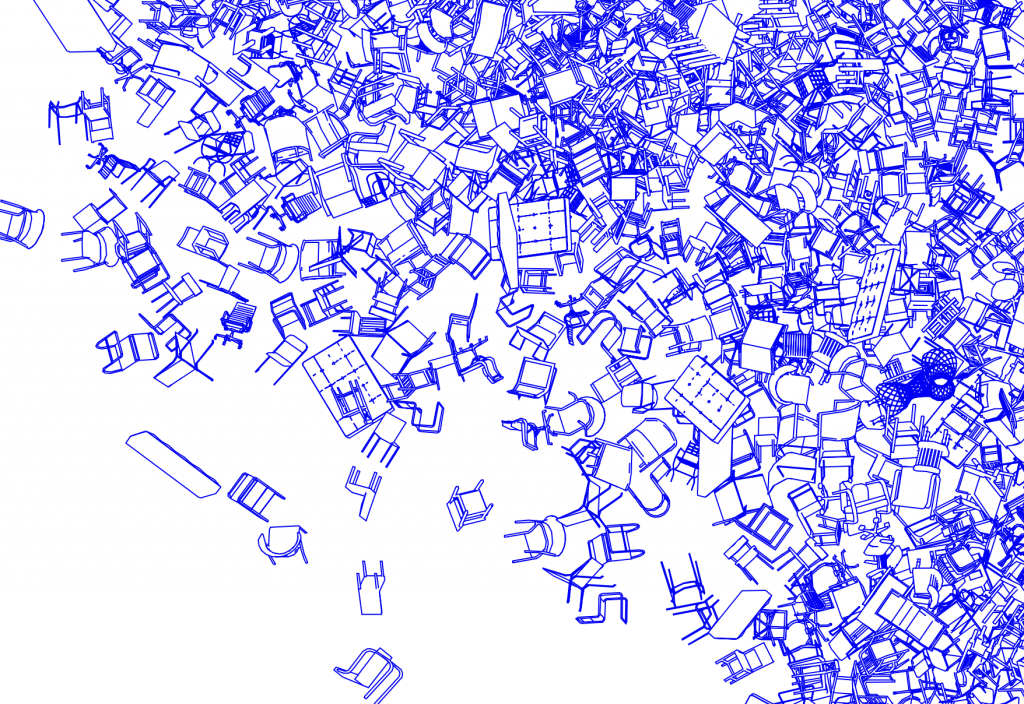

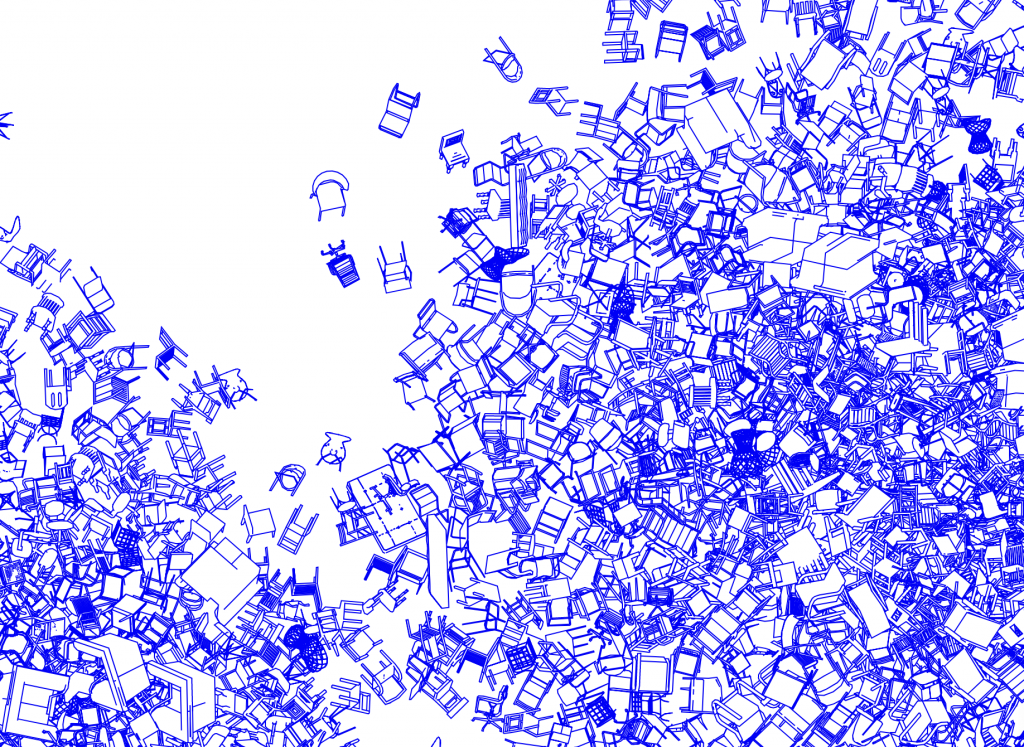

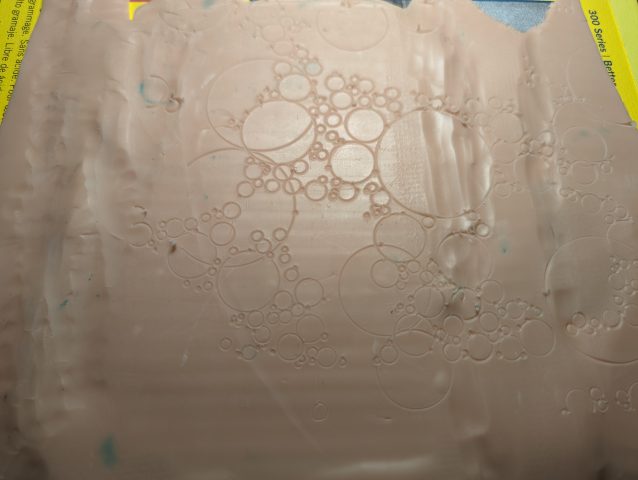

Throughout the semester, I’ve done most of my work with pen and plotter. The plotter creates an exciting point of interaction where computational principles and the physical properties of media try to coexist and even contradict each other, but I wanted to explore a space with different effects at play. I decided it was time to try a new medium, so I moved into paint and polymer clay. Initially I tried simply plotting on clay:

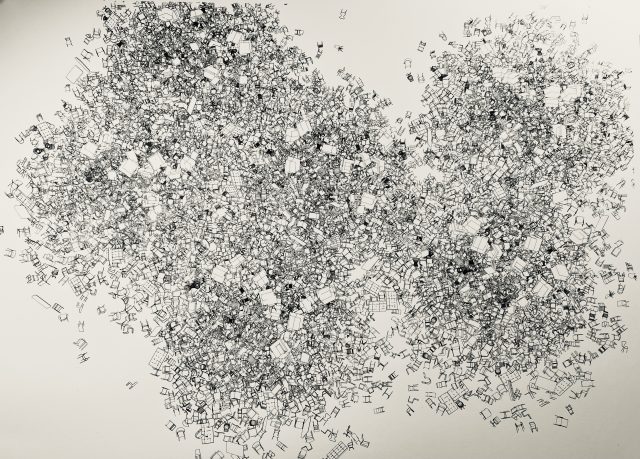

Above – using the pen plotter and a needle tool to poke points into clay

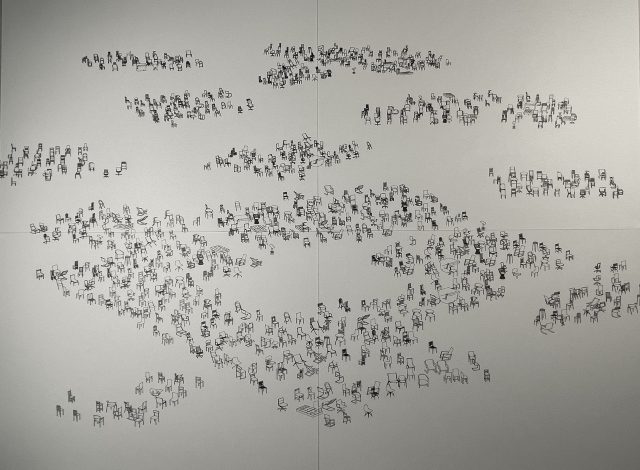

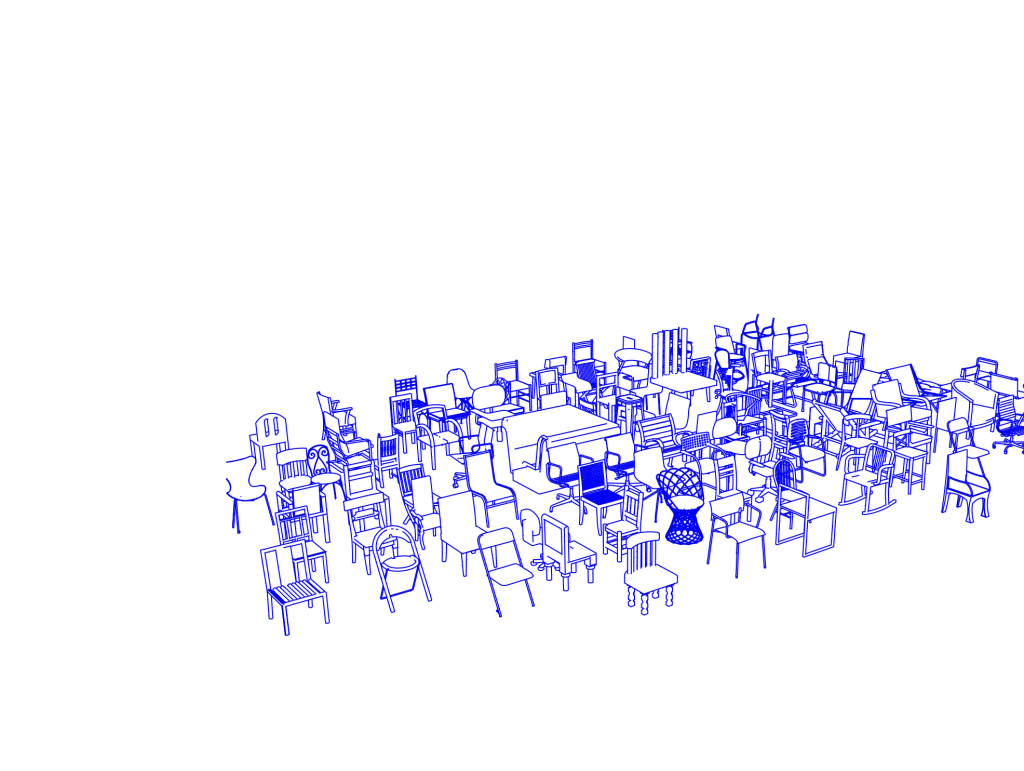

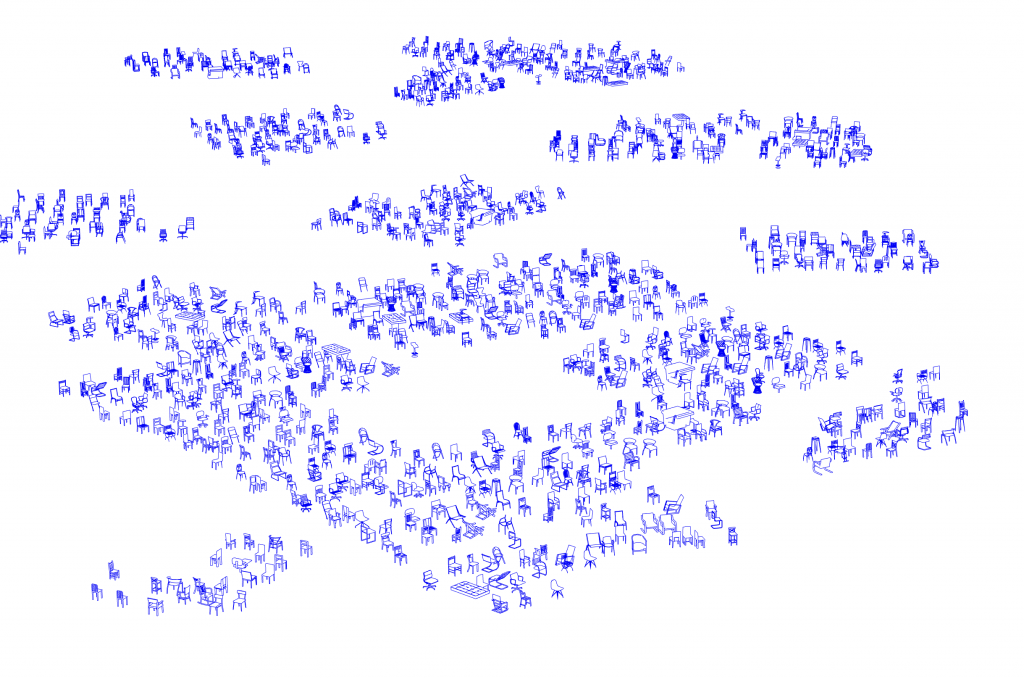

Above – misregistered plot


After a few experiments, it seemed that the plotter just didn’t have the power to do anything substantial to the clay: the tool would often snag, and the motor couldn’t always push through the clay, which threw off registration.

I realized that the robotic arm in the Frank-Ratchye Studio could extend what I could do computationally and give me a continuous third dimension. The arm also seemed to promise more pushing strength than the plotter. From there, I realized that polymer clay pairs nicely with that additional dimension, so I stuck with the robot.

I’ve worked with polymer clay for most of my life, though I had taken a long hiatus, so this feels like a natural direction for my work to take and an exciting opportunity to explore the medium with a fresh set of eyes.

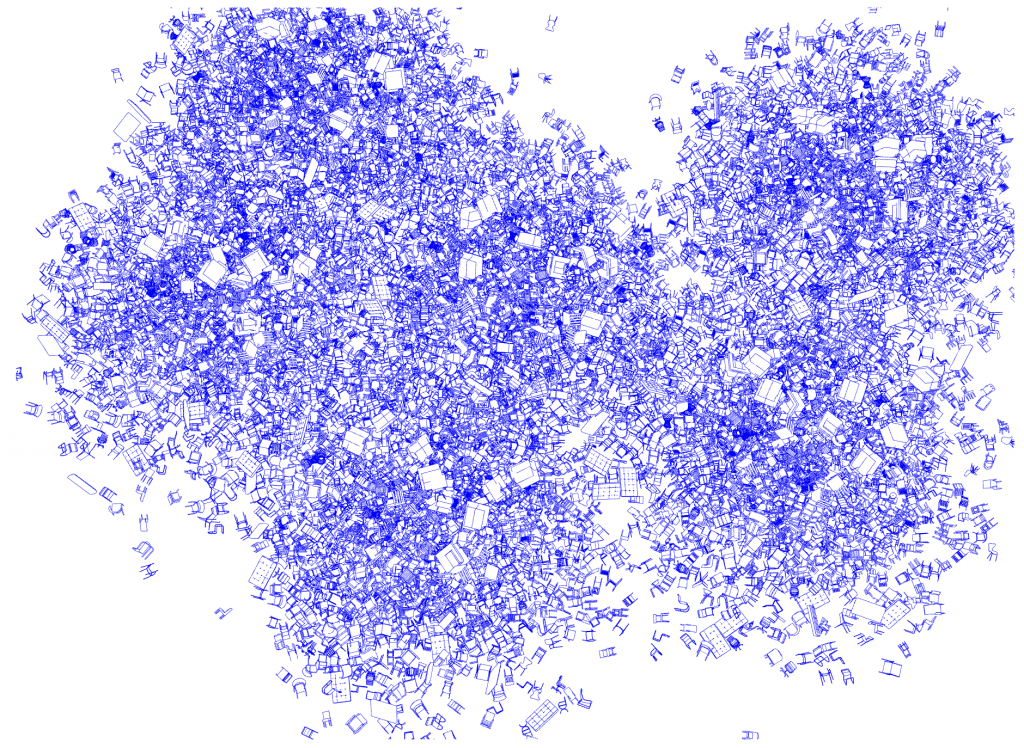

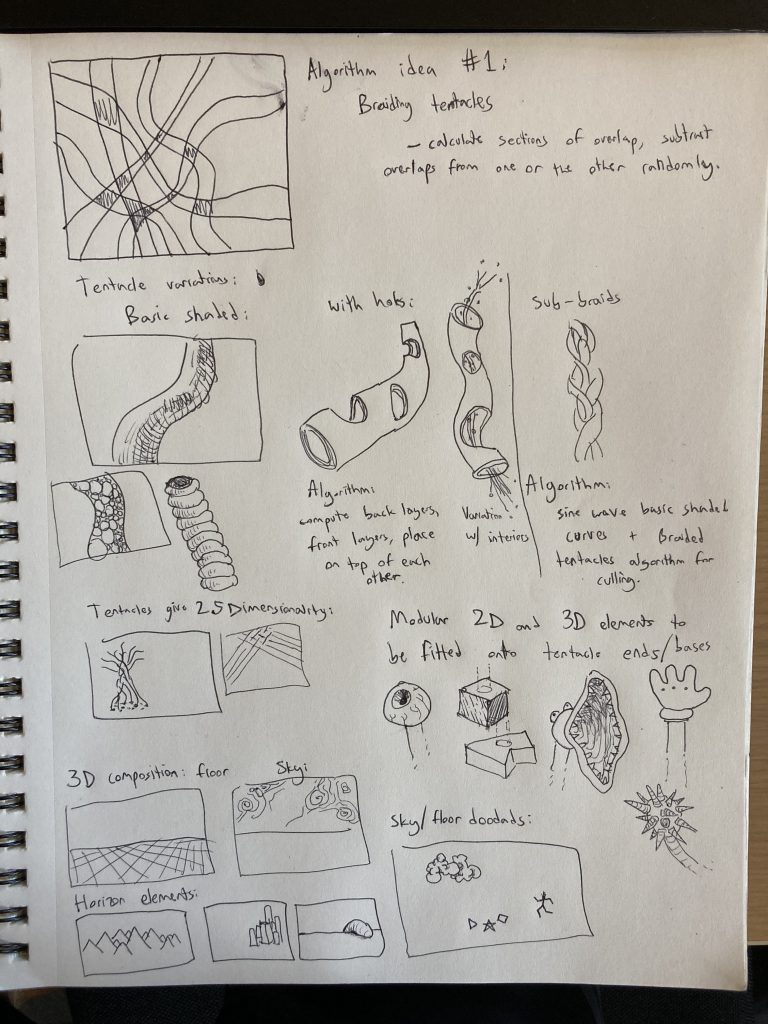

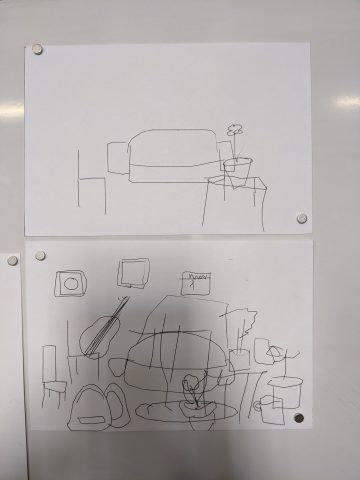

I started exploring for this project by digging into clay by hand in different ways. My intent was to test what sorts of marks my tools could make, in the hope of arriving at concrete ideas for the final project. This produced the following:

From here, all I had left to do was learn how to use the robot. After getting comfortable with controlling the robot manually, I moved on to automating it. I received extensive help from my classmate Dinkolas in understanding how to program the robot and am indebted to him for his time and effort.

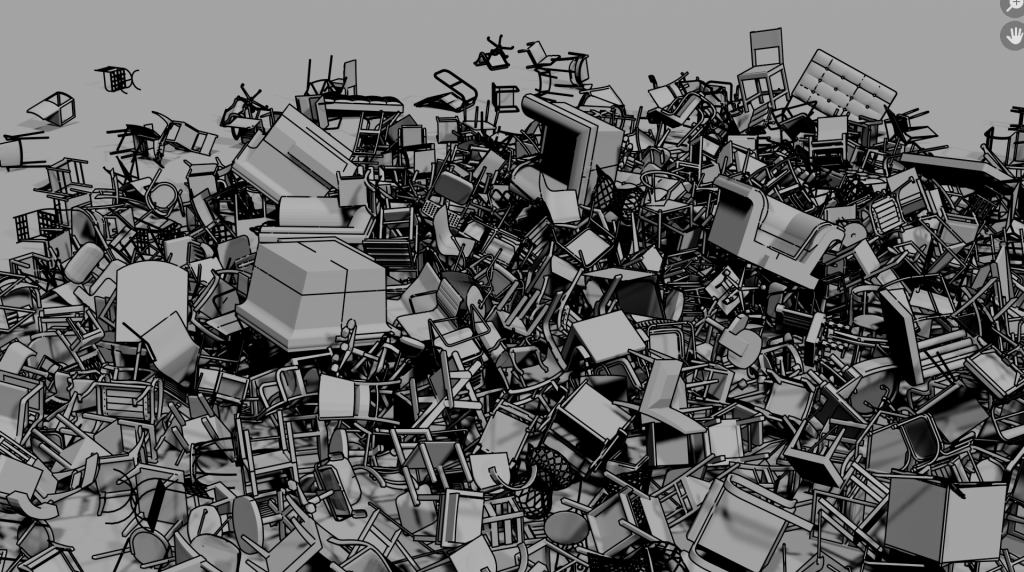

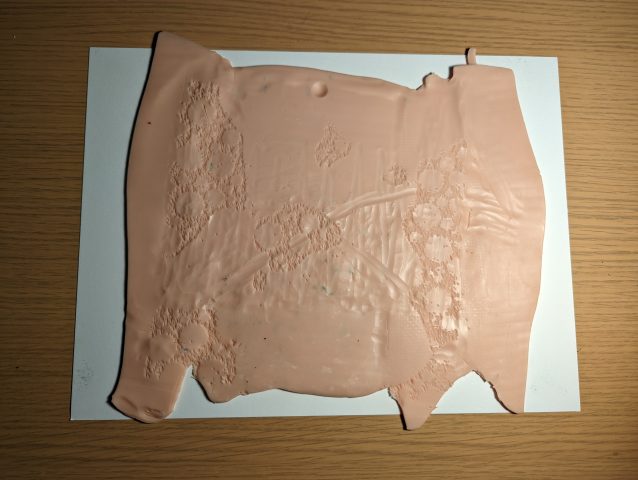

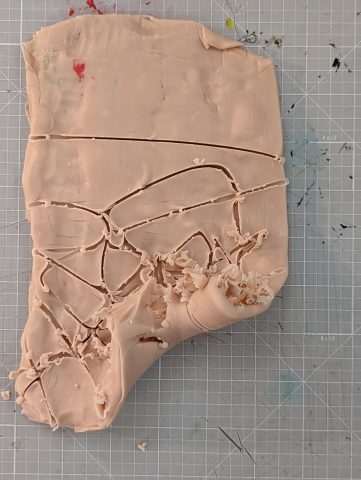

Below are the preliminary results of simply having the robot tear across a block of clay along random paths:

The robot managed to fold the clay up during the inscription process. More careful measurement will be needed to keep the clay flat, or perhaps this folding deserves more time and thought as a direction of its own.
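Random paths like these can be generated in many ways. As a hedged sketch (not the code actually used in this project), the Python below builds a bounded random walk over the block and emits a URScript program of `movel` commands, using URScript's `p[x, y, z, rx, ry, rz]` pose syntax (meters and a rotation vector in radians, in the robot's base frame). The helper names `random_path`, `pose`, `to_urscript`, and `inscribe`, as well as the block size, origin, cut depth, speeds, and the straight-down tool orientation, are all hypothetical placeholders. Note that a constant cut height assumes the clay surface is flat and level, which is exactly the measurement problem mentioned above.

```python
import math
import random

# Hypothetical placeholder values (meters), not measurements from this project.
BLOCK_X = 0.10               # block width along the base X axis
BLOCK_Y = 0.10               # block depth along the base Y axis
ORIGIN = (0.30, -0.05)       # assumed base-frame position of the block's corner
SURFACE_Z = 0.05             # assumed height of a flat, level clay surface
CUT_DEPTH = 0.002            # how far below the surface the tool travels
SAFE_Z = SURFACE_Z + 0.02    # clearance height for approach and retract
TOOL_ORIENT = (0.0, math.pi, 0.0)  # assumed rotation vector pointing the tool down


def random_path(n_points, max_step, seed=None):
    """Bounded random walk of (x, y) offsets, clamped to stay on the block."""
    rng = random.Random(seed)
    x, y = rng.uniform(0, BLOCK_X), rng.uniform(0, BLOCK_Y)
    points = [(x, y)]
    for _ in range(n_points - 1):
        x = min(max(x + rng.uniform(-max_step, max_step), 0.0), BLOCK_X)
        y = min(max(y + rng.uniform(-max_step, max_step), 0.0), BLOCK_Y)
        points.append((x, y))
    return points


def pose(x, y, z):
    """Format a block-relative point as a URScript base-frame pose literal."""
    rx, ry, rz = TOOL_ORIENT
    return (f"p[{ORIGIN[0] + x:.4f}, {ORIGIN[1] + y:.4f}, {z:.4f}, "
            f"{rx:.4f}, {ry:.4f}, {rz:.4f}]")


def to_urscript(points, a=0.5, v=0.05):
    """Approach above the first point, cut along the path, then retract."""
    lines = ["def inscribe():"]
    x0, y0 = points[0]
    lines.append(f"  movel({pose(x0, y0, SAFE_Z)}, a={a}, v={v})")
    for x, y in points:
        lines.append(f"  movel({pose(x, y, SURFACE_Z - CUT_DEPTH)}, a={a}, v={v})")
    xn, yn = points[-1]
    lines.append(f"  movel({pose(xn, yn, SAFE_Z)}, a={a}, v={v})")
    lines.append("end")
    return "\n".join(lines)


if __name__ == "__main__":
    print(to_urscript(random_path(20, 0.01, seed=1)))
```

Clamping each step keeps the tool over the block rather than rejecting moves, and the low placeholder speed (`v=0.05` m/s) is a cautious starting point for dragging a tool through soft material; any real values would need to be checked on the arm itself.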

Video pending: I need to remove the audio from the footage I have.