Author: agusman@andrew.cmu.edu

agusman-FinalProjectProposal

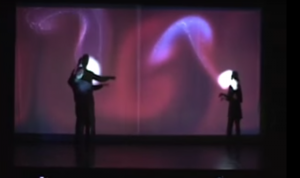

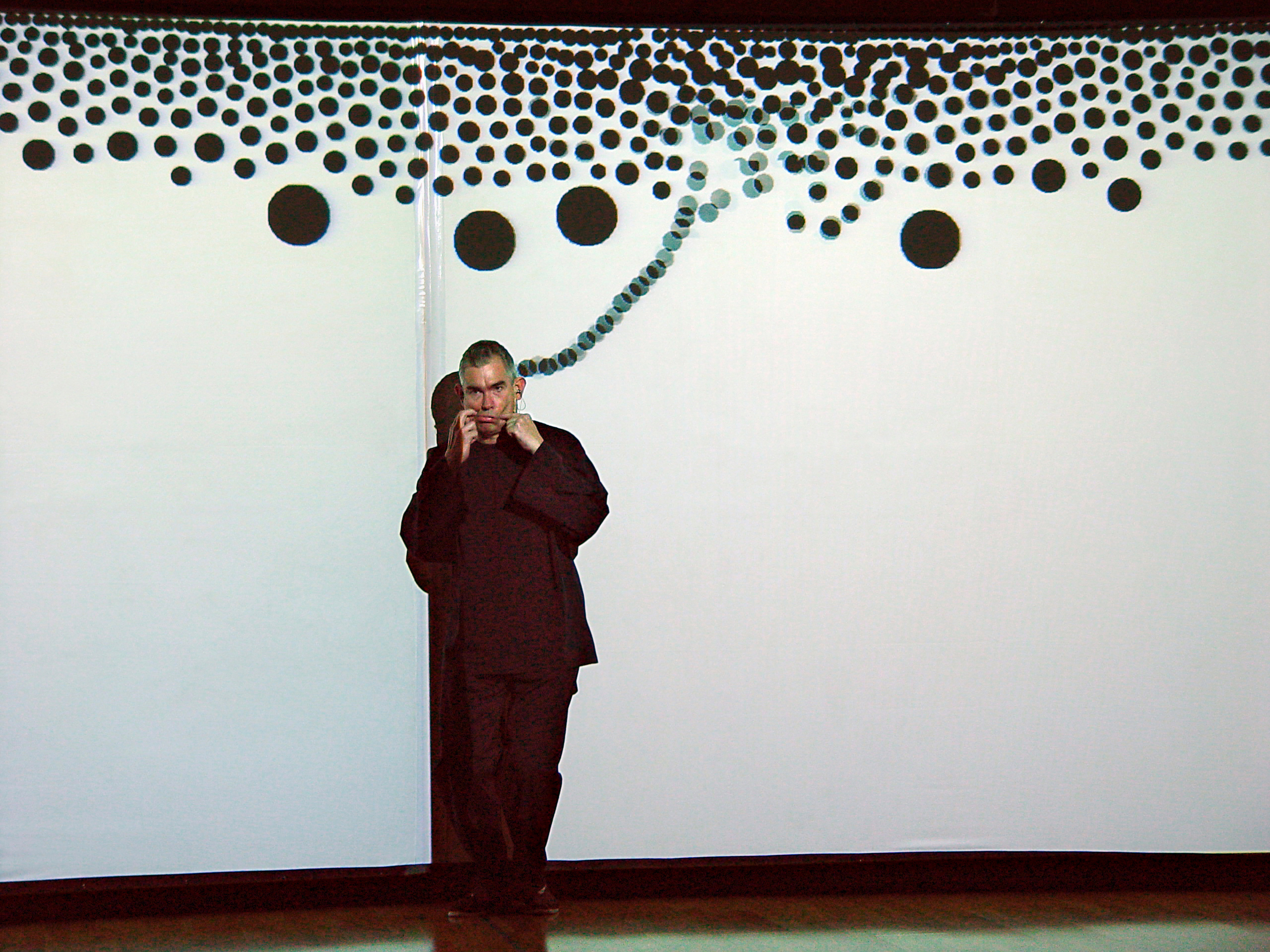

In my artistic practice, I’m excited by the concept of discovery through the visualization of the self, by both visual and sonic means. I was very inspired by part 4 of Golan Levin’s “Messa di Voce,” where moving bodies detected by computer vision leave dynamic computational “auras” that disperse across the screen.

“Messa Di Voce” by Golan Levin

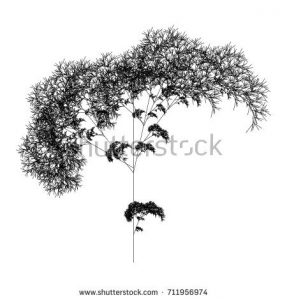

For my final project, I propose a program that manipulates a particle system with features of the voice. I am thinking about creating a landscape of trees, flowers and animals generated by singing to it. Trees and flowers could be generated using an L-system growth pattern that responds to the volume or frequency of the input sound.
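As a rough illustration of how an L-system could respond to the voice, the sketch below rewrites a string of drawing symbols and lets a loudness value choose how many generations to grow. The rule set, the function names, and the assumption that volume arrives as a number between 0 and 1 (for example from an amplitude reading in p5.sound) are all hypothetical; none of them come from the proposal itself.

```javascript
// Hypothetical rule set for a simple branching plant (an assumption,
// not something specified in the proposal).
const RULES = { F: "F[+F]F[-F]" };

// Rewrite every symbol of `axiom` once per generation using `rules`.
// Symbols without a rule are copied through unchanged.
function expandLSystem(axiom, rules, generations) {
  let current = axiom;
  for (let g = 0; g < generations; g++) {
    let next = "";
    for (const symbol of current) {
      next += rules[symbol] !== undefined ? rules[symbol] : symbol;
    }
    current = next;
  }
  return current;
}

// Map a normalized volume (assumed to be 0..1) to a whole number of
// generations, so a louder voice grows a more detailed plant.
function generationsForVolume(volume, maxGenerations) {
  const clamped = Math.min(1, Math.max(0, volume));
  return Math.round(clamped * maxGenerations);
}
```

In a turtle-graphics renderer, `F` would typically mean "draw forward", `+` and `-` "turn", and `[` and `]` "save and restore the turtle's position", so the expanded string can be walked one symbol at a time to draw the plant.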

Or, it could be a bit more abstract and simply contain particles that are displaced and moved based on frequency and volume of the voice. That system could act more like a flock of birds, using encoded Boid behaviors.
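To make the flocking idea a little more concrete, here is a minimal sketch of one simulation step. It covers only two of the three classic Boids rules (cohesion and separation) and leaves out alignment for brevity. The constants, the `stepFlock` name, and the idea of letting a normalized volume raise the speed limit are assumptions for illustration, not a worked-out design.

```javascript
// One simulation step for a small flock. Each boid is {x, y, vx, vy}.
// `volume` is an assumed loudness reading already normalized to 0..1.
function stepFlock(boids, volume) {
  const cohesion = 0.01;            // pull toward the flock's center
  const separation = 0.05;          // push away from close neighbors
  const minDistance = 20;           // neighbors nearer than this repel
  const maxSpeed = 1 + 4 * volume;  // a louder voice allows a faster flock

  // Average position of the whole flock.
  let cx = 0;
  let cy = 0;
  for (const b of boids) {
    cx += b.x;
    cy += b.y;
  }
  cx /= boids.length;
  cy /= boids.length;

  return boids.map((b) => {
    // Cohesion: steer toward the average position.
    let vx = b.vx + (cx - b.x) * cohesion;
    let vy = b.vy + (cy - b.y) * cohesion;

    // Separation: steer away from any neighbor that is too close.
    for (const other of boids) {
      const dx = b.x - other.x;
      const dy = b.y - other.y;
      const d = Math.hypot(dx, dy);
      if (other !== b && d > 0 && d < minDistance) {
        vx += (dx / d) * separation;
        vy += (dy / d) * separation;
      }
    }

    // Limit the speed so the flock stays controllable.
    const speed = Math.hypot(vx, vy);
    if (speed > maxSpeed) {
      vx = (vx / speed) * maxSpeed;
      vy = (vy / speed) * maxSpeed;
    }
    return { x: b.x + vx, y: b.y + vy, vx: vx, vy: vy };
  });
}
```

In a p5.js sketch this could be called once per `draw()` frame, with frequency mapped to a different parameter such as `minDistance`.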

agusman-Project11-TurtleGraphicsFreestyle

//Anna Gusman

//agusman@andrew.cmu.edu

//Section E

//Turtle Graphics

function turtleLeft(d) {

this.angle -= d;

}

function turtleRight(d) {

this.angle += d;

}

function turtleForward(p) {

var rad = radians(this.angle);

var newx = this.x + cos(rad) * p;

var newy = this.y + sin(rad) * p;

this.goto(newx, newy);

}

function turtleBack(p) {

this.forward(-p);

}

function turtlePenDown() {

this.penIsDown = true;

}

function turtlePenUp() {

this.penIsDown = false;

}

function turtleGoTo(x, y) {

if (this.penIsDown) {

stroke(this.color);

strokeWeight(this.weight);

line(this.x, this.y, x, y);

}

this.x = x;

this.y = y;

}

function turtleDistTo(x, y) {

return sqrt(sq(this.x - x) + sq(this.y - y));

}

function turtleAngleTo(x, y) {

var absAngle = degrees(atan2(y - this.y, x - this.x));

var angle = ((absAngle - this.angle) + 360) % 360.0;

return angle;

}

function turtleTurnToward(x, y, d) {

var angle = this.angleTo(x, y);

if (angle < 180) {

this.angle += d;

} else {

this.angle -= d;

}

}

function turtleSetColor(c) {

this.color = c;

}

function turtleSetWeight(w) {

this.weight = w;

}

function turtleFace(angle) {

this.angle = angle;

}

function makeTurtle(tx, ty) {

var turtle = {x: tx, y: ty,

angle: 0.0,

penIsDown: true,

color: color(128),

weight: 1,

left: turtleLeft, right: turtleRight,

forward: turtleForward, back: turtleBack,

penDown: turtlePenDown, penUp: turtlePenUp,

goto: turtleGoTo, angleto: turtleAngleTo,

turnToward: turtleTurnToward,

distanceTo: turtleDistTo, angleTo: turtleAngleTo,

setColor: turtleSetColor, setWeight: turtleSetWeight,

face: turtleFace};

return turtle;

}

var t1;

function setup() {

createCanvas(400,400);

background(0);

t1 = makeTurtle(200, 200);

t1.setColor(255);

t1.setWeight(1);

t1.penDown();

var sideLength = 20;

for(var i = 0; i < 100; i++) {

t1.penDown();

sideLength = sideLength - 20;

t1.right(2);

makeSquare(sideLength);

}

}

function makeSquare(sideLength) {

//draw the four sides of the square

t1.forward(sideLength);

t1.right(90);

t1.forward(sideLength);

t1.right(90);

t1.forward(sideLength);

t1.right(90);

t1.forward(sideLength);

t1.right(90);

ellipse(t1.x,t1.y,10,10);

//lift the pen and reposition the turtle without drawing

t1.penUp();

t1.right(90);

t1.forward(sideLength);

t1.right(90);

t1.forward(sideLength);

ellipse(t1.x,t1.y,10,10);

}

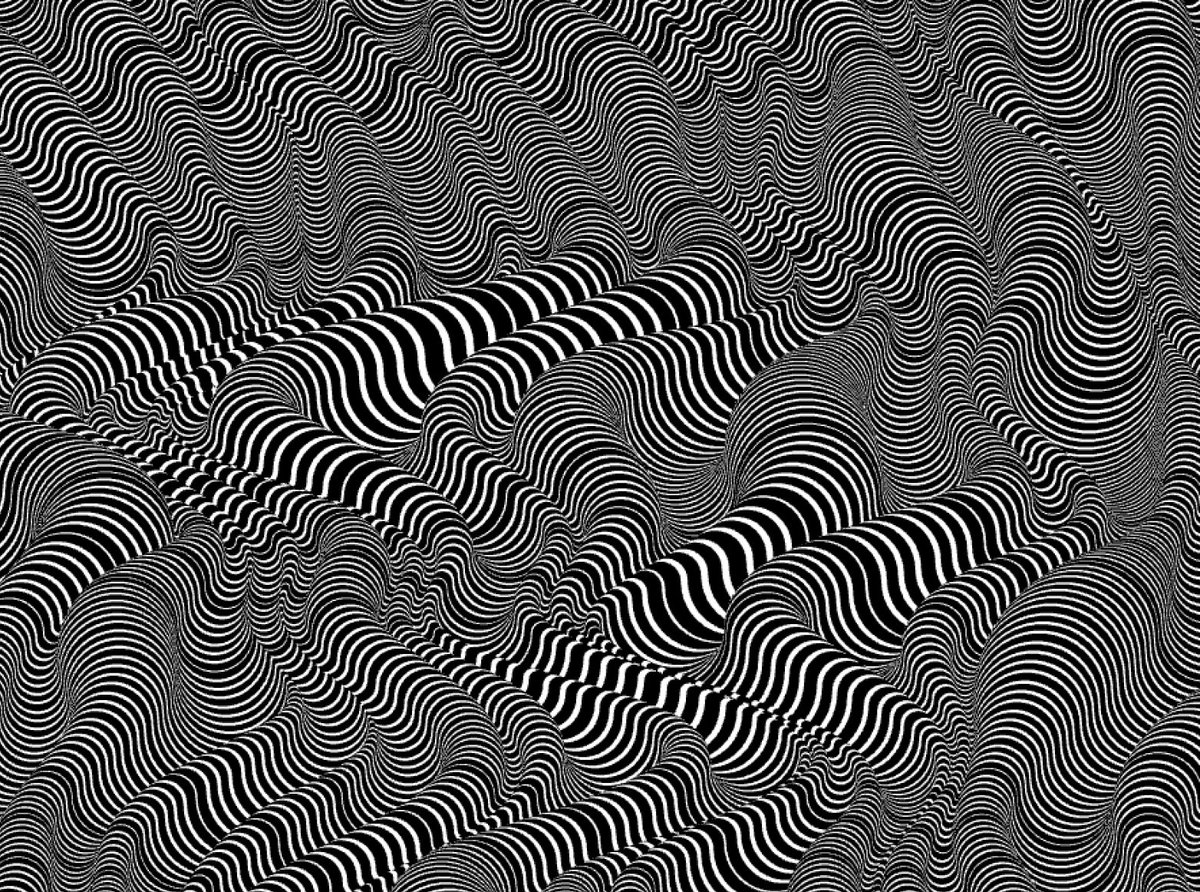

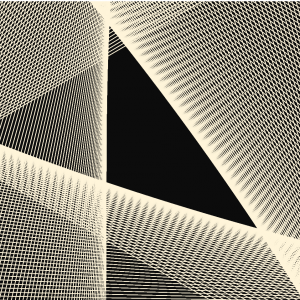

Inspired by my struggles with the lab this week, I wanted to dive a bit further into the mechanism of iterated rotation. Here’s a simple transformation I thought looked really lovely.

agusman-LookingOutwards-“Sound Objects” by Zimoun

Zimoun Sound Objects Compilation Video

This series of “sound objects,” created by installation artist Zimoun, consists of architectural soundscapes constructed from simple, functional components. These components have ranged from ping pong balls, chains, cardboard boxes and springs to slats of wood, usually “activated” or displaced by an array of simple servo motors. While some of the more elaborate collaborative pieces incorporate plotters and hot plates, the majority of these sound objects call attention not to a complex build but to the sonically resonant qualities of the commonplace materials used, especially in mass quantities. The architectural systems constructed from these individual sound objects articulate the tension between the orderly and the chaotic (or the chaotic within the orderly). Rather than producing a distinct sound, these “sound objects” emit more of an acoustic hum that feels industrial, yet elegant.

I am extremely interested in the fabrication and facilitated performance of large-scale patterns: how intimate sonic and visual experiences can unfold from an environment rather than an encapsulated piece of media. I also love how, through analog materials and motion, the “computational” aspects of this piece are downplayed in a very elegant way. To me, this really emphasizes what computational art is actually about: not depictions of technology but of ideas. That being said, large-scale installations with functionality like these “sound objects” could not be accomplished easily without computation. The vastness of these patterns plays to the computer’s ability to process large quantities of information and execute many outputs simultaneously.

agusman-LookingOutwards-10

Reverb

Reverb Video Documentation

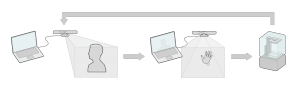

REVERB is a project by new media artist and robot tamer Madeline Gannon that bridges the virtual and physical contexts of fabrication and wearable design.

This 3D modeling environment allows the artist or designer to guide the movement of a 3D asset across the curvature of the body, leaving a digital trace that is then 3D printed and transformed into wearable jewelry. The gestures of the artist’s hand serve as the guiding path for the 3D asset as it leaves its trace. Madeline refers to this method as a sort of digital chronomorphology, an offshoot of chronophotography, which is a composite recording of an object’s movement.

The pipeline of Madeline’s chronomorphological process begins with a depth sensor (e.g. Kinect) that records the depth data of the person who will be fitted for the wearable model. A point cloud is then generated and imported into a 3D workspace. Once positioned in there, the same depth sensor will detect the motion of the artist’s hands as they move around, forming the path on which a small 3D asset moves. Finally, the generated chronomorphological 3D model is printed and worn by the person.

Reverb is one of Madeline’s best-known pieces and has been widely covered by technology magazines and conferences in America. Other successful works include Mimus, a whimsical robot with a childlike wonder and curiosity about the humans around it, as well as Tactum, another exploration in 3D printed wearables that can be custom fit and distributed online. While her educational background is predominantly in architecture, she has studied computational design, art and robotics in more recent years. She is currently attending Carnegie Mellon and will be graduating in May with a PhD in computational design.

agusman-Project09-Portrait

//Anna Gusman

//agusman@andrew.cmu.edu

//Section E

//

//Assignment 06 B

var underlyingImage;

var vals = [0.001, 0.001, 0.001, 0.001, 0.001, 0.001, 0.001]; //declare array of pie slice percentages

var nVals = vals.length; //length of array values

var start = 0; //first set the starting point of the first arc = to 0

var end = start + vals[0] * 360;

//Set the ending point of arc relative to its starting point

//the arc + the percentage value (decimal * 360)

function preload() {

var myImageURL = "https://i.imgur.com/wU90PUnl.jpg";

underlyingImage = loadImage(myImageURL);

}

function setup() {

createCanvas(400, 400);

background(0);

angleMode(DEGREES);

underlyingImage.loadPixels();

frameRate(5000);

}

function draw() {

for (var i = 0; i < nVals; i++) {

start = end;

end = start + vals[i] * 360; //draw a new arc from the "end" of the preceding

//arc for every i

noStroke();

var px = random(width);

var py = random(height);

var ix = constrain(floor(px), 0, width-1);

var iy = constrain(floor(py), 0, height-1);

var theColorAtLocationXY = underlyingImage.get(ix, iy);

fill(theColorAtLocationXY);

arc(px, py, 30, 100, start, end);

}

}

I had a ton of fun with this project, even though the outcome was not as expected. I was inspired by a few pieces I’d seen by Sergio Albiac that sample pixel colors across the circumference of randomly distributed circles.
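For anyone curious what sampling colors around a circle could look like in code, here is a small sketch of my reading of the idea. It is not Albiac's actual method, and the `circleSamplePoints` helper is a hypothetical name; it only computes where on the image to look, leaving the color lookup to the sketch.

```javascript
// Return `count` integer pixel positions spaced evenly around a circle,
// clamped so every position lies inside a width x height image.
function circleSamplePoints(cx, cy, radius, count, width, height) {
  const points = [];
  for (let i = 0; i < count; i++) {
    const angle = (i / count) * 2 * Math.PI;
    const x = Math.round(cx + radius * Math.cos(angle));
    const y = Math.round(cy + radius * Math.sin(angle));
    points.push({
      x: Math.min(width - 1, Math.max(0, x)),
      y: Math.min(height - 1, Math.max(0, y)),
    });
  }
  return points;
}
```

In the portrait sketch, each returned point could be passed to `underlyingImage.get(p.x, p.y)` to pick the fill color for one slice of the circle.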

I decided to play with the rotational theme myself. Here are some examples of my process.

Photograph of my twin sister, taken by me:

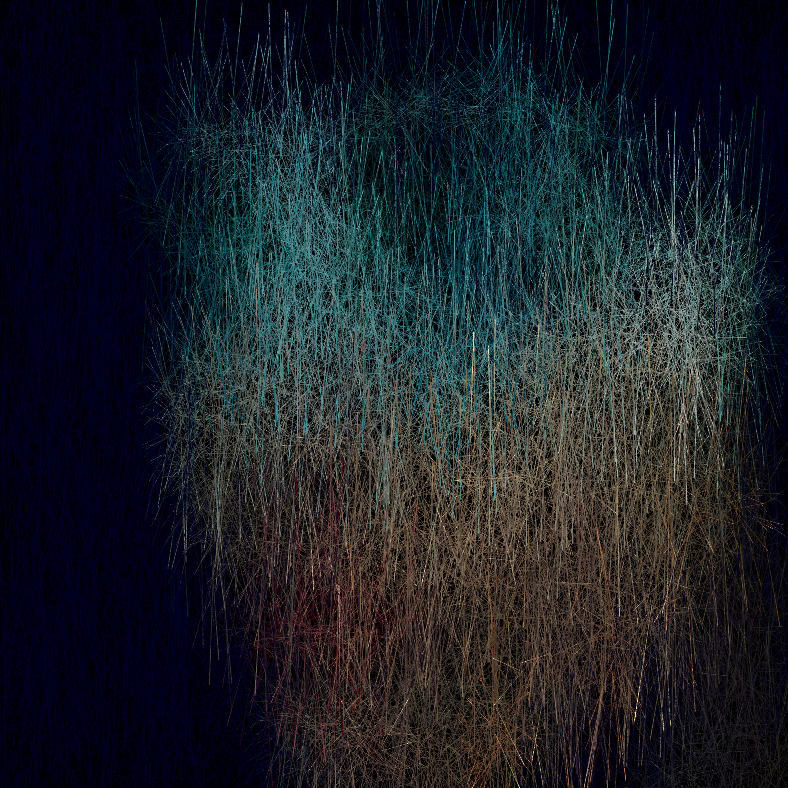

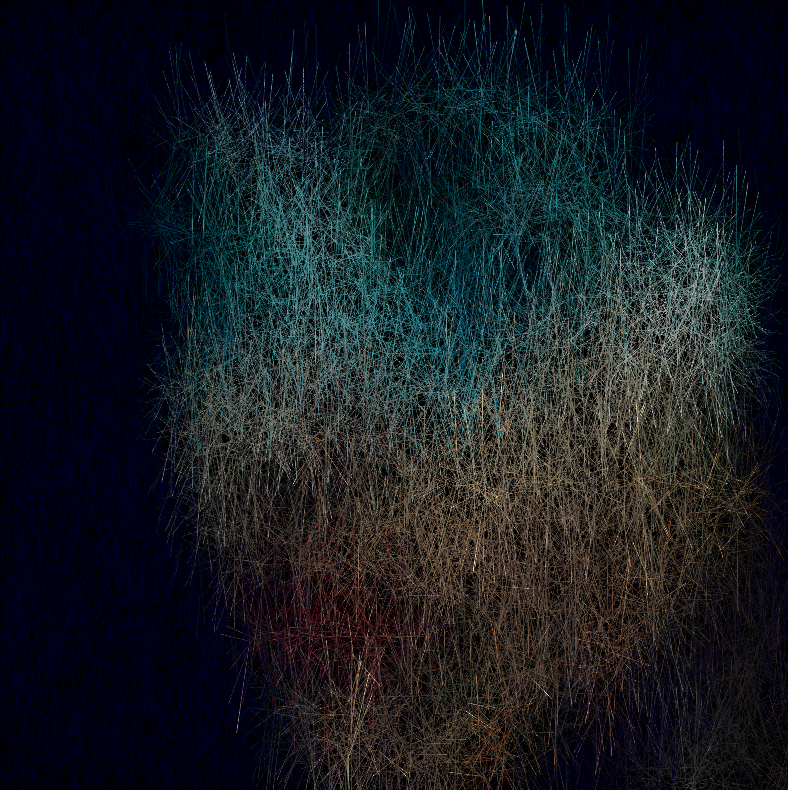

Experimenting with texture parameters: Dreary Downpour

Slightly more defined

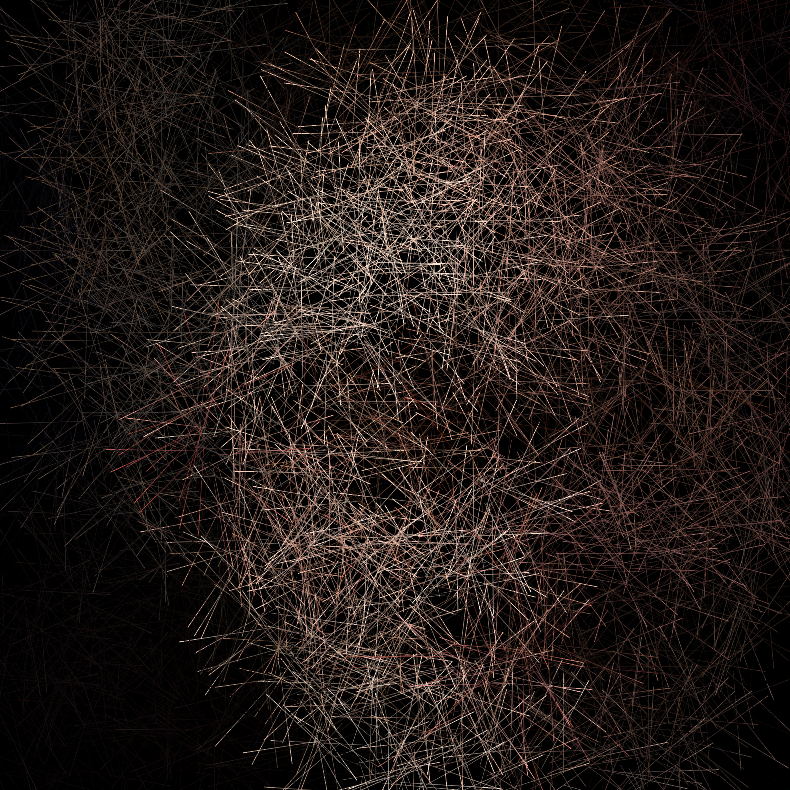

Furry Golan

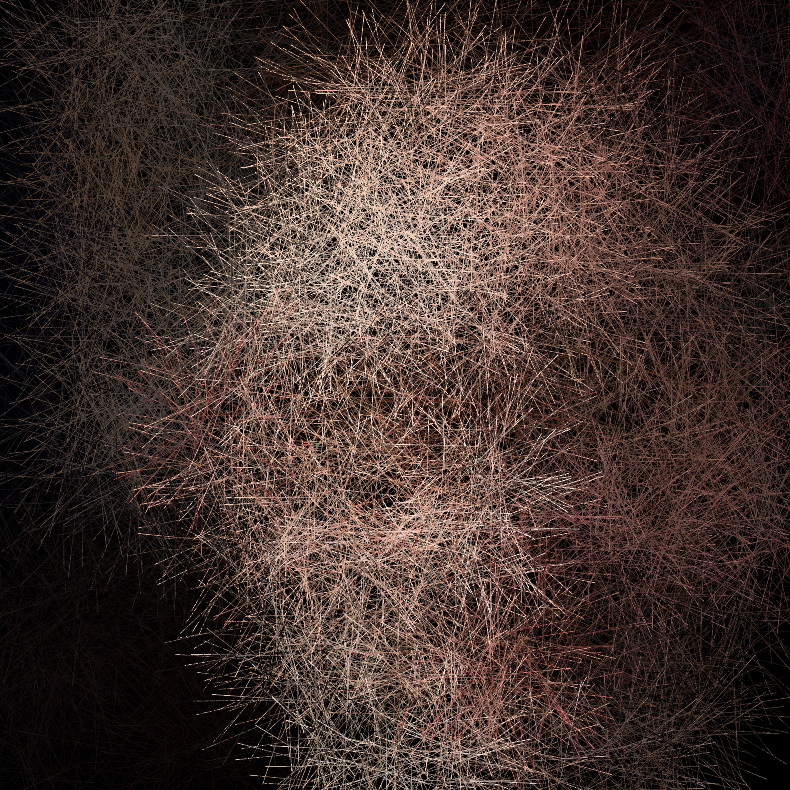

More furry Golan

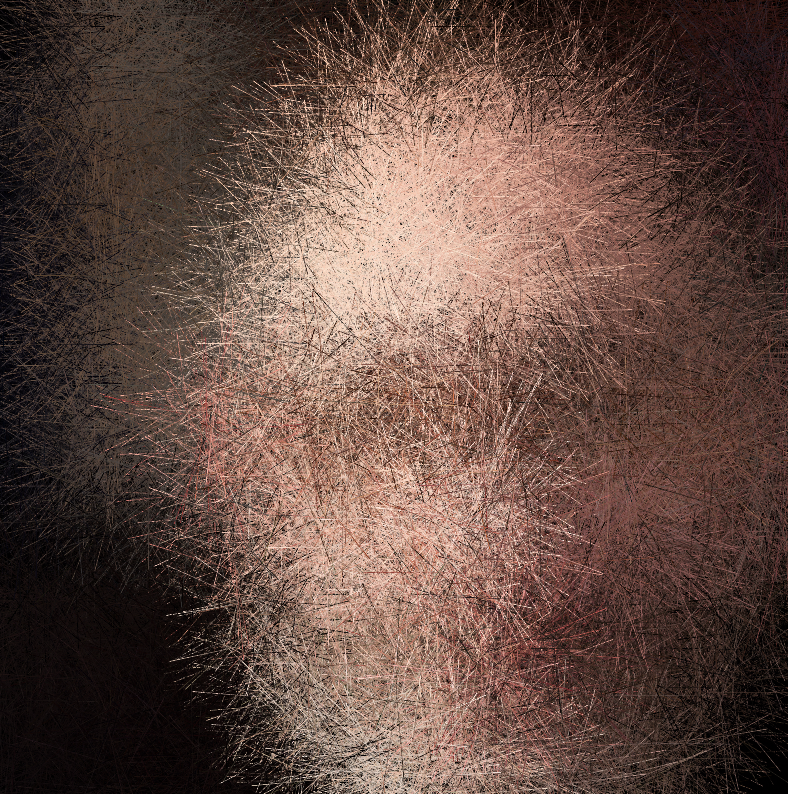

Most furry Golan

LookingOutwards-09-LookingOutwards!

SEM scans of various plant seeds colorized

Supawat’s Looking Outwards Post

This week, I’d like to address Supawat’s Looking Outwards post from Week 5, where he writes about the magical microscopic universe uncovered through colorized scanning electron microscopy (SEM). It was really interesting to read an alternative perspective on one of the Miller Gallery’s most exciting exhibitions (in my opinion), World Within, created and curated by Rob Kesseler. What surprised me most when observing the artifacts and documentation of this photographic process is that SEM scans are taken only in black and white.

Here’s how it works: using an electric filament, much like the one in a light bulb, the machine shoots a stream of electrons through a pair of electromagnetic lenses. The beam is deflected so that it scans across the studied object. Depending on the position of the beam, an electron detector collects data from the secondary electrons that are knocked back from the sample. After that, the image is run through some filters, resulting in an extremely high-resolution black and white image.

While these images are quite impressive on their own, without the sensibility of the artist who colors and shades these images, we wouldn’t be able to experience such evocative and beautiful colored imagery. It’s refreshing to find this kind of artistic collaboration even in the most dense and complex scientific studies.

Here is the tutorial Supawat linked that reveals the methodology behind coloring SEM scans:

Tutorial on Coloring SEM Scans

I took a gander and was truly blown away 🙂

agusman-LookingOutwards-08

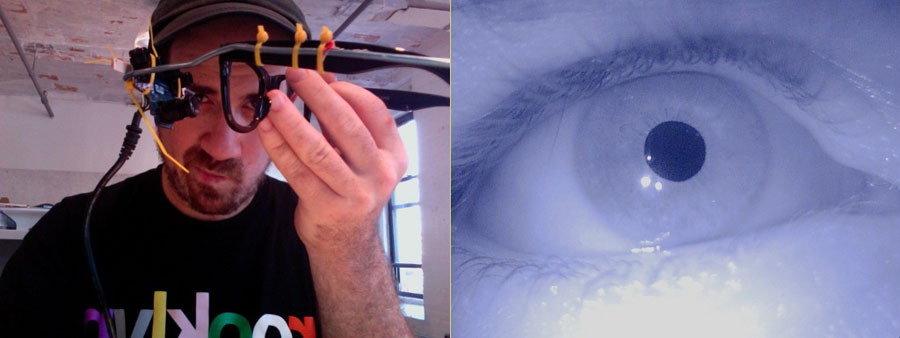

Zach Lieberman Eyeo Festival 2011

Zach Lieberman Eyeo Festival 2017

Zach Lieberman is an artist, researcher, hacker and maker of interactive computational works that unveil the synesthetic relationships between human behavior, form and color. Most notably, he is one of the co-founders and developers of openFrameworks, a C++ library for creative coding. You can find the documentation and more information about it here: http://openframeworks.cc/about/

Zach describes his life’s work as a splice of three categories: artistic, commercial and educational. Though he describes them as distinct categories, his projects often sit at the intersection of these spaces. As an artist, Zach primarily explores themes concerning the human form and uses technology as an artificial limb with which we can extend our interactions with the world.

The Manual Input Workstation presents a series of audiovisual vignettes which probe the expressive possibilities of hand gestures and finger movements.

Messa di Voce (“placing the voice” in Italian): an audiovisual performance in which the speech, shouts and songs produced by two abstract vocalists are radically augmented in real time by custom interactive visualization software.

EyeWriter: a low-cost, open source eye-tracking system that allows ALS patients to draw using just their eyes.

Many of his projects, such as those noted above, pose the human as the central performer of the art. I admire this model of art creation and personally strive to engage an audience through my work in the way Zach’s pieces do. In interaction-driven artworks, we can see the tangible relationships between the human form and the artist’s visual expression, combining the two in a very poetic way. This places the audience in a unique position of autonomy, allowing them to become the “creators” of new, generative works.

In his free time, Zach also loves to explore sketching for his own personal fulfillment and enjoyment. He posts daily sketches to his social media, garnering the attention of thousands each day.

Some of the most commonly observed visual motifs and elements he uses are circles, organic blobs, colors, typography and alphabet, masks and the body.

Zach enjoys being a mentor and educator to many. He has held teaching positions at the School for Poetic Computation and Parsons, and holds Friday Open Office Hours on Twitter.

agusman-LookingOutwards-07

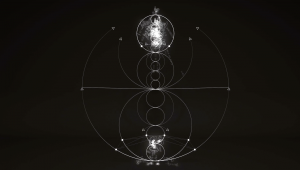

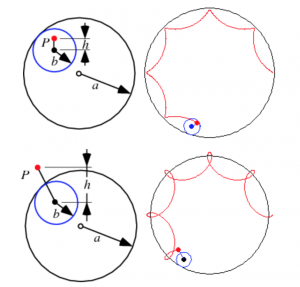

1.01 ‘Circles Within Circles’

Simon F A Russell

This video, created by freelance animation director Simon Russell, is one in a series of explorations of sonically generated geometry. He uses Houdini, a 3D animation program often used alongside Cinema 4D, to generate 3D particle simulations that emit audio pulses from particle data (such as collisions, position on the canvas, etc.). In this sequence, a particle burst generates the first tone, which sets the precedent for the following tones. The outer and inner rings also emit a constant tone. A Plexus-like effect, as Russell describes it, generates other tones based on the heights of the circular drawings.

As a musician, it’s really fascinating to see how other musicians interpret sound because it is almost never the same. Sound is a highly intimate sensation and is internalized within us from a very young age. Our interpretations and projections of sound, music and raw noise alike, are a reflection of a deeper aggregate of personal influences, preferences and inspirations. When we hand off the task of interpretation to a computer, we see a collaboration between the preferences of a human and the logic of a highly sensitive machine. It is a joint effort between the person who has influenced the program with their associations with sound and the computer, which can grab pieces of data that humans simply do not have access to or that are too complex to grasp. These include frequencies below or above our range of hearing, precise decibel and frequency levels that can be compared and contrasted with others, and of course the potential to represent these data in an orderly and beautiful way. In Russell’s video, we can see representations of the eight-note scale and of how the laws of physics apply to the perception of sound. Really data-vis!!!

agusman-Project07-Curves

//Anna Gusman

//agusman@andrew.cmu.edu

//Section E

//Project 07

var edges = 25;

function setup() {

createCanvas(400, 400);

}

function draw() {

background(10, 10); //use opacity to create lag illusion

translate(width/2,height/2); //move "0,0" to center

strokeWeight(0.2);

noFill();

drawHypotrochoid();

}

function drawHypotrochoid() {

//set variables for hypotrochoid

var a = map(mouseX,0,width,-200,200); //alpha

var b = edges; //beta

var h = map(mouseY,0,height,0,190); //distance from the rolling circle's center to the pen

//changes the stroke color based on mouse position

var red = map(19,0,50,0,255); //fixed red channel

var green = map(mouseX,0,width,0,255);

var blue = map(mouseY,0,height,0,255);

stroke(color(red,green,blue));

//draw hypotrochoid

beginShape();

for (var i = 0; i < 200; i++) {

var angle = map(i, 0, 200, 0, TWO_PI);

var x = (a - b) * cos(angle) + h * cos (((a - b)/b)*angle);

var y = (a - b) * sin(angle) - h * sin (((a - b)/b)*angle);

vertex(x, y);

}

endShape();

}

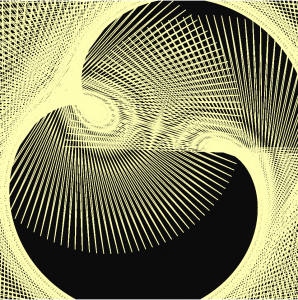

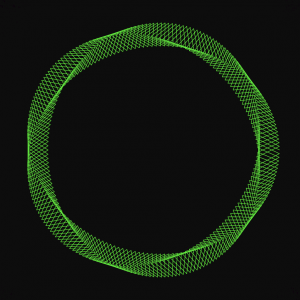

This project was a culmination of happy accidents. I found that the best way to approach manipulating the graphical elements of my algorithm was to experiment and play with combinations of values when mapping my mouse interactions. It was surprisingly helpful to use the mouse as a tool to explore the different geometric permutations and their relationships to one another. The experience eased my fear of strange and foreign numbers and letters (back from calc in high school) and turned them into a very liberating creative tool. I found that adding even the simplest and subtlest of effects (like redrawing the background with a lower opacity) resulted in really great visual manipulations. For my curve, I used a hypotrochoid because its roulette drawing sequence reminded me of the clock mechanism from my project 06.
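For reference, the hypotrochoid formula used in the sketch can be pulled out into a plain function, which makes it easier to play with values outside of `draw()`. In the standard definition, `a` is the radius of the fixed circle, `b` the radius of the circle rolling inside it, and `h` the distance from the rolling circle's center to the drawing point. The helper names below are my own and are only meant as a sketch.

```javascript
// Point on a hypotrochoid at parameter `angle` (in radians).
// a: radius of the fixed circle, b: radius of the rolling circle,
// h: distance from the rolling circle's center to the drawing point.
function hypotrochoidPoint(a, b, h, angle) {
  const k = (a - b) / b;
  return {
    x: (a - b) * Math.cos(angle) + h * Math.cos(k * angle),
    y: (a - b) * Math.sin(angle) - h * Math.sin(k * angle),
  };
}

// Sample `steps` points over one full turn of the parameter.
function hypotrochoidPoints(a, b, h, steps) {
  const points = [];
  for (let i = 0; i < steps; i++) {
    points.push(hypotrochoidPoint(a, b, h, (i / steps) * 2 * Math.PI));
  }
  return points;
}
```

Sweeping the angle over a single turn, as the sketch does, generally closes the curve only when `(a - b) / b` is a whole number; for other values the shape is left open after one turn, which may explain some of the more surprising permutations.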

Here are some screenshots of some permutations

![[OLD FALL 2017] 15-104 • Introduction to Computing for Creative Practice](../../wp-content/uploads/2020/08/stop-banner.png)