While researching for this week’s Looking Outwards, I came across my friend Monica Chang’s Week 8 Looking Outwards and became very interested. Her post was about Mike Tucker, an interactive designer and director at Magic Leap, a company centered on the future of spatial computing. They have collaborated with Radiohead’s Jonny Greenwood and Encyclopedia Pictura on the creation of Kanye West’s video game.

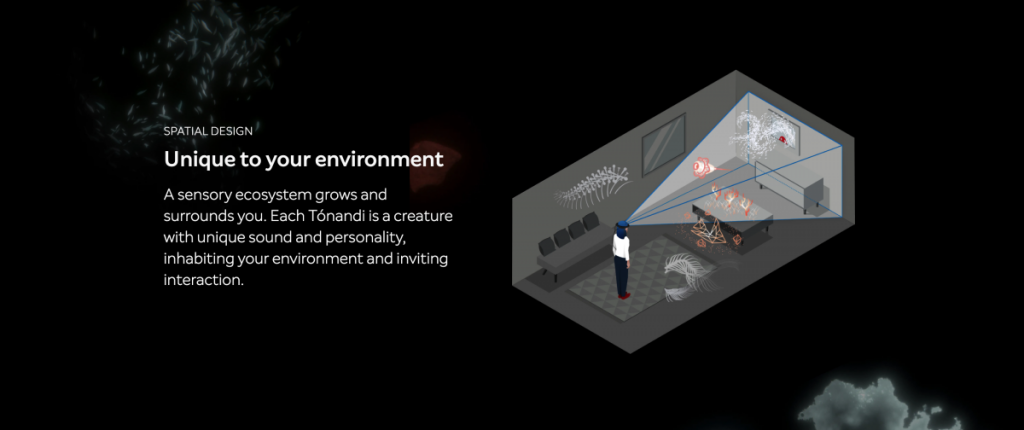

In her post, Monica mentions a virtual app called Tónandi, created through a collaboration between Tucker’s company, Magic Leap, and Sigur Rós. Tónandi, which translates to “sound spirit” in Icelandic, is an interactive audio-visual exploration of the sounds and spirit of Sigur Rós. The creators describe Tónandi as a creature with a unique sound and personality that inhabits our environment and invites interaction. I was very intrigued by this project because it interacts naturally with the surrounding environment, and because your hands and body are all you need to experience it and connect with the sound creatures around you.

![[OLD FALL 2019] 15-104 • Introduction to Computing for Creative Practice](../../../../wp-content/uploads/2020/08/stop-banner.png)