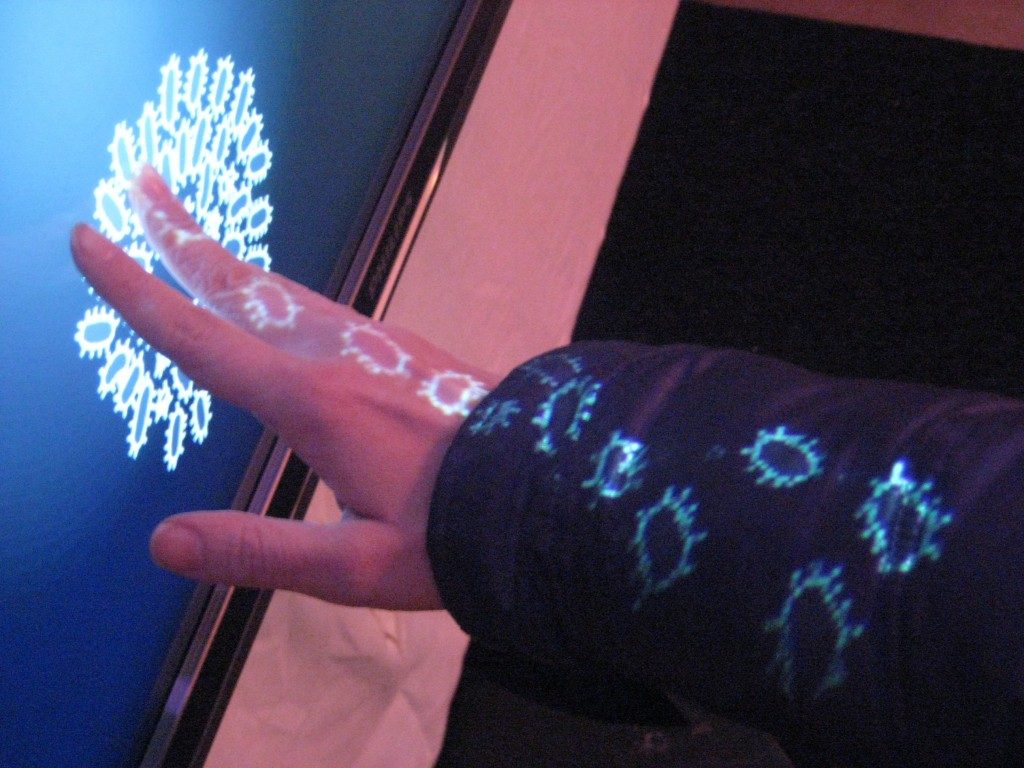

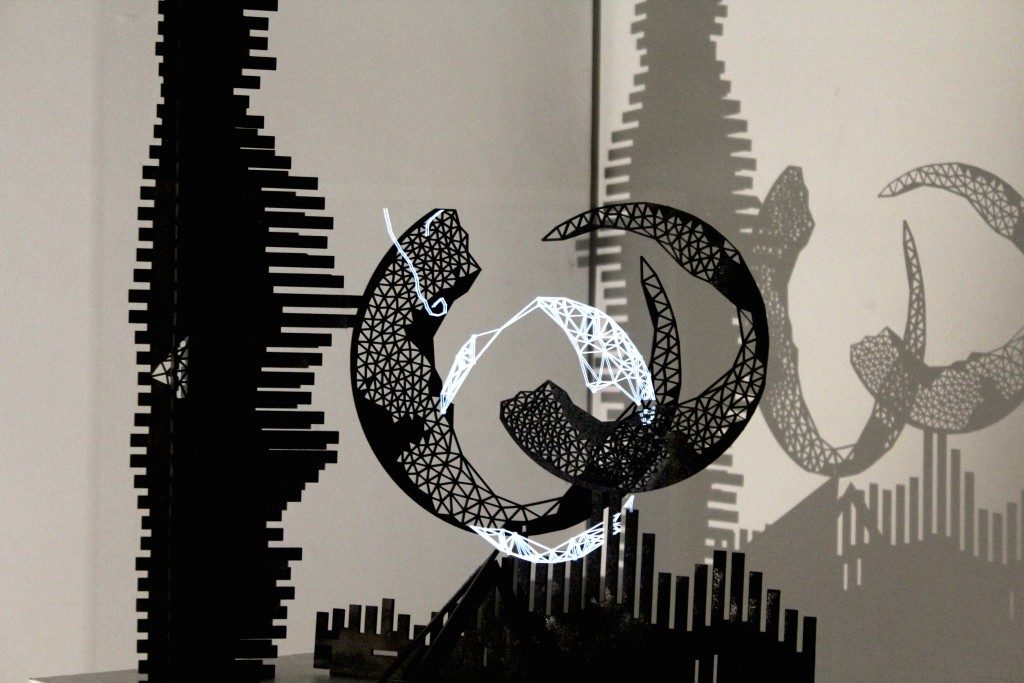

Heather Knight is currently conducting her doctoral research at Carnegie Mellon’s Robotics Institute and runs Marilyn Monrobot Labs in NYC, which creates socially intelligent robot performances and sensor-based electronic art. She earned her bachelor’s and master’s degrees in Electrical Engineering and Computer Science at MIT, with a minor in Mechanical Engineering. I’m inspired by her work because she creates robots that interact with humans and uses robotic intelligence to produce interesting performances.

Footnote on the video: Heather Knight’s TED talk with Marilyn Monrobot, which creates socially intelligent robot performances and sensor-based electronic art.

Her work also includes robotics and instrumentation at NASA’s Jet Propulsion Laboratory, interactive installations with Syyn Labs, and field applications and sensor design at Aldebaran Robotics. She is also an alumna of the Personal Robots Group at the MIT Media Lab.

She speaks very fluently, and it was nice to see the actual robot interacting with the audience. I find that a very powerful way to present.

![[OLD FALL 2017] 15-104 • Introduction to Computing for Creative Practice](../../../../wp-content/uploads/2020/08/stop-banner.png)