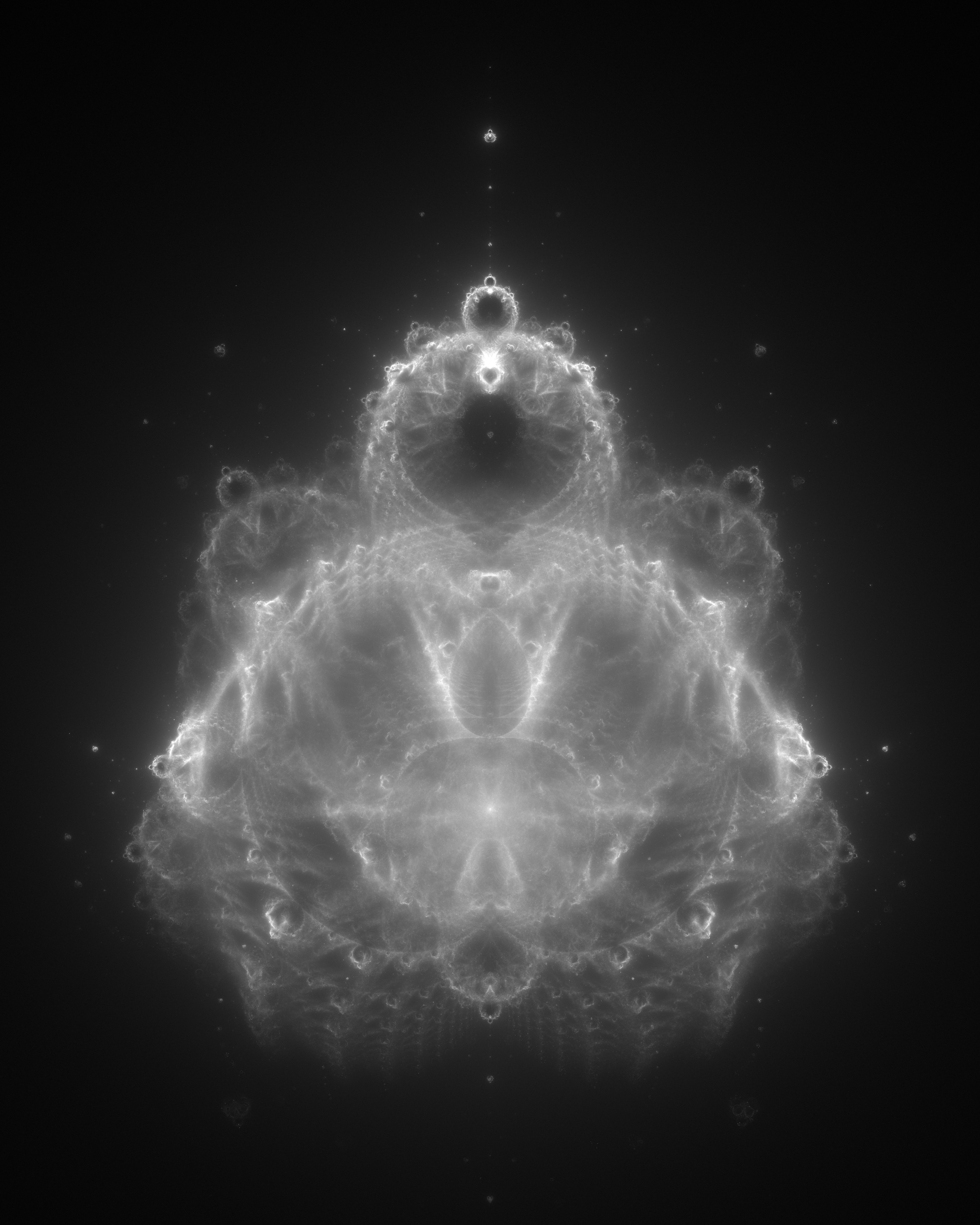

The Buddhabrot Technique was developed by Melinda Green and Lori Gardi, who named it for the image’s resemblance to the Buddha.

This technique derives from the Mandelbrot set, and, as creator Melinda Green stated, it is basically “a different way of representing the Mandelbrot set.” Instead of coloring each point by how quickly it escapes, she picked random starting points, ran the Mandelbrot iteration on each one, and, for the points that escape, recorded every position their orbits passed through, gradually building up this complex image. It took quite a long time to render: the “third eye” (the particularly bright circle centered at the top of the “head”) alone took an entire “long weekend,” presumably three days, to complete. More importantly, certain features, such as the “third eye,” cannot be seen at all in ordinary renderings of the Mandelbrot set, so this creation has opened up a new way to look at old information.
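To make the idea concrete, here is a minimal Python sketch of the usual Buddhabrot recipe. It is not Green’s own code: the function name `buddhabrot`, the grid size, sample count, iteration limit, and the [-2, 2] viewing window are all illustrative assumptions. Each random point c is iterated with z → z² + c; if the orbit escapes (|z| > 2), every point it visited is added to a hit-count grid, and points that never escape are skipped.

```python
import random


def buddhabrot(width=200, height=200, samples=20000, max_iter=200, seed=0):
    """Return a height x width grid of orbit hit counts (a Buddhabrot density map).

    Illustrative sketch: all parameter names and defaults are assumptions.
    """
    rng = random.Random(seed)  # seeded so the result is reproducible
    counts = [[0] * width for _ in range(height)]
    r = 2.0  # viewing window is [-2, 2] on both axes; escaping orbits stay inside it

    for _ in range(samples):
        # Pick a random starting point c in the square [-2, 2] x [-2, 2].
        c = complex(rng.uniform(-r, r), rng.uniform(-r, r))
        z = 0j
        orbit = []
        for _ in range(max_iter):
            z = z * z + c
            if abs(z) > 2.0:
                break  # the orbit escaped, so c is outside the Mandelbrot set
            orbit.append(z)
        else:
            continue  # never escaped: treat c as inside the set and skip it

        # Record every point the escaping orbit passed through.
        for p in orbit:
            col = int((p.real + r) / (2 * r) * width)
            row = int((p.imag + r) / (2 * r) * height)
            if 0 <= row < height and 0 <= col < width:
                counts[row][col] += 1
    return counts
```

To turn the counts into a picture, map each cell to a brightness, typically with a square-root or logarithmic curve so the faint regions stay visible. The familiar seated-figure image is usually displayed rotated by 90 degrees, with the real axis running vertically.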

This creation is truly magnificent and beautiful, and this YouTube video also highlights the “mini-Mandelbrot” components that surround the larger, more detailed Buddhabrot.

Works Cited

Bourke, Paul. The Buddhabrot. paulbourke.net. November 2000. 1 September 2016. <paulbourke.net/fractals/buddhabrot/>.

Computational Art. Metablake. 2014. 1 September 2016. <metablake.com/b/Computational_Art.html>.

Green, Melinda. The Buddhabrot Technique. Superliminal.com. 1 September 2016. <superliminal.com/fractals/bbrot/bbrot.html>.

![[OLD – FALL 2016] 15-104 • COMPUTING for CREATIVE PRACTICE](../../../../wp-content/uploads/2020/08/stop-banner.png)

The Snout is a robotic arm with a googly eye on top that reacts to human movement by doing what looks like a double take. It was created by Golan Levin, Lawrence Hayhurst, Steven Benders, and Fannie White in 2008. The project took 5 months and involved difficult problems such as getting the 600-kilo robot onto a roof for display. Its software was developed in 3 weeks using toolkits such as openFrameworks. Professor Levin explains that the project was inspired by a Mummenschanz performance he saw when he was 6 years old, in which the performers were dressed in worm-like tubes that looked similar to The Snout.

What is interesting about this interactive project is that it creates the illusion that the robot is confused. Making an animated character seem confused is difficult even in an ordinary setting, let alone doing what Professor Levin calls “real-time animation” with a huge robot. The project addresses only one emotion; in future projects, robots should be able to display others, such as joy, anger, sadness, and fear. This may be difficult, requiring artists, computer scientists, and psychologists to work together, but something similar to The Snout was built almost 50 years ago: in 1970, Edward Ihnatowicz made a robot called the “Senster” that turned toward you when you clapped. It was a simple reaction, but it hinted at what we are able to do with the technology we have today.