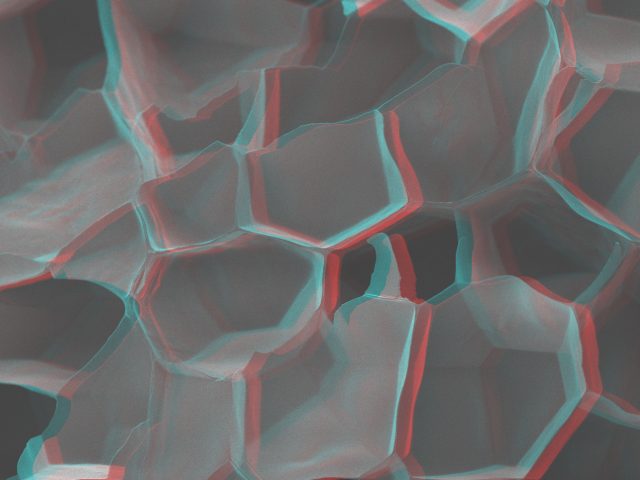

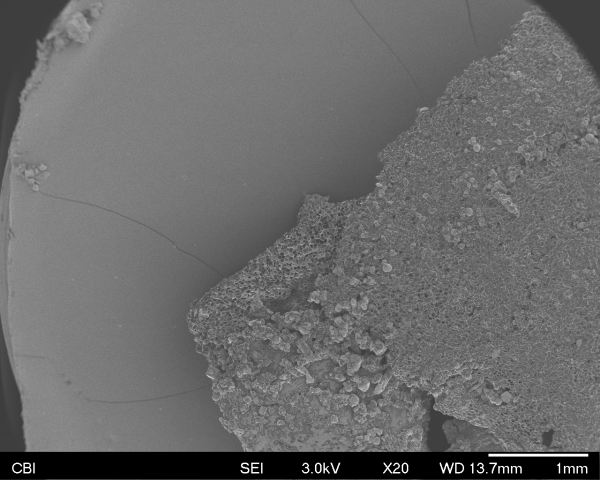

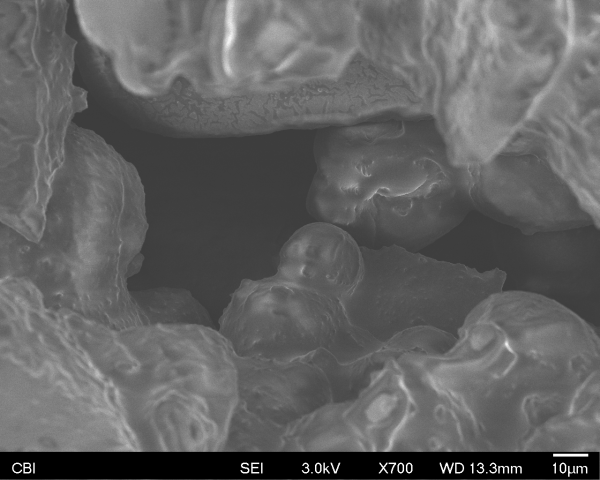

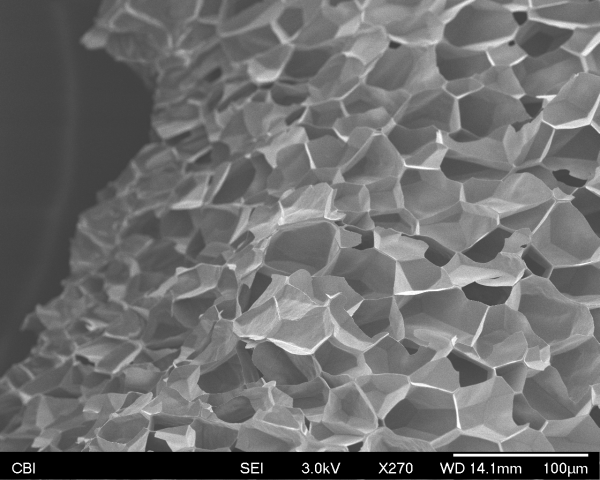

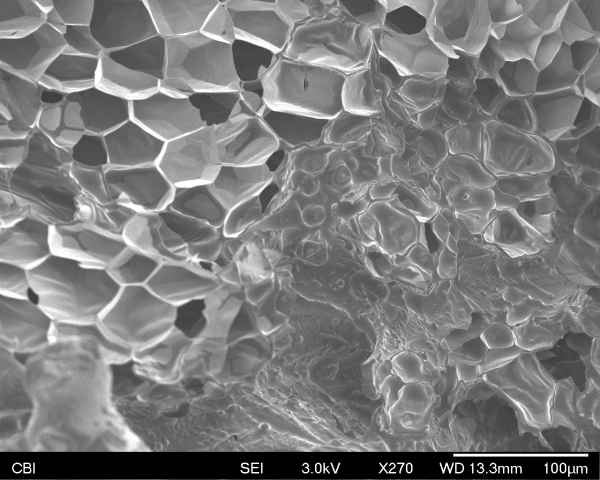

The medium in which we decide to capture an image can substantially influence our perception of the subject. These mediums can expose the object in a new way that we haven’t seen before. A very recent example is the electron microscopy where we would be able to see the low-level structure of objects. Those images that contradict what we originally think of when we think of an object are the ones that we remember the most (looking at popcorn up close and seeing its paper hex-like structure will stick with me).

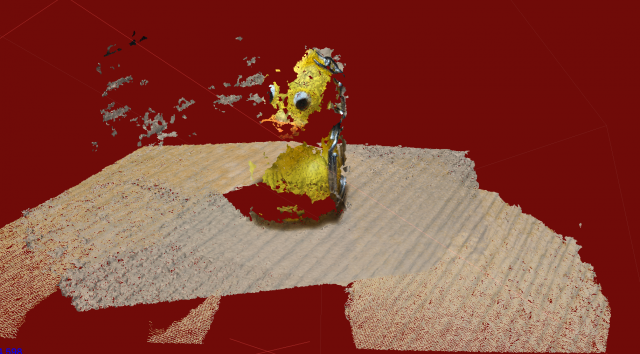

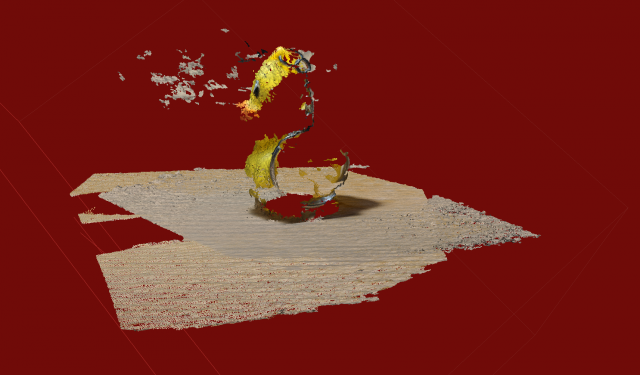

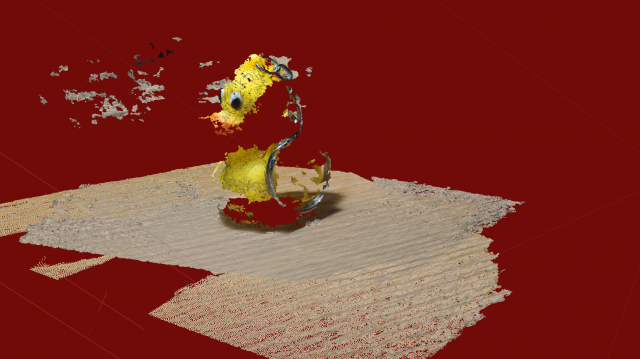

We can then abstract this idea to say that the medium can also influence the topology of an object. Since images are used to describe and give meaning to a topology, then influencing images will also influence their corresponding topology.

I would argue that different mediums can be objective. These mediums can be objective if you define mediums in terms of their equipment used. Since there is no disagreement on the classification of different imaging equipment, then there shouldn’t be any disagreement among the mediums. These mediums would then be both predictable and scientifically accurate since they reference the technology used to make the mediums.