In essence, I find that in the age of machine vision, the operator of the camera becomes more and more a curator of vision. GANs, Google Clips, and Bruce Sterling’s camera all seem to me facets of a form of permanent world data collection that is then curated and filtered by the operator. Through this, the individual’s biases and interests come through in the content they choose to convey, much like the traditional notion of the photographer’s eye behind the point-and-shoot. The user becomes the author of their specific curations, with the camera acting as a point of data collection for their world. The camera’s world is no longer framed as a snapshot in time but as a continuous stream of input that is then filtered: the camera operator as film editor. Through this, data collection and curation in essence become one. This collected data, in turn, is no longer limited by light or even by visual aesthetics, but becomes a way to frame any documentable stimulus that the world and human existence provide.

Response: The Camera, Transformed by Machine Vision

This article highlights a number of examples in which a user acts as a trainer for a computer that then serves as an image-capture or image-generation device. The article seems to imply that, in the future of image capture, we may cede control and authorship to our computational tools.

The article opens with common icons for the camera and 128,706 sketches of cameras, each of which tends to look like a classic point-and-shoot. It begins this way so that, in its larger discussion of contemporary imaging, it can show that the concept of the camera (the things we take images with) is shifting toward devices with more agency and intelligence of their own.

The key subjects the article moves through are YOLO real-time object detection, Google Clips, Pinterest visual search, and machine learning tools for image generation. What becomes evident is that the key paradigm shift is more about computers’ ability to interpret and modify the images produced by cameras. In the cases of object detection, the Google camera, and Pinterest, algorithms are used to understand the contents of an image, and the computer produces a different result depending on what it sees. The end of the article explores some more troubling subjects: it highlights how machine learning algorithms can generate photorealistic images. This computational power could not only alter our understanding of cameras and photo equipment, but also begin to drastically alter our understanding of the truth of images.

Hans Haacke – Environmental & Kinetic Sculptures

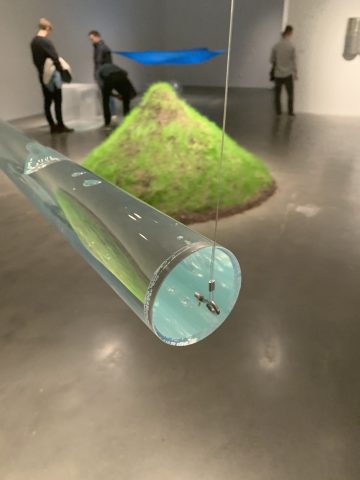

I was recently at the New Museum in New York City. I had been drawn there by the work of Hans Haacke, specifically his kinetic and environmental art. The museum had devoted one large space to these pieces: the room was filled with undulating fabric, a balloon suspended in an airstream, acrylic prisms filled to varying degrees with water, and more. Each of these pieces represented a unique physical system that depended on the properties of the space it was installed in or on the viewers who interacted with it. One piece that caught my eye was a clear acrylic cylinder, almost completely filled with water, suspended from the ceiling. It was in essence a pendulum with water sloshing around inside. I found myself intrigued by the way the water moved and the air bubbles oscillated as the larger system swung. There’s a highly complex relationship between the dynamics of the fluid and those of the pendulum.

Connecting this back to experimental capture: how can we capture and highlight this kind of complex dynamic system? What if a robot-driven camera followed the motion of the watery pendulum? What if you used a really big camera that highlighted the act of the robot capturing the pendulum? If you exhibited the robot, the pendulum, and the captured video together, how would that change the piece?

I get excited by art that can spur ideas and inspiration in me, and that’s why Hans Haacke’s kinetic art excited me.

Link to New Museum Show: https://www.newmuseum.org/exhibitions/view/hans-haack

Response: The Camera, Transformed By Machine Learning

When making our list of expectations of “cameras” and “capturing”, we discussed the assumption that there has to be a human photographer when an image is captured. While I agree this assumption is not true, I think owners of image-capturing machines are not talented, and machine-captured images cannot be beautiful because they are not purposeful. Projects like Google Clips eliminate the need for a photographer, which, as a photographer myself, really upsets me. I already get annoyed by the number of people who claim they are “photographers” but hardly know how to handle a DSLR off auto mode, and I think smart cameras would create even more of these people. I imagine that training a neural net to take “beautiful” photos (well-composed, well-lit, with strong subjects) isn’t that far off, and it’s reasonable to assume those who own such cameras would claim the computational excellence as their own expertise. It’s important to highlight the difference between ownership and authorship here: I think owning a device that takes a photo, and even using it, does not make you a photographer. It would feel so wrong to credit the owner of a smart camera for a “beautiful” photo; in that situation, the human hardly even touches the camera. But I realize that saying the opposite, that the camera should get credit for its own photos, is not necessarily correct either, because the camera has no intuition about beauty. Its capturing strategy comes from an algorithm, and it takes a photo for no reason other than that it senses something it recognizes. And the people who coded that algorithm shouldn’t get credit for the beauty; they weren’t even at the scene of the photo. The smart camera seems to take authorship, or uniqueness, out of photography, and that uniqueness is essentially what makes photography a valid art practice. In my opinion, a good photographer is one who provides a unique perspective and voice to the world through their images. The smart camera will strip the world of these unique perspectives.
That makes me so sad.

Reading 01

We often think of cameras as devices that are commanded by the photographer, but they’ve been slowly moving further and further away from that definition. Most if not all of the students in the class grew up around cameras with auto-focus and auto-aperture/exposure capabilities. But now that cameras can press the shutter (or its digital equivalent) on their own, we begin to question who holds authorship over the photograph. At this point, I don’t think we need to be asking that question yet. There is still an authorship role present in the use of these cameras: they still need to be placed somewhere, and the resulting photographs still need to be curated. While the actual photo taking is mindless for the photographer, there is still a substantial amount of agency and consideration in setting up the camera and selecting the resulting photographs. Because of this (and I think the argument extends to most automated art), the user of a Clips camera or any other camera that takes its own photos still has a role of authorship over the resulting art. That role may be different from what it was in the past, but it has yet to vanish.

The Camera, Transformed By Machine Learning

This article describes how AI and ML have entered the field of computer vision, and more specifically cameras. Before 2010, some of the best-performing vision algorithms relied on code written and reasoned out by humans. With the rise of AI, systems capable of learning patterns from thousands of pieces of data were able to outperform some of the best human-designed algorithms, bringing AI into the vision world.

Now, with intelligent vision systems, the operator has less responsibility when taking a photo and can leave the camera to do more of the work. The operator’s role should be to exercise creative freedom in deciding on a photograph when taking it, and the AI should only intervene to enhance the photo if the operator requests it (sometimes AI can make a photo worse). We are currently at the point where operators and cameras work together, but we may well be entering a future in which cameras make most of the decisions when taking a photograph.

Welcome to the Spring 2020 ExCap website!

Welcome to the Spring 2020 ExCap website! You can log in here:

https://courses.ideate.cmu.edu/60-461/s2020/wp-admin/

Use your Andrew credentials to access the dashboard. Your WordPress site is configured to use Shibboleth, the Single Sign-On (SSO) system in use on campus, which eliminates the need for separate accounts on each course site.