// Bettina Chou

// yuchienc@andrew.cmu.edu

// section c

// project 11 -- freestyle turtles

///////////////TURTLE API///////////////////////////////////////////////////////////////

function turtleLeft(d) {

this.angle -= d;

}

function turtleRight(d) {

this.angle += d;

}

function turtleForward(p) {

var rad = radians(this.angle);

var newx = this.x + cos(rad) * p;

var newy = this.y + sin(rad) * p;

this.goto(newx, newy);

}

function turtleBack(p) {

this.forward(-p);

}

function turtlePenDown() {

this.penIsDown = true;

}

function turtlePenUp() {

this.penIsDown = false;

}

function turtleGoTo(x, y) {

if (this.penIsDown) {

stroke(this.color);

strokeWeight(this.weight);

line(this.x, this.y, x, y);

}

this.x = x;

this.y = y;

}

function turtleDistTo(x, y) {

return sqrt(sq(this.x - x) + sq(this.y - y));

}

function turtleAngleTo(x, y) {

var absAngle = degrees(atan2(y - this.y, x - this.x));

var angle = ((absAngle - this.angle) + 360) % 360.0;

return angle;

}

function turtleTurnToward(x, y, d) {

var angle = this.angleTo(x, y);

if (angle < 180) {

this.angle += d;

} else {

this.angle -= d;

}

}

function turtleSetColor(c) {

this.color = c;

}

function turtleSetWeight(w) {

this.weight = w;

}

function turtleFace(angle) {

this.angle = angle;

}

function makeTurtle(tx, ty) {

var turtle = {x: tx, y: ty,

angle: 0.0,

penIsDown: true,

color: color(128),

weight: 1,

left: turtleLeft, right: turtleRight,

forward: turtleForward, back: turtleBack,

penDown: turtlePenDown, penUp: turtlePenUp,

goto: turtleGoTo, angleto: turtleAngleTo,

turnToward: turtleTurnToward,

distanceTo: turtleDistTo, angleTo: turtleAngleTo,

setColor: turtleSetColor, setWeight: turtleSetWeight,

face: turtleFace};

return turtle;

}

///////////////BEGINNING OF CODE///////////////////////////////////////////////////////////////

var img; //reference image; loaded in preload() and sampled in draw()

function preload() {

img = loadImage("https://i.imgur.com/UB3R6VS.png");

}

function setup() {

createCanvas(300,480);

img.loadPixels(); //load pixels of image but don't display image

background("#ffccff");

}

var px = 0; //x coordinate we're scanning through image

var py = 0; //y coordinate we're scanning through image

var threshold = 90; //image is bw so threshold is set to high brightness

function draw() {

var col = img.get(px, py); //retrieves RGBA value from x,y coordinate in image

var currentPixelBrightness = brightness(col);

var t1 = makeTurtle(px,py);

var t2 = makeTurtle(px + 20, py); //offset by 20 pixels to the right

strokeCap(PROJECT);

t1.setWeight(5);

t1.setColor("#ccffff");

t2.setWeight(1);

t2.setColor("#33cc33");

if (currentPixelBrightness > threshold) { //does not draw lines in negative space

t1.penUp();

t2.penUp();

}

else { //brightness is at or below threshold: only draws lines to fill in positive space

t1.penDown();

t2.penDown();

}

t1.forward(1);

t2.forward(1);

px += 1;

if (px >= width) { //brings px to 0 again

px = 0;

py += 10; //starts a new row

}

}

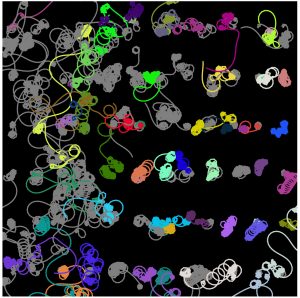

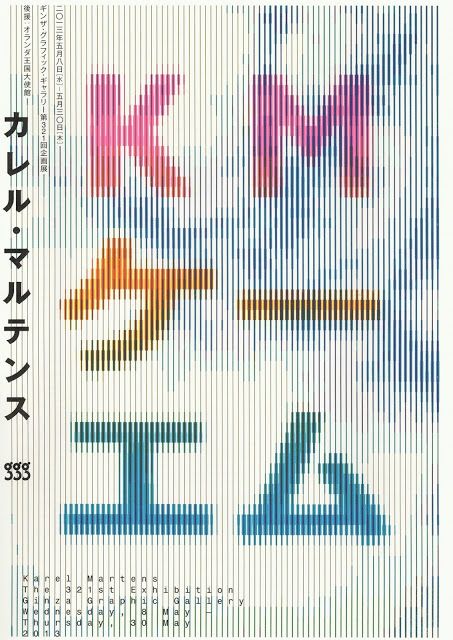

I was inspired by the following piece of work and considered how computation could create such image treatments, instead of setting the lines and offsets by hand.

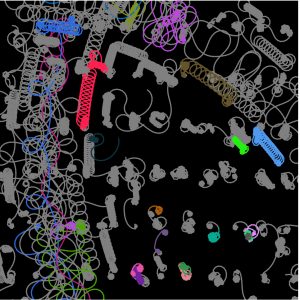

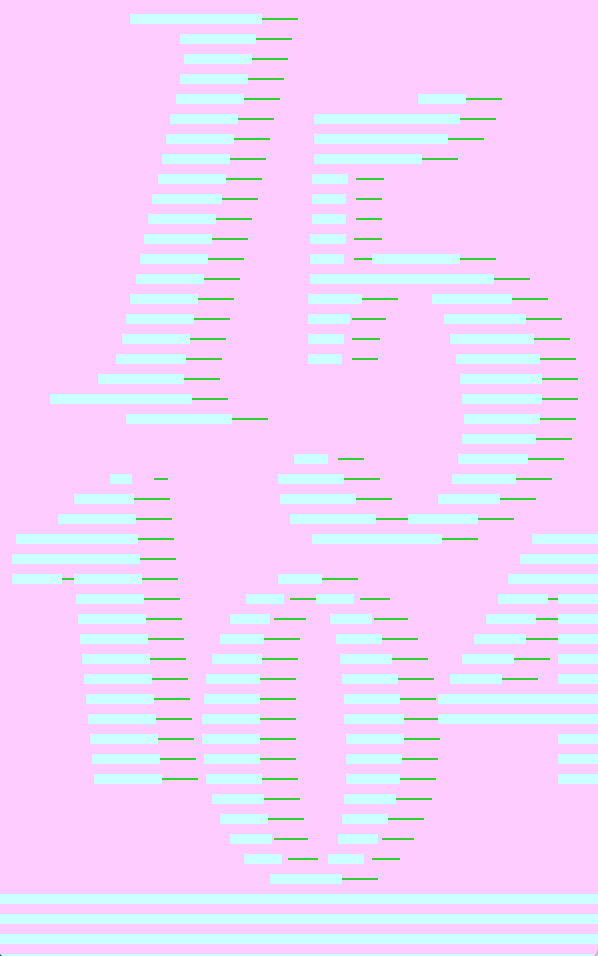

I would have had no idea where to start if not for the deliverables prompt, which suggested that we could have turtles draw things in relation to an image. I decided to build upon the pixel brightness techniques we learned in previous weeks: each turtle calls penDown() where the image is black and penUp() where the image is white. A black and white image is therefore easy to recreate using nothing but horizontal line segments.
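The pen-up/pen-down rule can also be looked at one row at a time. The sketch below is only an illustration and is not part of the project code: `darkRuns` is a hypothetical helper, and it assumes brightness values on the 0 to 100 scale with the same threshold of 90. It takes one row of brightness values and returns the stretches where the pen would be down.

```javascript
// Hypothetical helper (not in the original sketch): given one row of
// brightness values, return [start, end) pairs for every stretch of
// pixels at or below the threshold, i.e. where the pen would be down.
function darkRuns(brightnessRow, threshold) {
  var runs = [];
  var start = -1; // -1 means the pen is currently up
  for (var x = 0; x < brightnessRow.length; x++) {
    var isDark = brightnessRow[x] <= threshold;
    if (isDark && start < 0) {
      start = x; // pen goes down here
    } else if (!isDark && start >= 0) {
      runs.push([start, x]); // pen comes up here
      start = -1;
    }
  }
  if (start >= 0) {
    runs.push([start, brightnessRow.length]); // row ended with the pen down
  }
  return runs;
}

// One bright pixel, two dark, one bright, one dark:
var exampleRuns = darkRuns([100, 0, 0, 100, 0], 90);
// exampleRuns is [[1, 3], [4, 5]]
```

Each pair corresponds to one line segment the turtle would leave behind in that row; the per-frame version in draw() produces the same segments one pixel at a time.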

Above is the quick image I put together in Illustrator for my turtle to trace.

Above is a screenshot of the finished image from this particular code. Line weight, color, the number of lines, and the offsets could easily be changed to create a variety of image treatments. Multiple reference images could even be combined to create a more complex drawing.
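One possible way to make those variations easy to try is to keep each turtle's look in a small table instead of hard-coding t1 and t2. This is a sketch under assumptions, not the project's method: `turtleStyles` and `styledLines` are hypothetical names, and the two entries simply reuse the weights, colors, and 20 pixel offset from the code above.

```javascript
// Hypothetical style table: one entry per line that should be drawn for
// every dark stretch of the image. dx is the horizontal offset in pixels.
var turtleStyles = [
  {dx: 0, weight: 5, color: "#ccffff"},  // thick pale blue line, like t1
  {dx: 20, weight: 1, color: "#33cc33"}  // thin green line shifted right, like t2
];

// Hypothetical helper: for a dark stretch from startX to endX on row y,
// describe one horizontal line per style. Nothing is drawn here; the
// result is plain data that a p5.js sketch could pass to line().
function styledLines(startX, endX, y, styles) {
  return styles.map(function (s) {
    return {
      x1: startX + s.dx, y1: y,
      x2: endX + s.dx, y2: y,
      weight: s.weight,
      color: s.color
    };
  });
}

var exampleLines = styledLines(1, 3, 10, turtleStyles);
// exampleLines[1] starts at x = 21 and ends at x = 23
```

In a p5.js sketch, each returned object could be drawn with strokeWeight(), stroke(), and line(). Adding a third entry to the table would add a third offset line without touching the drawing logic.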
