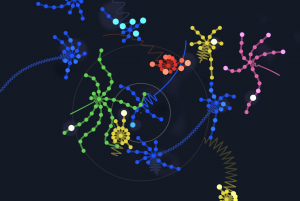

sketch

```javascript
// Face and cheek sizes
var faceD = 150; // face diameter
var cheekW = 35;
var cheekH = 20;

// Ear sizes
var LeftEarW = 110;
var LeftEarH = 150;
var RightEarW = 70;
var RightEarH = 100;

// Bubble color (also used, darkened, for the cheek)
var R = 252;
var G = 200;
var B = 200;

var BubbleD = 40; // diameter of bubble
var x = 425; // x position of the nose tip
var y = 130; // y position of the nose tip
var eyeW = 10;
var eyeH = 10;
var Px = 240; // x position of the lower end of the body division line

function setup() {
  createCanvas(640, 480);
  angleMode(DEGREES); // rotate() below takes degrees
}

// Randomize the elephant's features on every mouse click
function mousePressed() {
  faceD = random(150, 170);
  LeftEarW = random(100, 180);
  LeftEarH = random(145, 185);
  RightEarW = random(60, 140);
  RightEarH = random(95, 135);
  R = random(200, 255);
  G = random(100, 220);
  B = random(100, 220);
  BubbleD = random(20, 60);
  x = random(415, 475);
  y = random(110, 170);
  Px = random(240, 280);
  eyeW = random(10, 20);
  eyeH = random(10, 20);
}

function draw() {
  background(255, 236, 236);

  // body
  noStroke();
  fill(210);
  beginShape();
  curveVertex(200, 250); // first point: control point only, not drawn
  curveVertex(220, 260);
  curveVertex(175, 330);
  curveVertex(165, 400);
  curveVertex(300, 400);
  curveVertex(330, 240);
  curveVertex(350, 240); // last point: control point only, not drawn
  endShape();

  // body division
  stroke(50, 32, 32);
  strokeWeight(2);
  curve(240, 300, 240, 350, 290, 330, 300, 330);
  line(260, 345, Px, 400);

  // right ear (rotated 10 degrees about the canvas origin)
  push();
  rotate(10);
  noStroke();
  fill(210);
  ellipse(270, 120, RightEarW, RightEarH);
  fill(252, 225, 225);
  ellipse(270, 120, RightEarW / 1.5, RightEarH / 1.5); // inner ear
  pop();

  // left ear
  noStroke();
  fill(210);
  ellipse(220, 210, LeftEarW, LeftEarH);
  fill(252, 225, 225);
  ellipse(220, 210, LeftEarW / 1.5, LeftEarH / 1.5); // inner ear

  // face
  noStroke();
  fill(210);
  ellipse(300, 220, faceD, faceD);

  // eye
  fill(50, 32, 32);
  ellipse(345, 220, eyeW, eyeH);

  // cheek: a darker tint of the bubble color
  fill(R - 20, G - 20, B - 50);
  ellipse(330, 250, cheekW, cheekH);

  // nose (its tip follows x, y)
  fill(210);
  beginShape();
  curveVertex(350, 200);
  curveVertex(355, 190);
  curveVertex(375, 180);
  curveVertex(x, y);
  curveVertex(x - 5, y + 15);
  curveVertex(x - 5, y + 45);
  curveVertex(400, 195);
  curveVertex(390, 220);
  curveVertex(365, 255);
  curveVertex(365, 260);
  endShape();

  // bubble
  fill(R, G, B);
  ellipse(x + 25, y + 20, BubbleD, BubbleD); // large bubble
  fill(255);
  ellipse(x + 25, y + 20, BubbleD * 0.7, BubbleD * 0.7); // white highlight
  fill(R, G, B);
  ellipse(x + 22, y + 17, BubbleD * 0.7, BubbleD * 0.7); // covers most of the white, leaving a crescent
}
```

I wanted to make an elephant whose ear size, nose length, and cheek color change each time you click the mouse. In this project I tried out the p5.js `curveVertex()` function. It was very confusing at first, but I gradually learned how it works.
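Since `curveVertex()` was the confusing part, here is a minimal standalone sketch of what it computes, written in plain JavaScript so it runs without p5.js. It assumes that `curveVertex()` with the default `curveTightness(0)` follows the standard uniform Catmull-Rom formula, which is how the p5.js reference describes its curves; the helper names `catmullRom` and `sampleCurveVertices` are hypothetical and not part of p5. Run on the body outline from the sketch, it shows that the drawn curve starts at the second vertex and ends at the second-to-last one, so the first and last `curveVertex()` calls only steer the ends of the shape.

```javascript
// Standalone illustration (no p5.js needed). Assumption: this matches
// curveVertex() under the default curveTightness(0). Helper names are hypothetical.

// Catmull-Rom blend of four control values a, b, c, d at t in [0, 1].
// At t = 0 the result is exactly b; at t = 1 it is exactly c.
function catmullRom(a, b, c, d, t) {
  const t2 = t * t;
  const t3 = t2 * t;
  return (
    a * (-0.5 * t3 + t2 - 0.5 * t) +
    b * (1.5 * t3 - 2.5 * t2 + 1) +
    c * (-1.5 * t3 + 2 * t2 + 0.5 * t) +
    d * (0.5 * t3 - 0.5 * t2)
  );
}

// Sample the path traced by a list of curveVertex() points given as [x, y].
// Each window of four consecutive points draws only the segment between its
// middle two, so the first and last points are never reached.
function sampleCurveVertices(points, stepsPerSegment) {
  const samples = [];
  for (let i = 0; i + 3 < points.length; i++) {
    const [p0, p1, p2, p3] = points.slice(i, i + 4);
    // Include t = 1 only on the final segment so shared endpoints are not duplicated.
    const isLast = i + 4 === points.length;
    const steps = isLast ? stepsPerSegment + 1 : stepsPerSegment;
    for (let s = 0; s < steps; s++) {
      const t = s / stepsPerSegment;
      samples.push([
        catmullRom(p0[0], p1[0], p2[0], p3[0], t),
        catmullRom(p0[1], p1[1], p2[1], p3[1], t),
      ]);
    }
  }
  return samples;
}

// The body outline from the sketch, in the same order as its curveVertex() calls.
const body = [
  [200, 250], [220, 260], [175, 330], [165, 400],
  [300, 400], [330, 240], [350, 240],
];

const path = sampleCurveVertices(body, 10);
console.log(path[0]); // [220, 260], the 2nd vertex
console.log(path[path.length - 1]); // [330, 240], the 6th vertex
```

The same rule applies to the nose: (350, 200) and (365, 260) only shape how the outline begins and ends and are not reached by it.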

![[OLD FALL 2017] 15-104 • Introduction to Computing for Creative Practice](../../../../wp-content/uploads/2020/08/stop-banner.png)