InferKit:

I go to school today wearing two pair of shoes.

I’m wearing the one pair with the cuter toes and the less funky colors (neon pink with a green swoosh, and green and orange with leopard stripes).

I’m also wearing one pair of sneakers that I can play basketball with.

I don’t think any of the students or professors have noticed my footwear yet, which is a little sad.

The fashion of the faculty, such as it is, seems to be sweaters and the occasional pair of well worn jeans and / or skivvies that could be described as casual.

None of them are stylish.

Most of them look like they got dressed that morning and just threw their stuff together.

Most of them have also learned how to leave the house without looking like they’ve just got out of bed.

They know that if they look so worn out that you don’t know if they’re awake or not, they don’t have any business leaving the house at all.

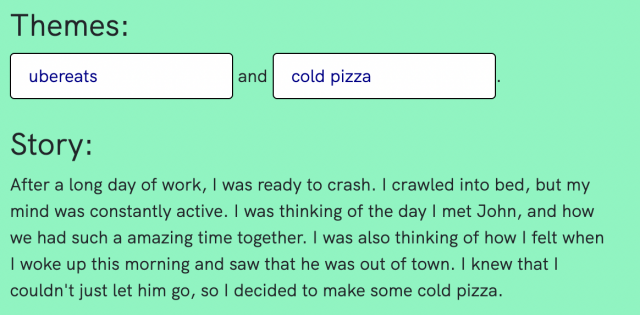

Narrative Device:

Master Yoda, the wrinkled old Jedi, looked up from his book and smiled at the two children. “Coca-cola, my young ones,” he said, “would make you very happy.”

Both tools are really cool to play with. I like InferKit better because the text it generated has more variety than the text from Narrative Device.

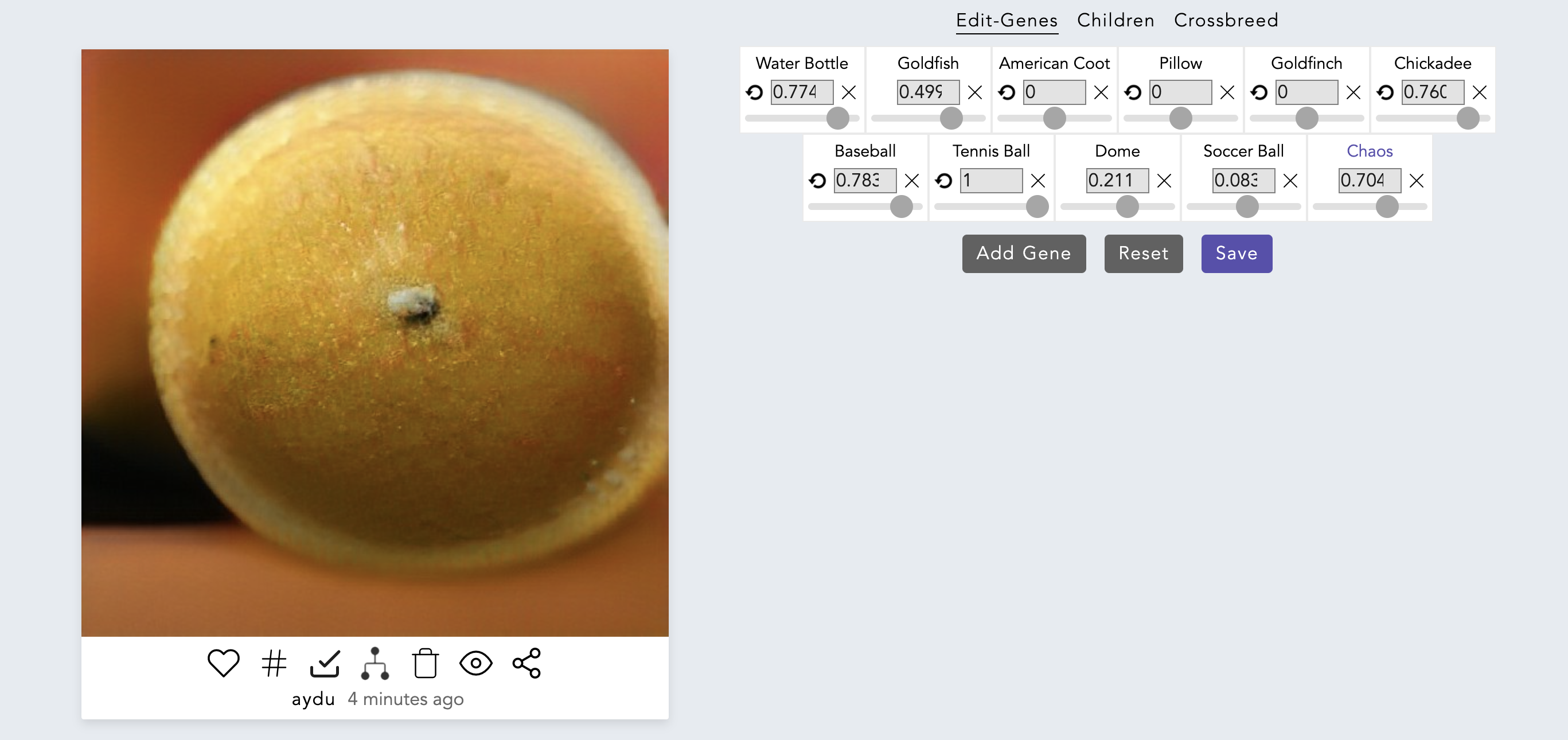

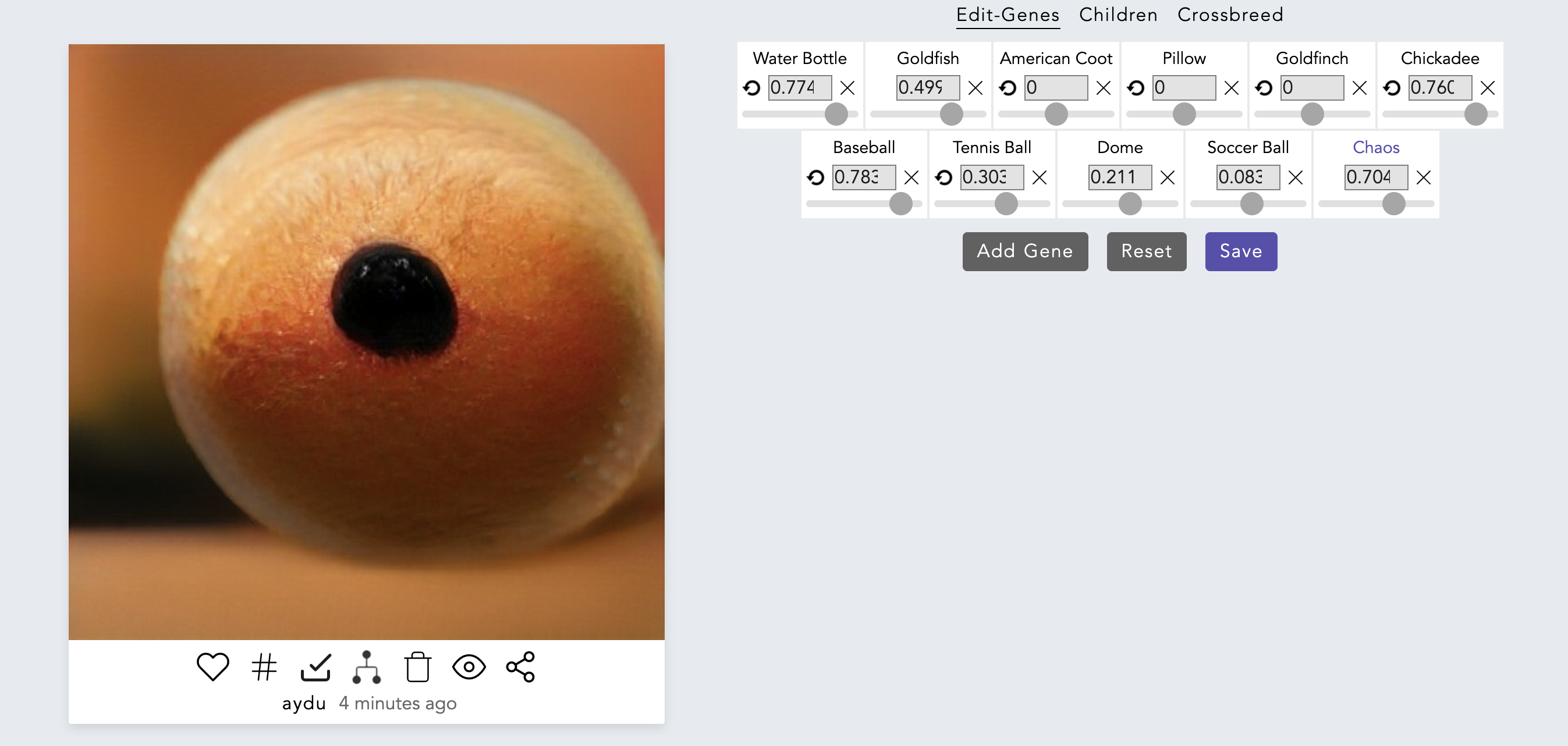

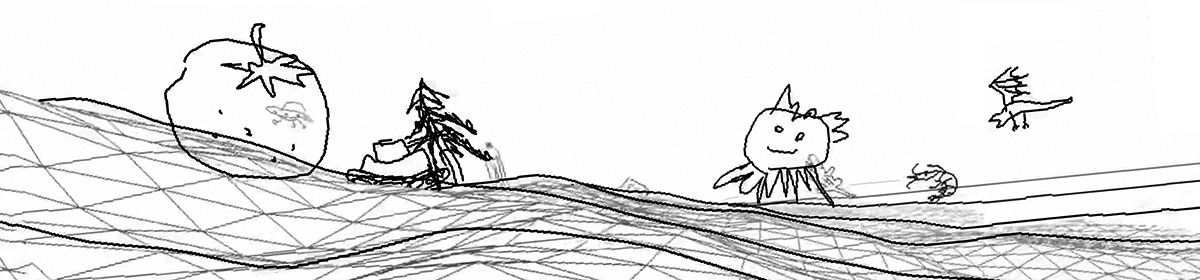

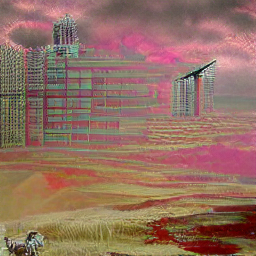

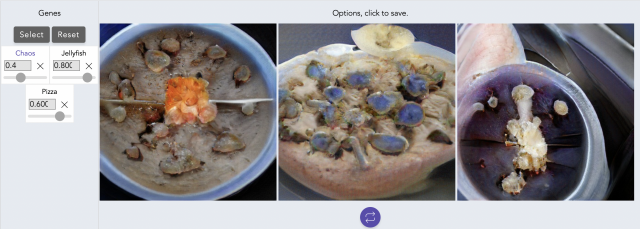

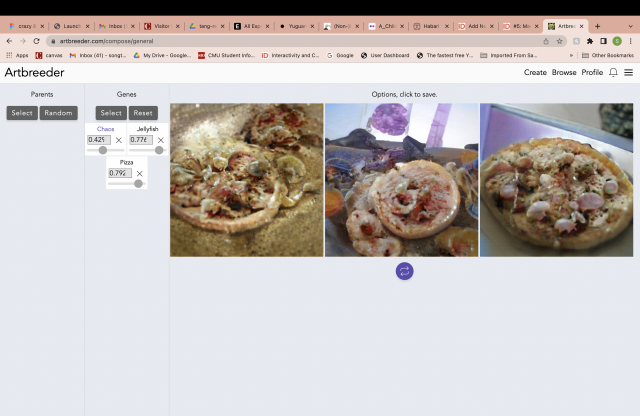

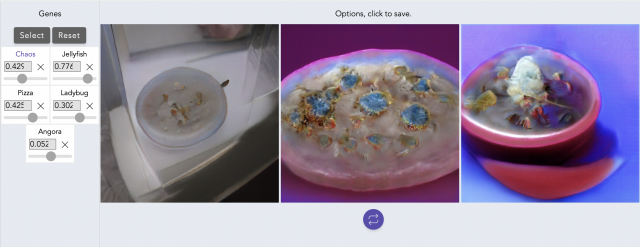

It’s actually really hard to predict the outcome based on the sliders alone. The resulting images aren’t what I was expecting, but they do show somewhat distinguishable features from the genes.
