This set of deliverables is a breather and requires no coding. The projects are due at the beginning of class on Wednesday, March 2nd.

The purpose of this week’s assignment is to familiarize you with the ‘grain of the material’ of neural image synthesis, through immersive experience using fun, lightweight readymades. There are six parts:

- Looking Outwards #03: Machine Learning Arts (30 minutes)

- 5A. Image-to-Image Translation: Pix2Pix (20 minutes)

- 5B. Image Synthesis with ArtBreeder (20 minutes)

- 5C. Text Synthesis with GPT-3 (20 minutes)

- 5D. Image Synthesis with VQGAN+CLIP (60 minutes)

- Purchase/obtain a three-button mouse! (You’ll need it for Unity next month.)

The last exercise, 5D, takes 60 minutes mostly because it involves a lot of slow downloading and slow processing; you’ll be able to do other things while the image is in the oven. If you have already used all of these tools and want a new challenge, take a look at this extended list of tools for some alternatives.

Looking Outwards #03: Machine Learning Arts

(30 minutes) Spend about 30 minutes browsing the following online showcases of projects that make use of machine learning and ‘AI’ techniques. Look beyond the first page of results! More than 700 projects are indexed across these sites.

- MLArt.co Gallery (a collection of Machine Learning experiments curated by Emil Wallner).

- AI Art Gallery (online exhibition of the 2019 NeurIPS Workshop on Machine Learning for Creativity and Design). Note: this site also hosts the exhibitions for the 2018 and 2017 conferences.

- Chrome Experiments: AI Collection (a showcase of experiments, commissioned by Google, that explore machine learning through pictures, drawings, language, and music).

Now:

- After considering a few dozen projects, select one to feature in a Looking Outwards blog post. (Restriction: You may not select a project by your professor.)

- Create a blog post on this site. Please title this blog post nickname-LookingOutwards03, and categorize the post as LookingOutwards-03.

- As usual: include an image of the project you selected, link to information about it, and write a couple of sentences describing the project and why you found it interesting.

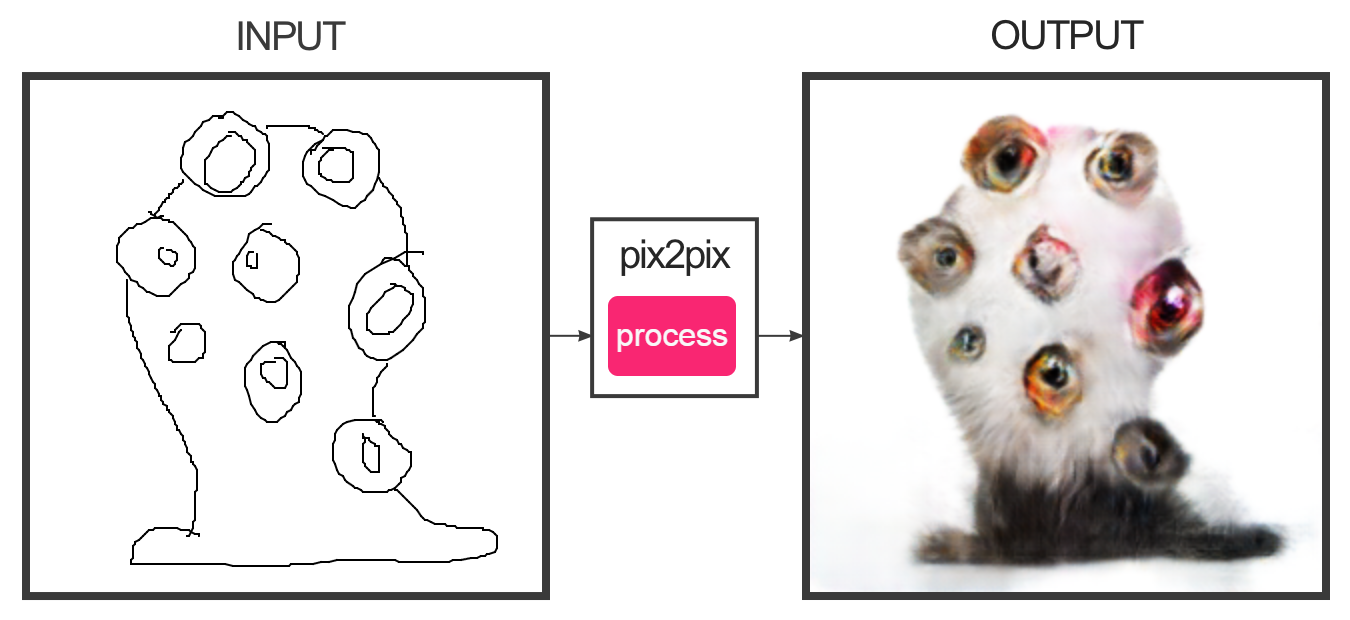

5A. Image-to-Image Translation: Pix2Pix

(20 minutes) Spend some time with the Image-to-Image (Pix2Pix) demonstration page by Christopher Hesse. Experiment with edges2cats and some of the other interactive demonstrations (such as facades, edges2shoes, etc.). You are asked to:

- Create at least 2 different designs. Screenshot your work so that the screenshots show both your input and the system’s output. Embed these screenshots into a blog post.

- Write a reflective sentence about your experience using this tool.

- Title your blog post nickname-Pix2Pix, and categorize your blog post as 05-Pix2Pix.

5B. Image Synthesis with ArtBreeder

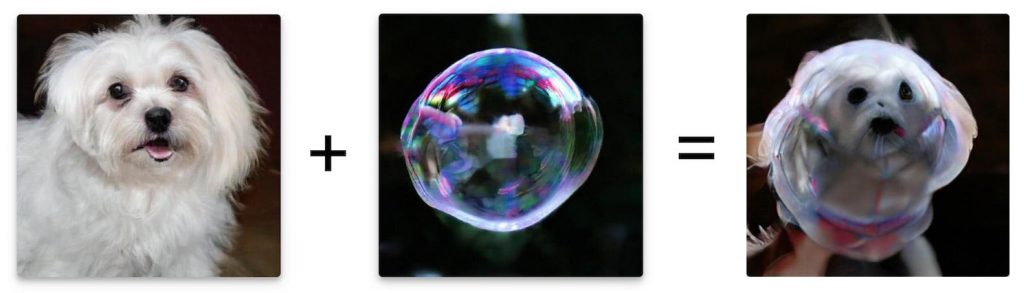

(20 minutes) ArtBreeder is an interactive and participatory machine-learning-based tool developed by CMU BCSA alumnus Joel Simon. It provides ways of ‘breeding’ images together, along with sliders for adjusting the influence of the high-level concepts that contribute to them.

- If you have used ArtBreeder before, please try a different tool instead, such as Joel’s new project, ProsePainter.

- Create an account (or login via Google) on ArtBreeder.

- Spend 15-20 minutes tinkering and zoning out with ArtBreeder. Please use ArtBreeder’s “General” model (the dogs etc. — not the faces, please) to breed (create) an image.

- Embed your results in a blog post. Title your blog post nickname-ArtBreeder, and categorize it as 05-ArtBreeder.

- In your blog post, write a reflective sentence or two about your experience using this tool.

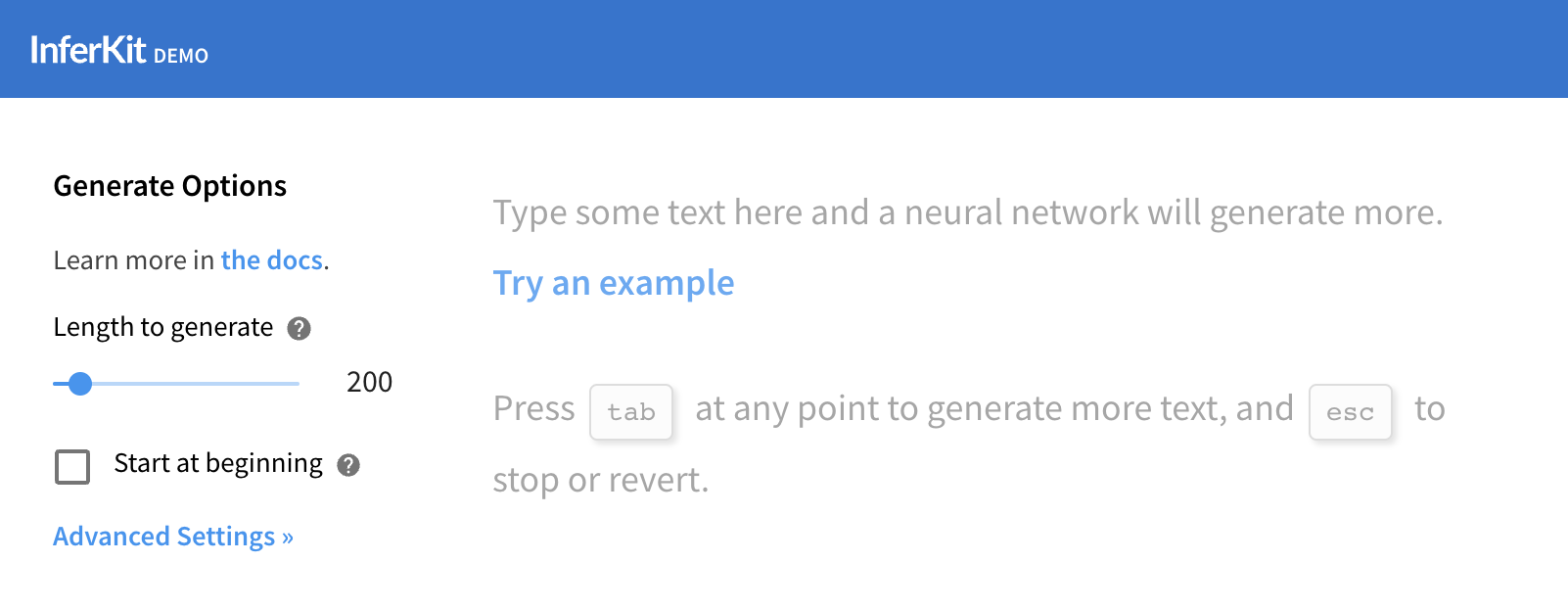

5C. Text Synthesis with GPT-3

(20 minutes) In this exercise, you will use some browser-based interfaces to OpenAI’s GPT-3 language model in order to generate samples of text that interest you. In particular, experiment with each of these tools until you produce two text fragments that you find interesting:

- Try the InferKit tool by Adam King

- Try the Narrative Device by Rodolfo Ocampo

Now:

- Embed your two text experiments in a blog post. Use boldface to indicate which words were provided by you as inputs to the system.

- Title your blog post nickname-TextSynthesis, and categorize your blog post with the WordPress category, 05-TextSynthesis.

- In your blog post, write a reflective sentence or two about your experience using these tools.

5D. Image Synthesis with VQGAN+CLIP

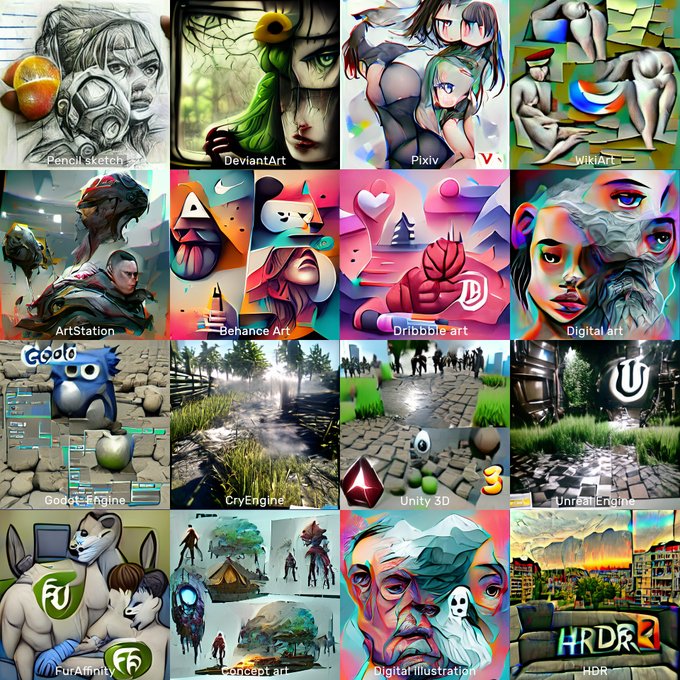

(60 minutes) In this exercise, you will experiment with ML-based tools for synthesizing images from text prompts, such as VQGAN+CLIP. Above: “Google Soup” by @0xCrung.

First: spend at least 10 minutes browsing work made this way. The main Twitter account reposting this work is @images_ai, but other strong portfolios can be seen on the Twitter accounts of @RiversHaveWings, @advadnoun, @0xCrung, and @quasimondo. If you like this work a lot, you may also wish to check out @unltd_dream_co, @Somnai_dreams, @thelemuet, and @aicrumb.

We will be using a Google Colab notebook for VQGAN+CLIP synthesis originally developed by Katherine Crowson (@RiversHaveWings) and extended by @somewheresy. Follow all the steps and it should work for you, though it will take a loooong time to load. If you don’t have Colab Pro, you may need to use a smaller image size; I got it to work well with dimensions of 256×256 and 384×216. I’ll show how to use Colab briefly in class on Monday, but in case you miss this, there are lots of almost-relevant tutorials online. Here are a couple of images I made, with the prompt “magical toad sandwiches”:

You may also find it helpful to use what Kate Compton has called “seasonings” (discussed in this Twitter thread): extra phrases and hashtags (like “trending on ArtStation”) that hint at and inflect the image synthesis in interesting ways.

Now:

- Synthesize an image using this Google Colab notebook. (If the notebook fails for you, use the Pixray readymade instead, but note: in the long run, I do think it’s important for you to get experience using Colab.)

- Embed the image you synthesized in a blog post, along with the textual prompt that generated it.

- Title your blog post nickname-VQGAN+CLIP, and categorize your blog post with the WordPress category, 05-VQGAN+CLIP.

- In your blog post, write a reflective sentence or two about your experience using these tools.