I started this project pretty late, so I knew I had to stick with something simple. I had a few ideas about making filters of School of Drama professors and their iconic caricatures, but I don’t really like them and hate the idea of idolizing them. Plus, it wouldn’t really land well with our class, who don’t know them. I was pretty set on doing a filter, since I had a feeling it’d be simple, so I thought a little longer and landed on our adorable scotty dog mascot, keeping with the Carnegie Mellon theme I originally had!

Since I really want to learn how to make games (and also needed to add some complexity to a very simple idea), I added a little start screen and some bagpipe background music (that ended up stuck in my head for like a whole freaking day).

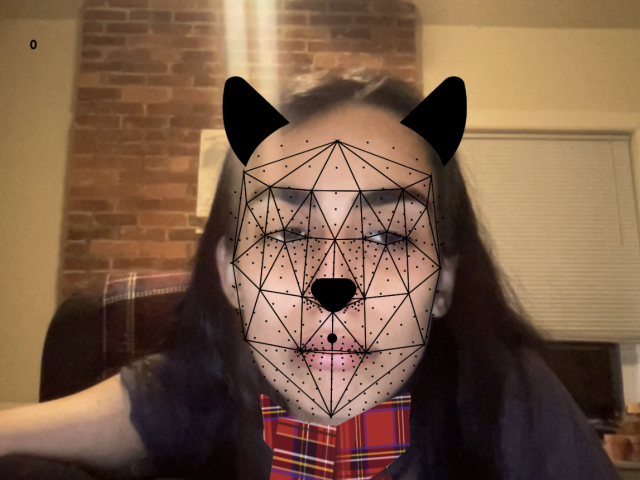

I also decided to add a bark sound every time the user opened their mouth, which I accomplished by calculating the distance between a point on the top lip and a point on the bottom lip. That’s definitely my favorite part of the project now.
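Here’s a minimal sketch of that lip-distance idea in Python. It isn’t the project’s actual code: the landmark coordinates, the 20-pixel threshold, and the names `mouth_gap` and `BarkTrigger` are all made up for illustration. The "only bark when the mouth goes from closed to open" part is also an assumption, added so the sound wouldn’t restart on every frame.

```python
import math


def mouth_gap(top_lip, bottom_lip):
    """Distance in pixels between a top-lip point and a bottom-lip point."""
    return math.dist(top_lip, bottom_lip)


class BarkTrigger:
    """Fires once each time the mouth goes from closed to open."""

    def __init__(self, open_threshold=20.0):
        # Hypothetical threshold in pixels; would need tuning by hand.
        self.open_threshold = open_threshold
        self.was_open = False

    def update(self, top_lip, bottom_lip):
        is_open = mouth_gap(top_lip, bottom_lip) > self.open_threshold
        should_bark = is_open and not self.was_open
        self.was_open = is_open
        return should_bark
```

Calling `update` once per frame with the two lip points returns `True` only on the frame where the mouth first opens, which is when the bark sound would play.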

Something I didn’t think about, and therefore did not give myself enough time to implement, was how to scale the filter based on how far the user is from the screen. Also, if they turn their head, the filter does not rotate with them. When I got the feeling something was wrong, I opened Snapchat and experimented with their filters, seeing how they scaled and rotated perfectly! That’s definitely where this project has fallen short, and I wish I had started earlier to give myself that time.
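One common way to get scale and rotation is to look at the two eye landmarks, sketched below in Python. This is an assumption about how it could work, not how Snapchat does it and not something this project implements. The function name `filter_transform` and the `reference_eye_distance` value are hypothetical, and it only handles tilting the head side to side, not turning it left or right.

```python
import math


def filter_transform(left_eye, right_eye, reference_eye_distance=100.0):
    """Return (scale, angle_in_radians, center) for drawing the filter.

    reference_eye_distance is a hypothetical value: the eye spacing in
    pixels at which the filter artwork should be drawn at its normal size.
    """
    dx = right_eye[0] - left_eye[0]
    dy = right_eye[1] - left_eye[1]

    # Eyes look farther apart when the face is closer to the camera,
    # so their spacing works as a stand-in for distance to the screen.
    scale = math.hypot(dx, dy) / reference_eye_distance

    # Angle of the line between the eyes gives the head tilt.
    angle = math.atan2(dy, dx)

    # Midpoint between the eyes is a stable spot to anchor the filter.
    center = ((left_eye[0] + right_eye[0]) / 2, (left_eye[1] + right_eye[1]) / 2)
    return scale, angle, center
```

With the eyes level and 100 pixels apart this gives a scale of 1 and an angle of 0; as the face moves closer the scale grows, and as the head tilts the angle follows the line between the eyes.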

Here is an early process photo where my scotty dog kind of looked more like a cat than a dog…