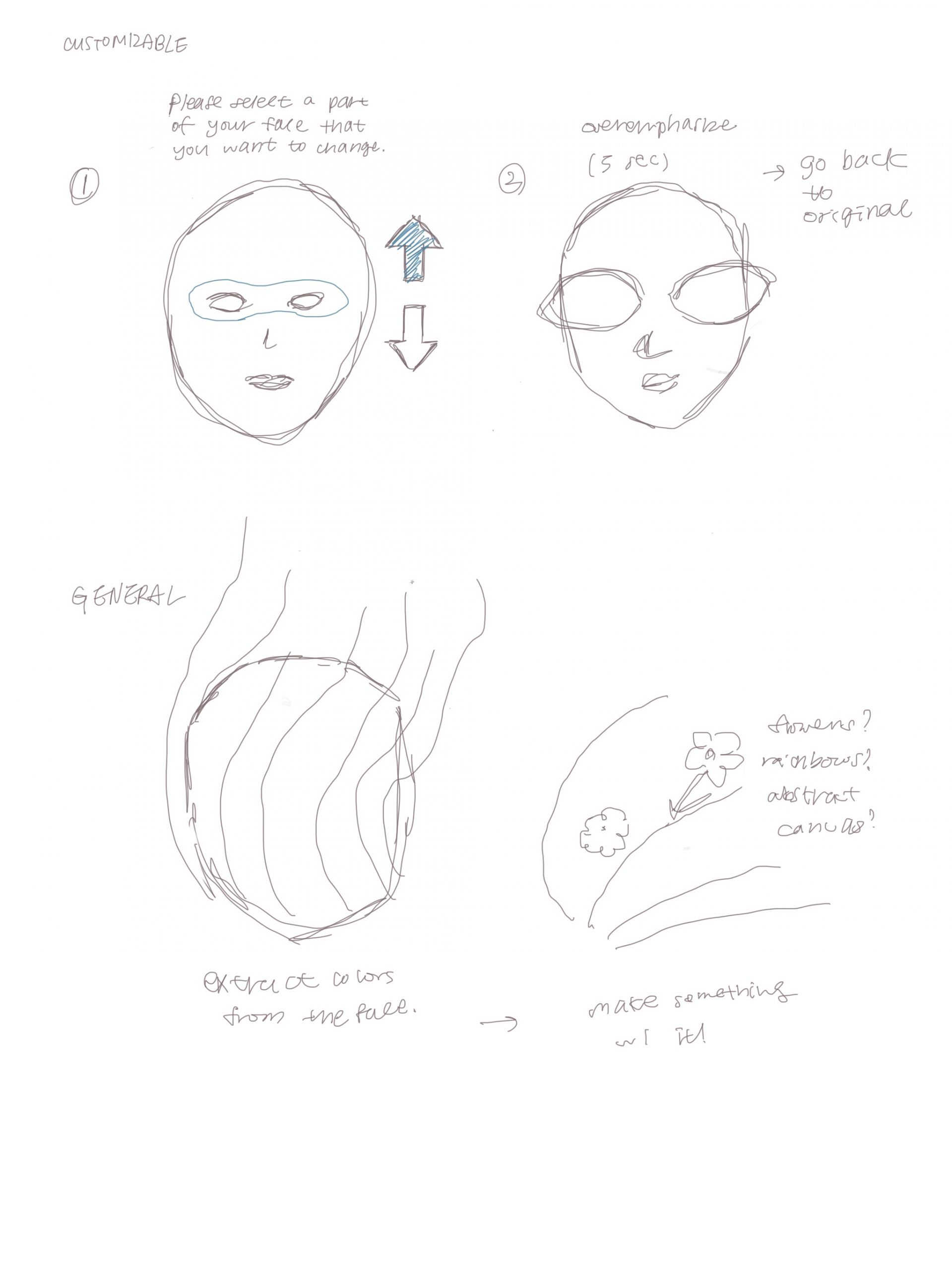

This final project is an attempt at using the Looking Glass HoloPlay Unity plugin to create an interactive animation/mini installation. The animation features a character I originally made for an earlier project (found here) using Google's Tilt Brush application, which I then exported into Blender to rig and keyframe.

My concept was to create a story where the Looking Glass was a sort of window into a box that the character was trapped in. It sulks in the box alone until the viewer interacts with it by pressing a button. This causes the character to notice the viewer and try to jump out and attack them, only to be stopped by the constraints of the box.
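The interaction described above boils down to a two-state machine: idle until a button press, then a reaction. Here is a rough, engine-agnostic sketch of that logic in Python. It is not the actual Unity setup (which isn't shown in this post), and the names `CharacterController`, `press_button`, `update`, and `reaction_duration` are all hypothetical. It also assumes the character goes back to idle once the reaction clip finishes, and that button presses during the reaction are ignored.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()      # character sulks in the box
    REACTION = auto()  # character notices the viewer and lunges


class CharacterController:
    """Hypothetical two-state controller: idle until a button press,
    play the reaction once, then fall back to idle (an assumption)."""

    def __init__(self, reaction_duration: float):
        # Length of the reaction clip in seconds (illustrative value only).
        self.reaction_duration = reaction_duration
        self.state = State.IDLE
        self._elapsed = 0.0

    def press_button(self) -> None:
        # Only start the reaction from idle; presses during the
        # reaction are ignored so the clip is not restarted.
        if self.state is State.IDLE:
            self.state = State.REACTION
            self._elapsed = 0.0

    def update(self, dt: float) -> State:
        # Called once per frame with the time step in seconds.
        if self.state is State.REACTION:
            self._elapsed += dt
            if self._elapsed >= self.reaction_duration:
                self.state = State.IDLE
        return self.state


if __name__ == "__main__":
    controller = CharacterController(reaction_duration=2.0)
    controller.press_button()
    for _ in range(5):
        print(controller.update(0.5).name)
```

In Unity itself, this kind of transition would more likely live in an Animator state machine driven by a trigger parameter; the sketch only illustrates the behavior.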

To break it down, I needed one idle animation and one "reaction" animation that would play on the button press. While working toward these, I made a preliminary walk cycle animation:

To give it more character, I added some random twitching/jerking:

I did these animations as practice, but also because I thought I would use them in my final project as part of my idle animation. I tried using Blender's Action Editor, NLA Editor, etc. to piece together multiple animations, but the result didn't come out the way I wanted.

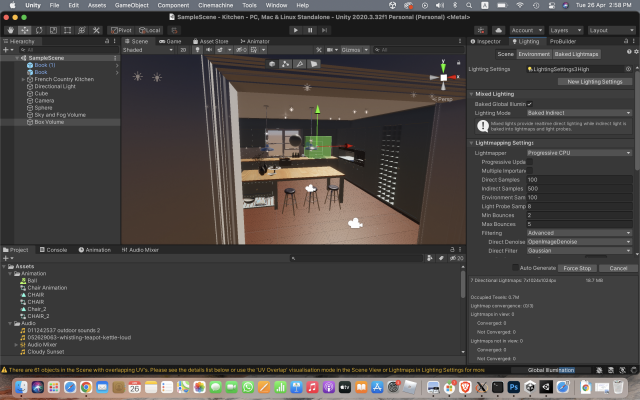

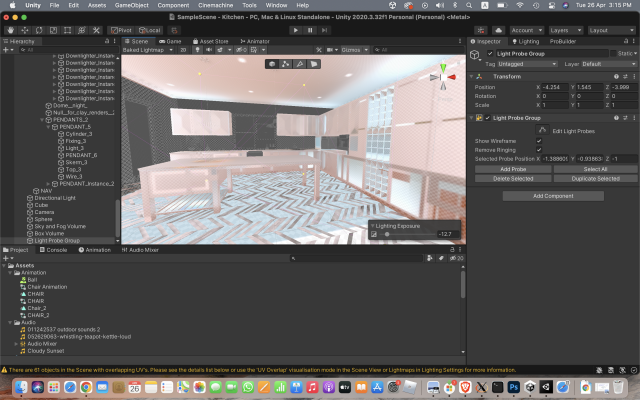

In the end, I was able to make an idle animation and a reaction animation that I liked and that worked in Unity, so the only thing left was to put them on the Looking Glass. However, I ran into an issue immediately:

[Error] Client access error (code = hpc_CLIERR_PIPEERROR): Interprocess pipe broken. Check if HoloPlay Service is still running!

Looking up this issue led me to delete and reinstall the HoloPlay plugin, restart Unity, duplicate my project, delete certain files from my computer, and try many other things that others suggested online. However, nothing worked. I did see a comment from someone who I believe worked on the Looking Glass, who said that they would be fixing this problem in a new update.

Without the Looking Glass, here is my final project:

https://youtube.com/shorts/MKlnXfXNzsc?feature=share

Just the idle state:

Just the reaction: