The main creator I have been looking at as I iron out the kinks of Unity is the YouTuber Sebastian Lague. He has a ton of videos that detail how he creates small but interesting projects in Unity, such as a flight simulator that uses real-world Earth data (such as Earth depth data to create a normal map for the globe model), or a barebones yet interconnected simulated ecosystem. I found his series on creating procedural plants particularly helpful. I don’t think any of these projects would necessarily be super engaging to play or are super artistically interesting, but I don’t think they are really meant to be. I think they function well to highlight what can be done in Unity and to get viewers excited to create, which is really all I need for this project. I have also been watching a few videos on crowd simulation in Unity because I have a few ideas on how I can use a lot of AI agents in my project. This has been useful, but most videos detail projects that are probably more complex than I’ll need.

Category: 08-FinalProject

bookooBread – 08FinalPhase02

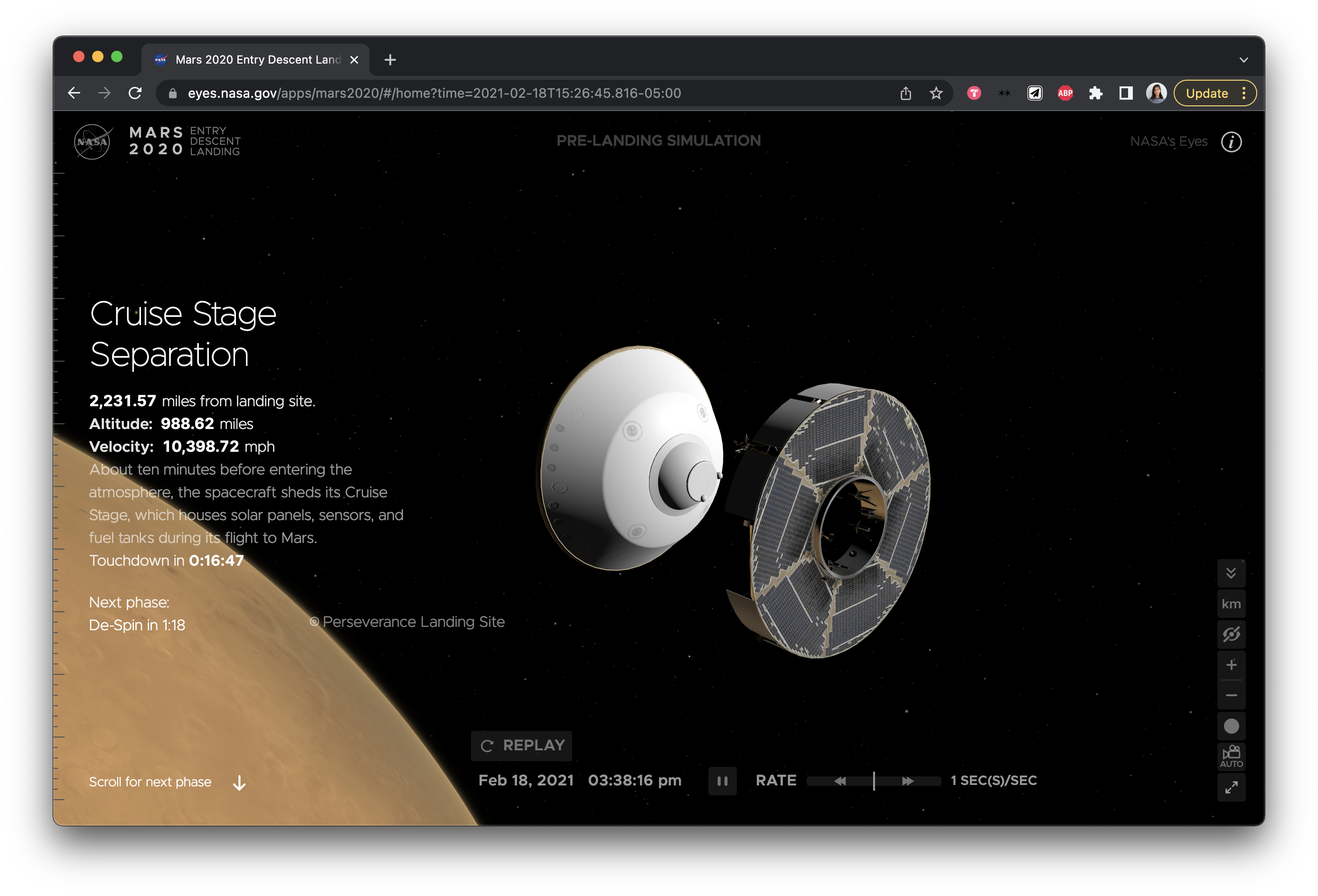

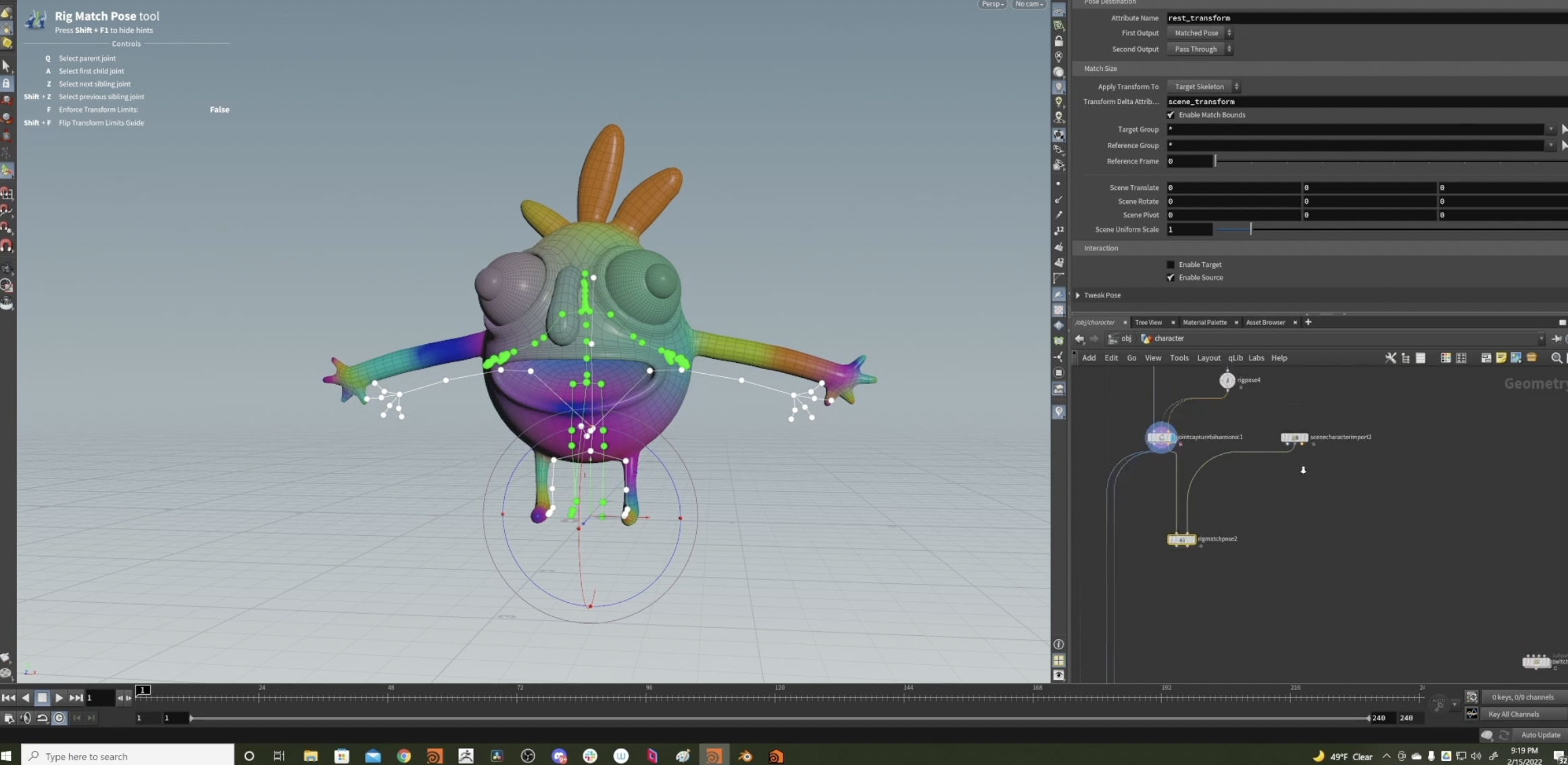

Right now I am taking a short, project-centered course taught by the animator Christopher Rutledge, who uses Houdini as his main tool for animation. The course goes through the entire character development process specific to Houdini, which covers some things I didn’t know yet, like KineFX, Houdini’s rigging toolset.

Creating a Mega Character – made in Houdini 19 for Intermediate by Christopher Rutledge at SideFX

He has also used the VR sculpting workflow for character creation.

The awesome thing about Houdini is that I can do all of the normal character development process, but also use the simulation power of Houdini to computationally make the scenes/characters themselves more interesting and fun. For example, the clip below showcases a really charming character design with added physics simulation that makes the movement that much more appealing, funny, and unexpected.

View this post on Instagram

Another resource I use HEAVILY is Entagma. Their tutorials are incredible. Here is an example of an effect I could create to add onto a character.

View this post on Instagram

starry – FinalPhase02

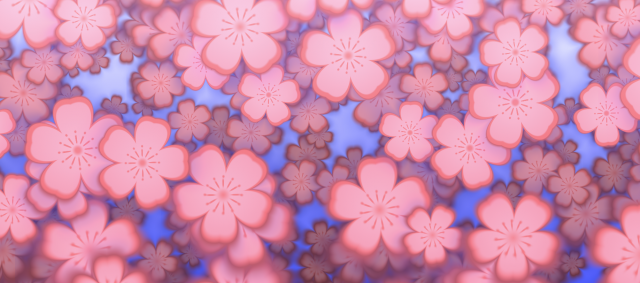

Relevant media – I’m interested in how to explore generativity within nature and how to achieve that using shaders. While the following results most likely aren’t achievable given my current knowledge and time frame of the project, I thought it was relevant to what I want to explore.

Technologies and techniques – I’m still unsure whether to pursue only learning GLSL or learning HLSL + Unity, so I included resources for both.

Freya Holmer – Shaders for Game Devs series – I’ve tried to watch this for the past year and I haven’t gotten past the first video

starry – FinalPhase1

I’m interested in learning how to write shaders as well as creating an immersive world using Unity and Blender. My three ideas are either one or the other or a combination of both. I’m not sure if I want to go with writing shaders in Unity, however, since it might be too difficult to tackle both learning HLSL and getting used to Unity.

Inspirational Projects & Useful Technologies

Scan of the Month features CT scans of common products, using 3D rendering + scrolling to highlight different components and stories behind their manufacture. It uses Webflow + Lottie but I think something like this would work well in three.js.

The NYTimes is naturally a source of inspiration, and they have done some helpful write-ups + demos using three.js and their own (public) libraries for loading 3D tiles and controlling stories with three.js.

More cool things made with three.js:

CrispySalmon-FinalPhase2.

- Project overview

- I want to train my own GAN with images from this Instagram account: subwayhands.

- Related work

- GANshare: Creating and Curating Art with AI for Fun and Profit

- Kishi Yuma: GAN trained on pictures of hands

- Mario Klingemann: Turn finger drawings into a GAN generated image

- Technical Implementation:

- Instagram Scraping Tools/Tutorials

- Apify – Instagram Scraper: I played around with this tool for a bit. Overall, it’s really easy to use. Pros: fast, easy, I don’t need to do much other than inputting the Instagram page link. Cons: It scrapes a lot of information, some of which is irrelevant to me, so I have to filter/clean up the output file. It also doesn’t directly output the pictures; instead, it provides the display URL for each image, and the URL expires after a certain amount of time.

- GitHub – Instagram scraper :

- There is a YouTube tutorial showing how to use this.

- I simply could not get this one to work on my laptop, though it seems to work for others. Need to play around with this a bit more.

- Python library – BeautifulSoup:

- Stack Overflow post

- Will need to parse the rendered page with something like Selenium.

- Alternative method: Manually screenshot pictures

- Although this is boring, repetitive work, it gives me more control over which pictures I use to train my GAN.

- Pix2Pix: If time allows, I want to look into using a webcam to record hand posture and generate images based on realtime camera input.

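The cleanup step described for the scraper output above could look something like the sketch below. It is only an illustration under stated assumptions: the field name `displayUrl` and the sample records are guesses at what an export might contain, and `extract_display_urls` and `download_images` are hypothetical helper names, not part of any scraping tool. The idea is to keep only the image links, drop duplicates, and download them right away, since the links expire.

```python
import json
import urllib.request
from pathlib import Path


def extract_display_urls(records, url_key="displayUrl"):
    """Return the unique image URLs from scraper records, in first-seen order.

    `url_key` is an assumed field name; adjust it to match the real export.
    """
    seen = set()
    urls = []
    for record in records:
        url = record.get(url_key)
        if url and url not in seen:
            seen.add(url)
            urls.append(url)
    return urls


def download_images(urls, out_dir="subwayhands"):
    """Save every URL into out_dir immediately, before the links expire."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    for i, url in enumerate(urls):
        urllib.request.urlretrieve(url, str(out / f"{i:05d}.jpg"))


# Made-up stand-in for an exported JSON file; real exports carry more fields.
sample = json.loads("""[
  {"displayUrl": "https://example.com/a.jpg", "caption": "x", "likesCount": 3},
  {"displayUrl": "https://example.com/b.jpg", "caption": "y"},
  {"displayUrl": "https://example.com/a.jpg", "caption": "duplicate"},
  {"caption": "no image field"}
]""")

urls = extract_display_urls(sample)
print(urls)
```

With a real export, the records would come from `json.load` on the output file, and `download_images(urls)` would be called right after filtering.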

Sneeze-FinalPhase2

Because I am finishing up previous projects, this post will be about telematic experiences. I want to make a simple game or communication method for friends online, so I looked at some apps and websites that I enjoy playing or using with my friends.

One game that me and my friends played a lot during quarantine was Drawphone. Basically, each person in the group draws a prompt given by another person in the group and it continues like the game telephone. A simple drawing game like this brings a lot of joy and laughter, which is something I want to emulate.

Also, I took some inspiration from upperclassmen in design who created a website/application called Pixel Push. The project allows groups of friends to draw together using their webcams as the paintbrush. They used simple tools like HTML, p5.js, Socket.io, and Google Teachable Machine to achieve the end result. These are technologies we learned about in class, so I didn’t have to research them.

kong-FinalPhase2

Inspirational Work

I find the textures used in Alpha’s work to be inspirational–and how the fluid yet superficial texture flows out of the eyes. I also noticed that the fluid sometimes disappears into the space and sometimes flows down along the surface of the face. It seems to differ depending on the angle of the face, and I wonder how Alpha achieves such an effect.

I found this project captivating as it had similar aspects to my initial ideas in that it places patches of colors on the face (it reminded me of how paint acts in water). Further, this filter was created using Snap Lens Studio, which led me to recognize the significant capabilities of this tool.

Snap Lens Studio

To implement my ideas, I’m planning on utilizing Snap Lens Studio, which I was able to find plenty of YouTube tutorials for. Though I will have to constantly refer back to the tutorials throughout my process, I was able to learn the basics of Snap Lens Studio, how to alter the background, and how to place face masks by looking through the tutorials. I also found a tutorial regarding the material editor that Aaron Jablonski referred to in his post. I believe this feature would allow me to explore and incorporate various textures into my work.

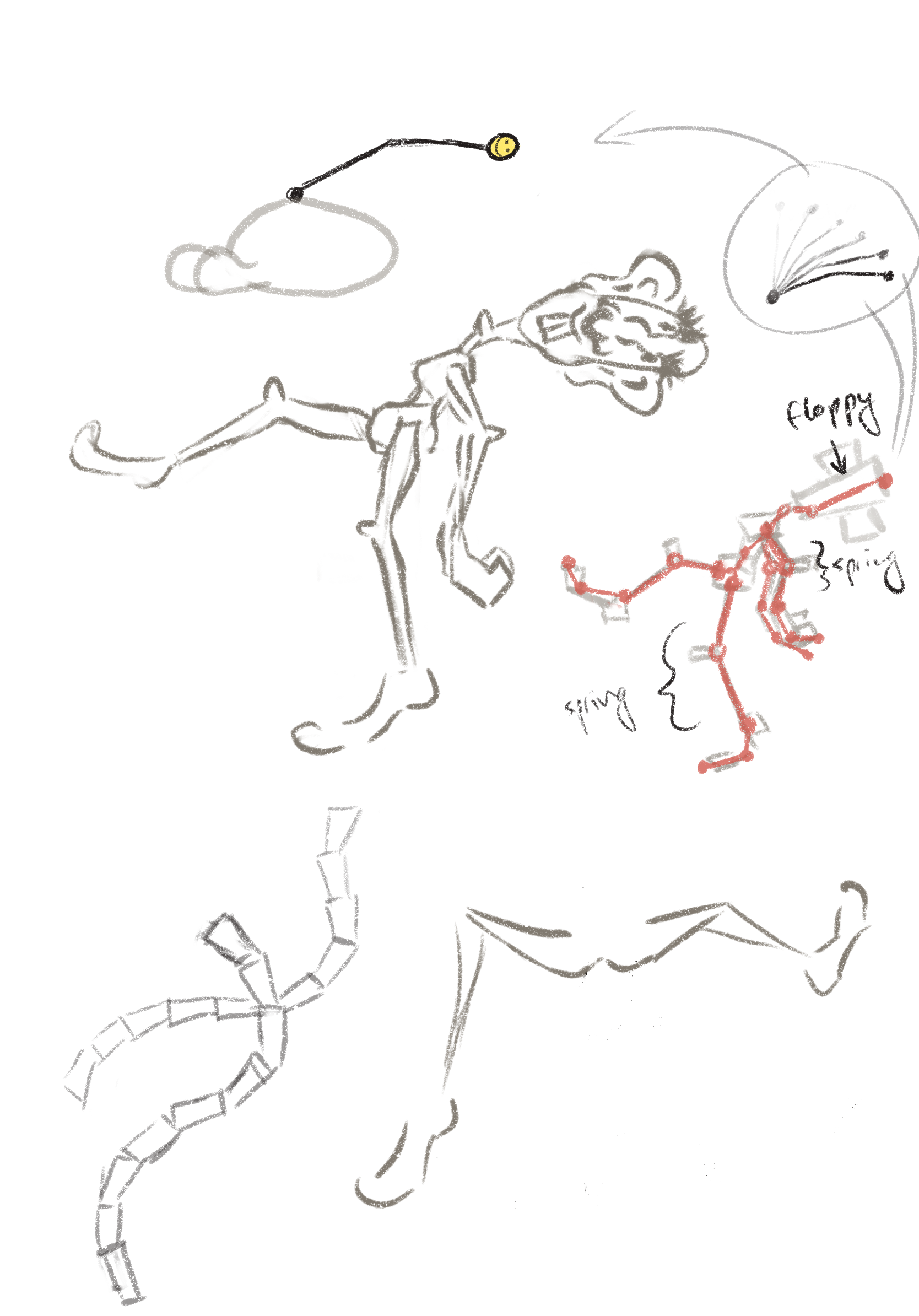

bookooBread – 08FinalPhase1

For my final project, I am going to use Houdini to make a very short animated scene that involves a character. I hope to use the VR headset as well to be able to quickly sculpt some 3D characters that I can use in the short. I haven’t completely decided yet if I want to bring my Houdini stuff into Unity to make it an interactive project or simply render it in Houdini (maybe both?). I think the most important thing I want to focus on with this project is just having fun. I’m thinking I’ll end up with something kinda goofy, but we’ll see. At the moment, I want to repurpose an idea I came up with earlier in the semester for this class and make something fun out of it. It will involve using a lot of softbody, springy simulation. I’m not completely sure, though, if I’m set on this idea.

hunan-FinalPhase1

I’m thinking about making a transformation pipeline that can turn a set of GIS data into a virtual scene, not too different from procedurally generated scenes in games. This will consist of two parts: a transformation that is only done once to generate an intermediate set of data from the raw dataset, and another set of code that can render the intermediate data into an interactive scene. I’ll call them stage 1 and stage 2.

To account for the indeterministic nature of my schedule, I want to plan different steps and possible pathways, which are listed below.

Stage 1:

- Using Google Earth’s GIS API, start with the basic terrain information for a piece of land and sort out all the basic stuff (reading data into Python, Python libs for mesh processing, output format, etc.) and test out some possibilities for the transformation.

- Start to incorporate the RGB info into the elevation info. See what I can do with the new data.

- Find some LiDAR datasets if point clouds can give me more options.
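As a rough illustration of what the first stage 1 step might produce, the sketch below turns a small grid of elevation values into a triangle mesh and serializes it as an intermediate file for stage 2. Everything here is an assumption made for the example: the elevation values are made up, `grid_to_mesh` and `to_intermediate_json` are hypothetical names, and the JSON layout (`vertices` plus `faces`) is just one possible intermediate format.

```python
import json


def grid_to_mesh(elevation, cell_size=1.0):
    """Turn a 2D grid of heights into vertices and triangle faces.

    Each grid sample becomes one vertex (x, height, z); each grid cell
    is split into two triangles that index into the vertex list.
    """
    rows, cols = len(elevation), len(elevation[0])
    vertices = [
        (c * cell_size, elevation[r][c], r * cell_size)
        for r in range(rows)
        for c in range(cols)
    ]
    faces = []
    for r in range(rows - 1):
        for c in range(cols - 1):
            i = r * cols + c  # vertex index of this cell's first corner
            faces.append((i, i + cols, i + 1))
            faces.append((i + 1, i + cols, i + cols + 1))
    return vertices, faces


def to_intermediate_json(vertices, faces):
    """Serialize the mesh; this layout is an assumed intermediate format."""
    return json.dumps({"vertices": vertices, "faces": faces})


# Made-up 2 x 3 elevation grid standing in for real terrain data.
elevation = [
    [0.0, 1.0, 0.5],
    [0.2, 1.5, 0.7],
]
vertices, faces = grid_to_mesh(elevation, cell_size=10.0)
intermediate = to_intermediate_json(vertices, faces)
print(len(vertices), "vertices,", len(faces), "faces")
```

Keeping the intermediate data as plain vertex and index lists means the stage 2 renderer only has to load the file, whichever engine ends up being used.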

Stage 2:

- Just take the code I used for SkyScape (the one with 80k ice particles) and modify it to work with the intermediate format instead of random data.

- Make it look prettier by using different meshes, movements, and post-processing effects that work with the overall concept.

- Using something like oimo.js or enable3D, add physics to the scene to allow for more user interactions and more variability in the scene.

- Enhance user interaction by improving camera control, polishing the motions, adding extra interaction possibilities, etc.

- (If I have substantial time or if Unity is just a better option for this) Learn Unity and implement the above using Unity instead.

I’ll start with modifying the SkyScape code to work with a terrain mesh, which would give me a working product quickly, and go from there.