```javascript
// Elizabeth Wang
// Section E
// elizabew@andrew.cmu.edu
// Project 11

var myTurtle = [];     // stationary "star" turtles, one per mouse press
var movingTurtle = []; // moving "bat"/fog turtles, one per mouse-drag event

var d = 100; // right-turn angle in degrees; grows every step so the moving turtles keep wandering
var dl = 50; // left-turn angle in degrees; also grows every step

function setup() {
  createCanvas(480, 480);
  background(32, 50, 103);
  frameRate(10);
  // background() is only called here, so every turtle's trail stays on screen
}

function draw() {
  for (var i = 0; i < movingTurtle.length; i++) {
    // moving "bats": the blue channel follows mouseX, so the fog changes tone
    movingTurtle[i].setColor(color(25, 61, mouseX));
    movingTurtle[i].setWeight(0.5);
    movingTurtle[i].penDown();
    movingTurtle[i].forward(10);
    movingTurtle[i].right(d);
    movingTurtle[i].forward(2);
    movingTurtle[i].left(dl);
    movingTurtle[i].forward(15);

    // d and dl are shared by all moving turtles and change after each one,
    // which makes the turtles keep moving around the canvas
    d = d + 5;
    dl = dl + 2;
  }

  for (var i = 0; i < myTurtle.length; i++) {
    // flower/star shape: one arm is added per frame
    myTurtle[i].setColor(color(255, 248, 193));
    myTurtle[i].setWeight(2);
    myTurtle[i].penDown();
    myTurtle[i].forward(20);
    myTurtle[i].right(90);
    myTurtle[i].forward(40);
    myTurtle[i].right(45);

    if (i % 10 == 0) {
      // every 10th star (indices 0, 10, 20, ...) gets a longer gold arm
      myTurtle[i].setColor(color(255, 224, 9));
      myTurtle[i].forward(50);
      myTurtle[i].left(180);
    }
  }
}

function mousePressed() {
  myTurtle.push(makeTurtle(mouseX, mouseY)); // a star appears where the mouse is pressed
}

function mouseDragged() {
  movingTurtle.push(makeTurtle(mouseX, mouseY)); // a new moving turtle is added at each point along the drag
}

// ---- Turtle helpers: each function is attached to a turtle object by makeTurtle() ----

function turtleLeft(d) {
  this.angle -= d; // turn counterclockwise on screen by d degrees
}

function turtleRight(d) {
  this.angle += d; // turn clockwise on screen by d degrees
}

function turtleForward(p) {
  // move p pixels along the current heading
  var rad = radians(this.angle);
  var newx = this.x + cos(rad) * p;
  var newy = this.y + sin(rad) * p;
  this.goto(newx, newy);
}

function turtleBack(p) {
  this.forward(-p);
}

function turtlePenDown() {
  this.penIsDown = true;
}

function turtlePenUp() {
  this.penIsDown = false;
}

function turtleGoTo(x, y) {
  // draw a line to (x, y) if the pen is down, then move there
  if (this.penIsDown) {
    stroke(this.color);
    strokeWeight(this.weight);
    line(this.x, this.y, x, y);
  }
  this.x = x;
  this.y = y;
}

function turtleDistTo(x, y) {
  return sqrt(sq(this.x - x) + sq(this.y - y));
}

function turtleAngleTo(x, y) {
  // angle in degrees from the turtle's heading to (x, y), normalized to [0, 360)
  // even when this.angle has grown past 360 (as it does for every turtle here)
  var absAngle = degrees(atan2(y - this.y, x - this.x));
  var angle = (((absAngle - this.angle) % 360) + 360) % 360;
  return angle;
}

function turtleTurnToward(x, y, d) {
  // turn d degrees toward (x, y), choosing the shorter direction
  var angle = this.angleTo(x, y);
  if (angle < 180) {
    this.angle += d;
  } else {
    this.angle -= d;
  }
}

function turtleSetColor(c) {
  this.color = c;
}

function turtleSetWeight(w) {
  this.weight = w;
}

function turtleFace(angle) {
  this.angle = angle;
}

function makeTurtle(tx, ty) {
  var turtle = {
    x: tx, y: ty,
    angle: 0.0,
    penIsDown: true,
    color: color(128),
    weight: 1,
    left: turtleLeft, right: turtleRight,
    forward: turtleForward, back: turtleBack,
    penDown: turtlePenDown, penUp: turtlePenUp,
    goto: turtleGoTo, angleTo: turtleAngleTo,
    turnToward: turtleTurnToward,
    distanceTo: turtleDistTo,
    setColor: turtleSetColor, setWeight: turtleSetWeight,
    face: turtleFace
  };
  return turtle;
}
```
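The star routine in `draw()` adds one arm per frame and never resets the turtle, so it is worth checking whether the shape ever closes. The sketch below is a hedged, headless model, not part of the project: `makeHeadlessTurtle`, `smallStarFrame`, `largeStarFrame`, and `trace` are hypothetical names, and the turtle only reuses the heading math of `turtleForward`, `turtleRight`, and `turtleLeft` (with `Math.cos`/`Math.sin` in place of p5's `cos`/`sin`) without drawing anything. Under that model, the small star turns 135° per frame and the large star turns a net -45° per frame, so each arm direction cycles through all eight multiples of 45° and both stars return to their starting point and heading after 8 frames (0.8 seconds at `frameRate(10)`), then retrace the same 8-armed outline.

```javascript
// Headless model (an assumption, not the project's code): same heading math as
// turtleForward/turtleRight/turtleLeft, but it only tracks position, no drawing.
function makeHeadlessTurtle(x, y) {
  return {
    x: x,
    y: y,
    angle: 0, // degrees; 0 points right, increasing angle turns clockwise on screen
    forward(p) {
      const rad = (this.angle * Math.PI) / 180;
      this.x += Math.cos(rad) * p;
      this.y += Math.sin(rad) * p;
    },
    right(d) { this.angle += d; },
    left(d) { this.angle -= d; },
  };
}

// One frame of the small star from draw(): forward 20, right 90, forward 40, right 45.
function smallStarFrame(t) {
  t.forward(20);
  t.right(90);
  t.forward(40);
  t.right(45);
}

// One frame of the large star (i % 10 == 0): the same moves plus forward 50, left 180.
function largeStarFrame(t) {
  smallStarFrame(t);
  t.forward(50);
  t.left(180);
}

// Run `frames` frames from (x, y); report the final position and heading in [0, 360).
function trace(frameFn, frames, x, y) {
  const t = makeHeadlessTurtle(x, y);
  for (let k = 0; k < frames; k++) {
    frameFn(t);
  }
  return { x: t.x, y: t.y, heading: ((t.angle % 360) + 360) % 360 };
}

// Both should end back at (240, 240), up to floating-point rounding, with heading 0.
console.log(trace(smallStarFrame, 8, 240, 240));
console.log(trace(largeStarFrame, 8, 240, 240));
```

Changing the turn angles in `smallStarFrame` and checking whether `trace` returns to its start is a quick way to test whether a new star pattern closes before adding it to the sketch.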

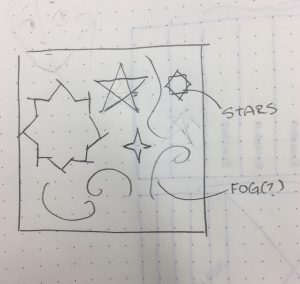

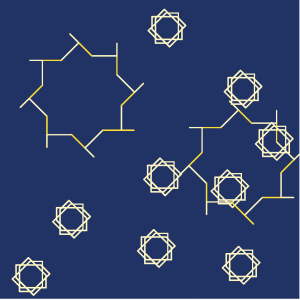

For this project, I wanted to create turtles that look like stars in the night sky, while also adding a texture that could imitate fog. I made the fog change color as well, with its blue component following the mouse's horizontal position, to loosely mirror the way fog changes tone depending on where the light source is.
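The fog color comes from `color(25, 61, mouseX)` in `draw()`. Below is a minimal sketch of that mapping in plain JavaScript so it runs without p5. It assumes p5.js clamps RGB channel values to the 0-255 range; under that assumption the fog only changes tone across the left 255 pixels of the 480-pixel canvas. `clampBlue`, `scaledBlue`, and `fogColor` are hypothetical helpers, and `scaledBlue` is an alternative not used in the project that spreads the tone across the full width, roughly what p5's `map(mouseX, 0, width, 0, 255)` would do.

```javascript
// Models the original mapping, assuming p5 clamps channel values to 0-255.
function clampBlue(mouseX) {
  return Math.min(255, Math.max(0, mouseX));
}

// Hypothetical alternative: scale mouseX across the whole canvas width so every
// horizontal position gives a distinct tone (clamped and rounded to an integer).
function scaledBlue(mouseX, canvasWidth) {
  const t = Math.min(1, Math.max(0, mouseX / canvasWidth));
  return Math.round(t * 255);
}

// RGB triple for the fog; `scaled` picks the alternative mapping.
function fogColor(mouseX, canvasWidth, scaled) {
  const blue = scaled ? scaledBlue(mouseX, canvasWidth) : clampBlue(mouseX);
  return [25, 61, blue];
}

for (const x of [0, 120, 255, 360, 480]) {
  console.log(x, fogColor(x, 480, false), fogColor(x, 480, true));
}
```

With the original mapping, x = 360 and x = 480 give the same color; the scaled version keeps changing all the way to the right edge.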

At first I drew different star patterns on paper and then transferred my two favorites into the program. I made only every tenth star large, so smaller stars appear far more often, in an effort to imitate the “North Star” with smaller stars surrounding it. I’m fairly happy with how it turned out, but if I could, it might have been fun to add more visually different stars to the mix.
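The size rule is the `i % 10 == 0` test in `draw()`, where `i` is a star's position in `myTurtle`, i.e. its click order starting from 0. This small sketch (with hypothetical helper names `isLargeStar` and `countLargeStars`) makes the frequency explicit: about one star in ten is large, and because index 0 passes the test, the very first click always produces a large star.

```javascript
// Mirrors the test in draw(): i is the star's index in myTurtle (click order from 0).
function isLargeStar(i) {
  return i % 10 === 0;
}

// Number of large stars after `clicks` presses; equals Math.ceil(clicks / 10).
function countLargeStars(clicks) {
  let large = 0;
  for (let i = 0; i < clicks; i++) {
    if (isLargeStar(i)) large++;
  }
  return large;
}

// Indices of the large stars among the first 25 clicks.
const largeIndices = [];
for (let i = 0; i < 25; i++) {
  if (isLargeStar(i)) largeIndices.push(i);
}
console.log(largeIndices); // indices 0, 10 and 20
console.log(countLargeStars(25)); // 3
```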

![[OLD FALL 2017] 15-104 • Introduction to Computing for Creative Practice](https://courses.ideate.cmu.edu/15-104/f2017/wp-content/uploads/2020/08/stop-banner.png)