
//Yugyeong Lee

//Section B

//yugyeonl@andrew.cmu.edu

//Project-10

var stars = []; //array of stars

var clouds = []; //array of clouds

var landscapes = []; //array of landscapes

var camera;

function preload() {

//load the camera overlay: first drawn in code, then given a transparent LCD area in Photoshop

var cameraImage = "https://i.imgur.com/5joEquu.png";

camera = loadImage(cameraImage);

}

function setup() {

createCanvas(480, 360);

//create initial set of stars

for (var i = 0; i < 100; i++) {

var starX = random(width);

var starY = random(3*height/4);

stars[i] = makeStars(starX, starY);

}

//create initial set of clouds

for (var i = 0; i < 4; i++) {

var cloudX = random(width);

var cloudY = random(height/2);

clouds[i] = makeClouds(cloudX, cloudY);

}

//create mountain

makeLandscape(height-100, 120, 0.0001, .0075, color(20));

//create ocean

makeLandscape(height-50, 20, 0.0001, .0005, color(42, 39, 50));

}

function draw() {

//gradient background

var from = color(24, 12, 34);

var to = color(220, 130, 142);

setGradient(0, width, from, to);

//stars

updateAndDisplayStars();

removeStars();

addStars();

//moon

makeMoon();

//clouds

updateAndDisplayClouds();

removeClouds();

addClouds();

//landscape

moveLandscape();

//reflection of moon on ocean

ellipseMode(CENTER);

fill(243, 229, 202, 90);

ellipse(3*width/4, height-50, random(50, 55), 4);

ellipse(3*width/4, height-35, random(35, 40), 4);

ellipse(3*width/4, height-26, random(25, 30), 4);

ellipse(3*width/4, height-17, random(10, 15), 4);

ellipse(3*width/4, height-8, random(35, 40), 5);

fill(204, 178, 153, 50);

ellipse(3*width/4, height-50, random(70, 80), 8);

ellipse(3*width/4, height-35, random(50, 60), 8);

ellipse(3*width/4, height-26, random(70, 80), 8);

ellipse(3*width/4, height-17, random(30, 40), 8);

ellipse(3*width/4, height-8, random(60, 70), 10);

//camera LCD display

push();

translate(65, 153);

scale(.475, .46);

var from = color(24, 12, 34);

var to = color(220, 130, 142);

setGradient(0, width, from, to);

//stars

updateAndDisplayStars();

removeStars();

addStars();

//moon

makeMoon();

//clouds

updateAndDisplayClouds();

removeClouds();

addClouds();

//landscape

moveLandscape();

//reflection

ellipseMode(CENTER);

fill(243, 229, 202, 90);

ellipse(3*width/4, height-35, random(50, 55), 6);

ellipse(3*width/4, height-28, random(35, 40), 4);

ellipse(3*width/4, height-19, random(25, 30), 4);

ellipse(3*width/4, height-10, random(10, 15), 4);

fill(204, 178, 153, 50);

ellipse(3*width/4, height-35, random(70, 80), 8);

ellipse(3*width/4, height-28, random(50, 60), 8);

ellipse(3*width/4, height-19, random(70, 80), 8);

ellipse(3*width/4, height-10, random(30, 40), 8);

pop();

//camera

image(camera, 0, 0);

//camera crosshair

noFill();

strokeWeight(.25);

stroke(235, 150);

rect(75, 163, 200, 140);

rect(85, 173, 180, 120);

line(170, 233, 180, 233);

line(175, 228, 175, 238);

//battery symbol

strokeWeight(.5);

rect(94, 279, 18.25, 6);

noStroke();

fill(235, 150);

for (var i = 0; i < 4; i++) {

rect(95+i*4.25, 280, 4, 4);

}

rect(112.25, 280, 2, 3);

//REC text

fill(235);

textSize(7);

text("REC", 245, 184);

fill(225, 100, 0);

ellipse(238, 182, 7, 7);

//camera tripod

fill(30);

rect(width/2-50, height-26, 100, 20);

fill(25);

rect(width/2-75, height-16, 150, 50, 10);

}
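The LCD view in `draw()` redraws the whole scene inside `push()` with `translate(65, 153)` followed by `scale(.475, .46)`. As a result, a point drawn at canvas coordinates (x, y) appears at (65 + 0.475x, 153 + 0.46y). The plain-JavaScript sketch below applies that affine map outside p5.js. `toLcd` and `fromLcd` are hypothetical helpers and are not part of the sketch. Under these assumptions, the 480×360 scene shrinks to roughly the rectangle from (65, 153) to (293, 318.6). That rectangle encloses the crosshair frames drawn afterward with `rect(75, 163, 200, 140)` and `rect(85, 173, 180, 120)`.

```javascript
// Affine map applied by translate(65, 153) followed by scale(0.475, 0.46):
// a point drawn at (x, y) between push() and pop() appears at (65 + 0.475x, 153 + 0.46y).
const LCD_OFFSET = { x: 65, y: 153 };
const LCD_SCALE = { x: 0.475, y: 0.46 };

function toLcd(x, y) {
  return { x: LCD_OFFSET.x + LCD_SCALE.x * x, y: LCD_OFFSET.y + LCD_SCALE.y * y };
}

// Inverse map: which full-canvas point is shown at a given LCD pixel.
function fromLcd(px, py) {
  return { x: (px - LCD_OFFSET.x) / LCD_SCALE.x, y: (py - LCD_OFFSET.y) / LCD_SCALE.y };
}

const topLeft = toLcd(0, 0); // the canvas origin lands at (65, 153)
const bottomRight = toLcd(480, 360); // about (293, 318.6)
const moonOnLcd = toLcd((3 * 480) / 4, 90); // the moon's center, about (236, 194.4)
```

The LCD block also calls `updateAndDisplayStars()`, `updateAndDisplayClouds()`, `addStars()`, and `addClouds()` a second time. Because of that, every star and cloud moves twice per frame, and new ones spawn roughly twice as often as a single pass would suggest.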

function setGradient (y, w, from, to) {

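// top-to-bottom gradient (background): one horizontal line per row from y down to the canvas bottom; the color reaches `to` only w pixels below y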

for (var i = y; i <= height; i++) {

var inter = map(i, y, y+w, 0, 1);

var c = lerpColor(from, to, inter);

stroke(c);

strokeWeight(2);

line(0, i, width, i);

}

}
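`setGradient` paints one horizontal line per row. Each line is colored with `lerpColor(from, to, inter)`, where `inter` is the row's position mapped into 0..1. The standalone sketch below reproduces that arithmetic in plain JavaScript. `mapRange`, `lerpRgb`, and `gradientRow` are hypothetical stand-ins for p5's `map` and `lerpColor`, and they assume simple per-channel linear RGB interpolation. The sketch also exposes a side effect of the call `setGradient(0, width, from, to)`. The interpolation span is the canvas width (480), but the rows stop at the canvas height (360). So the bottom row only gets 75% of the way to `to`.

```javascript
// Plain-JS stand-in for p5's map(): linearly rescale v from [a0, a1] to [b0, b1].
function mapRange(v, a0, a1, b0, b1) {
  return b0 + ((v - a0) * (b1 - b0)) / (a1 - a0);
}

// Per-channel linear RGB interpolation, assumed to match lerpColor in RGB mode.
function lerpRgb(from, to, t) {
  const amt = Math.min(Math.max(t, 0), 1); // clamp the amount to [0, 1]
  return from.map((c, k) => c + (to[k] - c) * amt);
}

// Color of canvas row `row` for a gradient that starts at y and spans w pixels.
function gradientRow(row, y, w, from, to) {
  return lerpRgb(from, to, mapRange(row, y, y + w, 0, 1));
}

const from = [24, 12, 34];
const to = [220, 130, 142];

// Same arguments as setGradient(0, width, from, to) on a 480x360 canvas.
const topRow = gradientRow(0, 0, 480, from, to); // [24, 12, 34]
const bottomRow = gradientRow(360, 0, 480, from, to); // [171, 100.5, 115], 75% of the way
```

If the full gradient is wanted, passing the canvas height as the span would make the last row reach `to` exactly. That would also visibly brighten the bottom of the scene.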

function makeMoon() {

ellipseMode(CENTER);

noStroke();

var diam = 80; //diameter of the moon

for (var i = 0; i < 30; i++) {

//glowing moonlight: each ring is 10px wider in diameter and fainter than the last; the random falloff makes the glow flicker

var value = random(7, 8);

var transparency = 50-value*i;

fill(243, 229, 202, transparency);

ellipse(3*width/4, 90, diam+10*i, diam+10*i);

}

//the moon

fill(204, 178, 153);

ellipse(3*width/4, 90, diam, diam);

}
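Each of the 30 glow rings in `makeMoon` is drawn with alpha `50 - value*i`, and `value` is re-randomized between 7 and 8 for every ring. This sketch assumes p5 treats a negative alpha as fully transparent, since it constrains color components to their valid range. Under that assumption, only the first seven or eight rings are ever visible. The plain-JavaScript sketch below counts the visible rings at the two extremes of `value`. `visibleRings` and `ringAlpha` are hypothetical helpers.

```javascript
// Count how many of makeMoon's rings get a positive alpha (50 - value * i)
// when every ring uses the same falloff `value`.
function visibleRings(value, ringCount = 30, baseAlpha = 50) {
  let count = 0;
  for (let i = 0; i < ringCount; i++) {
    if (baseAlpha - value * i > 0) count++;
  }
  return count;
}

// Alpha of ring i, clamped at 0 (assumed to match how a negative fill alpha renders).
function ringAlpha(i, value, baseAlpha = 50) {
  return Math.max(0, baseAlpha - value * i);
}

console.log(visibleRings(7), visibleRings(8)); // 8 7
```

Rings from index 8 onward always get alpha at or below 50 - 7·8 < 0. The loop could therefore stop at 8 without changing the image, which saves 22 ellipse calls per moon drawn.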

function makeLandscape(landscapeY, landscapeR, landscapeS, landscapeD, landscapeC) {

var landscape = {ly: landscapeY, //locationY

range: landscapeR, //range of how far landscape goes up

speed: landscapeS, //speed of the landscape

detail: landscapeD, //detail (how round/sharp)

color: landscapeC, //color of the landscape

draw: drawLandscape}

landscapes.push(landscape);

}

function drawLandscape() {

//generate the terrain with the provided noise-based code: detail spaces the samples along x, speed scrolls them over time

fill(this.color);

beginShape();

vertex(0, height);

for (var i = 0; i < width; i++) {

var t = (i*this.detail) + (millis()*this.speed);

var y = map(noise(t), 0,1, this.ly-this.range/2, this.ly+this.range/2);

vertex(i, y);

}

vertex(width, height);

endShape(CLOSE);

}
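`drawLandscape` samples `noise(t)` at `t = i*detail + millis()*speed` for every x pixel and maps the result into `[ly - range/2, ly + range/2]`. `detail` sets how far apart neighboring pixels sample the noise. Smaller values give smoother shapes, which is why the ocean uses .0005 and the mountain .0075. `speed` slides the sample window over time. p5's `noise()` is not available outside p5.js, so the sketch below swaps in a simple hypothetical 1D value noise. It is not p5's Perlin implementation, but it shares the properties that matter here: it is deterministic, continuous, and bounded to [0, 1].

```javascript
// Hypothetical stand-in for p5's noise(): 1D value noise with smoothstep interpolation.
function makeValueNoise(seed = 1, size = 256) {
  // Small linear congruential generator so the random lattice is reproducible.
  let state = seed >>> 0;
  const nextRandom = () => {
    state = (Math.imul(state, 1664525) + 1013904223) >>> 0;
    return state / 4294967296; // in [0, 1)
  };
  const lattice = Array.from({ length: size }, nextRandom);
  return function noise(t) {
    const i0 = Math.floor(t);
    const frac = t - i0;
    const a = lattice[((i0 % size) + size) % size];
    const b = lattice[(((i0 + 1) % size) + size) % size];
    const s = frac * frac * (3 - 2 * frac); // smoothstep easing between lattice points
    return a + (b - a) * s;
  };
}

// Same mapping as drawLandscape: noise in [0, 1] -> y in [ly - range/2, ly + range/2].
function ridgeHeights(noise, { ly, range, detail, speed }, widthPx, timeMs) {
  const ys = [];
  for (let x = 0; x < widthPx; x++) {
    const t = x * detail + timeMs * speed;
    ys.push(ly - range / 2 + noise(t) * range);
  }
  return ys;
}

const noise = makeValueNoise(42);
// Mountain parameters from setup(): makeLandscape(height-100, 120, 0.0001, .0075, ...)
const mountain = { ly: 360 - 100, range: 120, detail: 0.0075, speed: 0.0001 };
const ridge = ridgeHeights(noise, mountain, 480, 5000); // 480 y-values between 200 and 320
```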

function moveLandscape() {

//draw every landscape layer; the motion comes from millis() inside drawLandscape

for (var i = 0; i < landscapes.length; i++) landscapes[i].draw();

}

function updateAndDisplayStars() {

//update the stars' position & draw them

for (var i = 0; i < stars.length; i++) {

stars[i].move();

stars[i].draw();

}

}

function makeStars(starX, starY) {

var star = {x: starX, //locationX of star

y: starY, //locationY of star

speed: -random(0, .005), //speed of the star

move: moveStars,

draw: drawStars}

return star;

}

function drawStars() {

noStroke();

//setting transparency at random to have twinkling effect

var transparency = random(50, 200);

fill(255, transparency);

ellipse(this.x, this.y, 1.25, 1.25);

}

function moveStars() {

//move the star by updating its x position

this.x += this.speed;

}

function removeStars() {

var keepStars = []; //array of stars to keep

for (var i = 0; i < stars.length; i++) {

if (stars[i].x > 0 && stars[i].x <= width) {

keepStars.push(stars[i]);

}

}

stars = keepStars; //remember the surviving stars

}
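The range test in `removeStars` needs two comparisons joined by `&&`. JavaScript evaluates `0 < x < width` as `(0 < x) < width`. The first comparison yields `true` or `false`, which is coerced to 1 or 0, and either value is less than 480. The chained form is therefore always true, and no star is ever removed. The plain-JavaScript sketch below demonstrates this with hypothetical helpers `chainedCheck`, `isOnCanvas`, and `keepVisible`. It uses `<= width` so that a star spawned exactly at `x = width` survives.

```javascript
// JavaScript evaluates a < b < c as (a < b) < c, so this is NOT a range check.
function chainedCheck(x, width) {
  return 0 < x < width; // always true whenever width > 1
}

// A real range check: two comparisons joined with &&.
function isOnCanvas(x, width) {
  return x > 0 && x <= width;
}

// Keep only the stars whose x is still on the canvas.
function keepVisible(stars, width) {
  return stars.filter((star) => isOnCanvas(star.x, width));
}

const sample = [{ x: -3 }, { x: 120 }, { x: 600 }];
console.log(sample.map((s) => chainedCheck(s.x, 480))); // every entry is true
console.log(keepVisible(sample, 480)); // only { x: 120 } survives
```

The bug is easy to miss here. The stars drift left by at most 0.005 px per call, so even the fastest one needs tens of thousands of frames to leave the canvas, and the array only grows slowly.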

function addStars() {

//new stars from the right edge of the canvas

var newStarsProbability = 0.0025;

//likelihood of a new star appearing on each call

if (random(0, 1) < newStarsProbability) {

var starX = width;

var starY = random(3*height/4);

stars.push(makeStars(starX, starY));

}

}
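`addStars` creates a star when `random(0, 1) < 0.0025`, so the wait until the next star follows a geometric distribution with mean 1/p calls. `draw()` calls `addStars` and `addClouds` twice per frame: once for the main scene and once inside the LCD block. The per-frame chance is therefore 1 - (1 - p)². The sketch below works through that arithmetic. It assumes p5's default target of 60 frames per second, and `perFrameProbability`, `expectedFramesUntilSpawn`, and `framesToSeconds` are hypothetical helpers. The result is about 200 frames (roughly 3.3 s) between new stars and about 100 frames (roughly 1.7 s) between new clouds.

```javascript
// Chance that at least one of `callsPerFrame` independent rolls of random(0, 1) < p succeeds.
function perFrameProbability(p, callsPerFrame = 1) {
  return 1 - Math.pow(1 - p, callsPerFrame);
}

// Mean number of frames until the first spawn (geometric distribution, mean 1 / q).
function expectedFramesUntilSpawn(p, callsPerFrame = 1) {
  return 1 / perFrameProbability(p, callsPerFrame);
}

// Convert frames to seconds, assuming a steady frame rate (p5's default target is 60).
function framesToSeconds(frames, fps = 60) {
  return frames / fps;
}

// addStars and addClouds each run twice per frame (main scene + LCD block).
const starWait = expectedFramesUntilSpawn(0.0025, 2); // about 200 frames
const cloudWait = expectedFramesUntilSpawn(0.005, 2); // about 100 frames
console.log(framesToSeconds(starWait).toFixed(1), framesToSeconds(cloudWait).toFixed(1));
```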

//clouds

function updateAndDisplayClouds() {

//update the clouds' position & draw them

for (var i = 0; i < clouds.length; i++) {

clouds[i].move();

clouds[i].draw();

}

}

function makeClouds(cloudX, cloudY) {

var cloud = {x: cloudX, //locationX of cloud

y: cloudY, //locationY of the cloud

breadth: random(200, 300), //width of the cloud

speedC: -random(.3, .5), //speed of the cloud

nFloors: round(random(2,6)),//multiplier that determines the height of the cloud

transparency: random(20, 60),//transparency of the cloud

move: moveClouds,

draw: drawClouds}

return cloud;

}
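The sketch builds each cloud as an object literal whose `move` and `draw` properties point at shared functions. When `clouds[i].move()` runs, `this` refers to that cloud. An ES2015 class is a roughly equivalent and more modern way to express the same thing. The block below is a hypothetical rewrite of only the cloud's state and movement. Drawing is omitted because it needs p5. `rand` stands in for p5's `random(min, max)`, and `makeCloudObject` and `moveCloud` are simplified copies of the sketch's factory style so the two forms can be compared.

```javascript
// Stand-in for p5's random(min, max): uniform value in [min, max).
function rand(min, max) {
  return min + Math.random() * (max - min);
}

// Factory style used by the sketch: a plain object plus a shared method reference.
function moveCloud() {
  this.x += this.speedC; // `this` is whichever cloud the method was called on
}

function makeCloudObject(cloudX, cloudY) {
  return {
    x: cloudX,
    y: cloudY,
    breadth: rand(200, 300),
    speedC: -rand(0.3, 0.5),
    nFloors: Math.round(rand(2, 6)),
    transparency: rand(20, 60),
    move: moveCloud,
  };
}

// Hypothetical class-based equivalent of the same state and movement.
class Cloud {
  constructor(x, y) {
    this.x = x;
    this.y = y;
    this.breadth = rand(200, 300);
    this.speedC = -rand(0.3, 0.5);
    this.nFloors = Math.round(rand(2, 6));
    this.transparency = rand(20, 60);
  }

  move() {
    this.x += this.speedC;
  }

  // Mirrors removeClouds: a cloud is gone once its right edge is at or left of x = 0.
  isOffScreen() {
    return this.x + this.breadth <= 0;
  }
}
```

Both forms share one copy of each method across all clouds. The class version additionally gives a single place to keep related checks, such as the off-screen test.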

function drawClouds() {

var multiplier = 5; //height in pixels of each cloud "floor" (see nFloors)

var cloudHeight = this.nFloors*multiplier;

ellipseMode(CORNER);

noStroke();

fill(255, this.transparency);

push();

translate(this.x, height/2-80);

ellipse(0, -cloudHeight, this.breadth, cloudHeight/2);

pop();

push();

translate(this.x, height/2-100);

ellipse(30, -cloudHeight, this.breadth, cloudHeight);

pop();

}

function moveClouds() {

//move the cloud by updating its x position

this.x += this.speedC;

}

function removeClouds() {

var keepClouds = []; //array of clouds to keep

for (var i = 0; i < clouds.length; i++) {

if (clouds[i].x + clouds[i].breadth > 0) {

keepClouds.push(clouds[i]);

}

}

clouds = keepClouds; //remember the surviving clouds

}

function addClouds() {

//new clouds from the right edge of the canvas

var newCloudsProbability = 0.005;

//likelihood of a new cloud appearing on each call

if (random(0, 1) < newCloudsProbability) {

var cloudX = width;

var cloudY = random(height/2);

clouds.push(makeClouds(cloudX, cloudY));

}

}

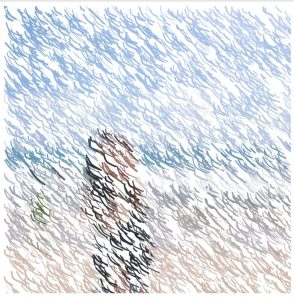

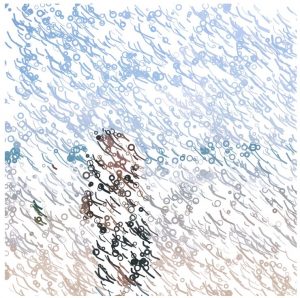

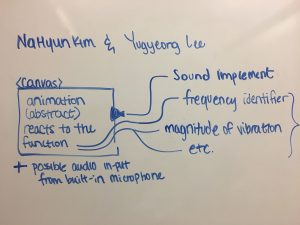

I first visualized the generative landscape through the lens of a camera. As if recording the moving landscape in the background, the LCD display shows the night sky with twinkling stars, along with clouds, mountains, and a flowing body of water. I originally drew the camera in code, but when I realized I could not make its LCD display transparent, I took a screenshot of the camera I had generated, used Photoshop to create a transparent layer, and loaded the result into my code as an image. I focused on creating depth through different layers, and I made sure that even subtle elements such as the moon and the stars have movement, through blinking and glowing effects.

![[OLD FALL 2017] 15-104 • Introduction to Computing for Creative Practice](../../wp-content/uploads/2020/08/stop-banner.png)