Interactive Music Media Installation

This project connects a Kinect to a website built with p5.js. Because it depends on the Kinect hardware and a local Node.js server, it cannot run on WordPress. Instead, we recorded a video of the project, which we hope is a good demonstration of the whole concept.

For this project, Nayeon and I worked together to create a new media installation in which people interact with music by hitting virtual buttons that generate sounds and visual effects. People can play along with the background music and have fun moving their bodies to make music.

I learned a lot, a lot, a lot from this final project, and I am so proud that it is finally done. I was responsible for the Kinect part, which meant making sure the connection between the Kinect and the website (that is, the JavaScript files) worked well, and also creating the user interface (the virtual buttons, particle effects, and sound effects) for the website. So the biggest challenge for me at the beginning was figuring out how to connect the Kinect to JavaScript. After a lot of research, I decided to use the Node-Kinect2 library to achieve what I needed.

(Information about the Node-Kinect2 library: https://github.com/wouterverweirder/kinect2)

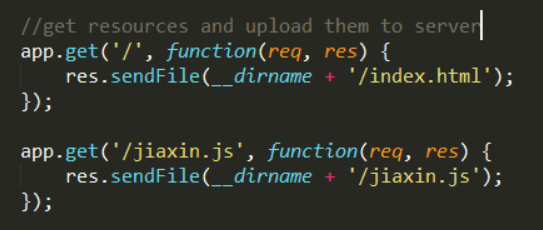

I followed the library's instructions and installed all the modules and packages I needed (it took me a long time to figure out how to properly install Node.js and all kinds of modules 😉). After that, I studied the sample files carefully and analyzed their logic to figure out how to access the Kinect skeleton data. I found that, to access the data with this library, I needed to set up a local server, point it to my local index page, and serve my resources and files through Node.js. Finally, I had to run the JS file with Node.js from the Command Prompt. Whew... to be honest, it was not easy to understand the whole process clearly, but once I understood the logic behind it, everything went much more smoothly.
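To make "accessing the skeleton data" more concrete, here is a minimal sketch of turning one body frame into canvas coordinates for both hands. It is not the project's actual code. It assumes the frame has the shape used in the Node-Kinect2 samples (a `bodies` array whose entries carry a `tracked` flag and a `joints` array with `depthX`/`depthY` normalized to 0..1); if your frames differ, adjust the field names. The helper `getHandPositions` and the sample frame are hypothetical, and the joint indices 7 (left hand) and 11 (right hand) follow the Kinect v2 SDK `JointType` enumeration.

```javascript
// Joint indices from the Kinect v2 SDK JointType enumeration.
const HAND_LEFT = 7;
const HAND_RIGHT = 11;

// Convert one body frame into canvas-space hand positions.
// Assumption: each joint has depthX/depthY normalized to the 0..1 range,
// as in the Node-Kinect2 samples. getHandPositions is a hypothetical name.
function getHandPositions(bodyFrame, canvasWidth, canvasHeight) {
  const hands = [];
  for (const body of bodyFrame.bodies) {
    if (!body.tracked) continue; // skip body slots with nobody in them
    for (const index of [HAND_LEFT, HAND_RIGHT]) {
      const joint = body.joints[index];
      hands.push({
        x: joint.depthX * canvasWidth,
        y: joint.depthY * canvasHeight,
      });
    }
  }
  return hands;
}

// Hypothetical frame with one tracked body (25 joints) and one empty slot.
const joints = [];
for (let i = 0; i < 25; i++) joints.push({ depthX: 0, depthY: 0 });
joints[HAND_LEFT] = { depthX: 0.25, depthY: 0.5 };
joints[HAND_RIGHT] = { depthX: 0.75, depthY: 0.5 };
const sampleFrame = { bodies: [{ tracked: true, joints }, { tracked: false }] };

console.log(getHandPositions(sampleFrame, 800, 600));
```

In a setup like the one described above, the Node.js server forwards each frame to the web page (the Node-Kinect2 examples relay frames over socket.io), and the p5.js sketch would call a function like this once per frame before checking the buttons.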

The next step was designing how the interface looks. After talking with Nayeon, we decided to create a project with a neon art style. I created a mood board for myself to figure out the feeling of the visual part.

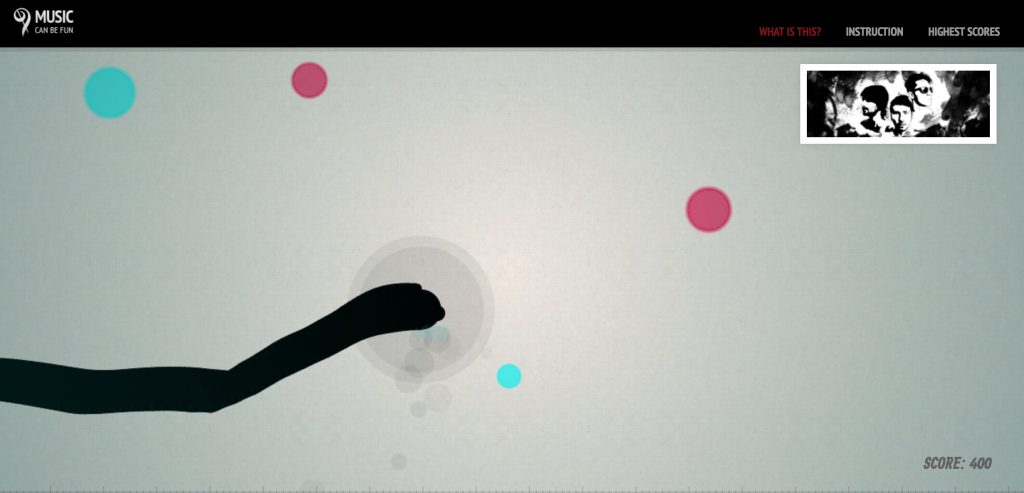

I also studied a very inspiring and amazing project, The V Motion Project, and decided to build a circular interface for people to play with.
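A circular interface mostly comes down to placing the buttons evenly around a circle. The sketch below shows one way to compute those positions; it is an illustration, not the layout code from jiaxin.js, and the helper name `circleLayout` and all the numbers (centre, radii) are hypothetical.

```javascript
// Place `count` circular buttons evenly around a circle centred at (cx, cy).
// Hypothetical helper: the project's real layout values are not given here.
function circleLayout(count, cx, cy, radius, buttonRadius) {
  const buttons = [];
  for (let i = 0; i < count; i++) {
    // Start at the top (-90 degrees) and step by 360/count degrees.
    // Canvas y grows downward, so increasing angles move clockwise on screen.
    const angle = -Math.PI / 2 + (i * 2 * Math.PI) / count;
    buttons.push({
      x: cx + radius * Math.cos(angle),
      y: cy + radius * Math.sin(angle),
      r: buttonRadius,
    });
  }
  return buttons;
}

// Six buttons on an 800x600 canvas, 200 px from the centre.
const buttons = circleLayout(6, 400, 300, 200, 40);
buttons.forEach((b, i) => console.log(i, b.x.toFixed(1), b.y.toFixed(1)));
```

Keeping the layout in one function makes it easy to change the number of buttons or the circle size without touching the hit-detection code.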

(For more information about The V Motion Project: https://vimeo.com/45417241)

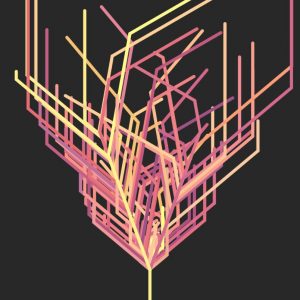

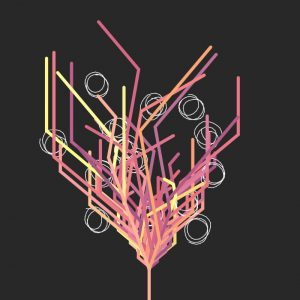

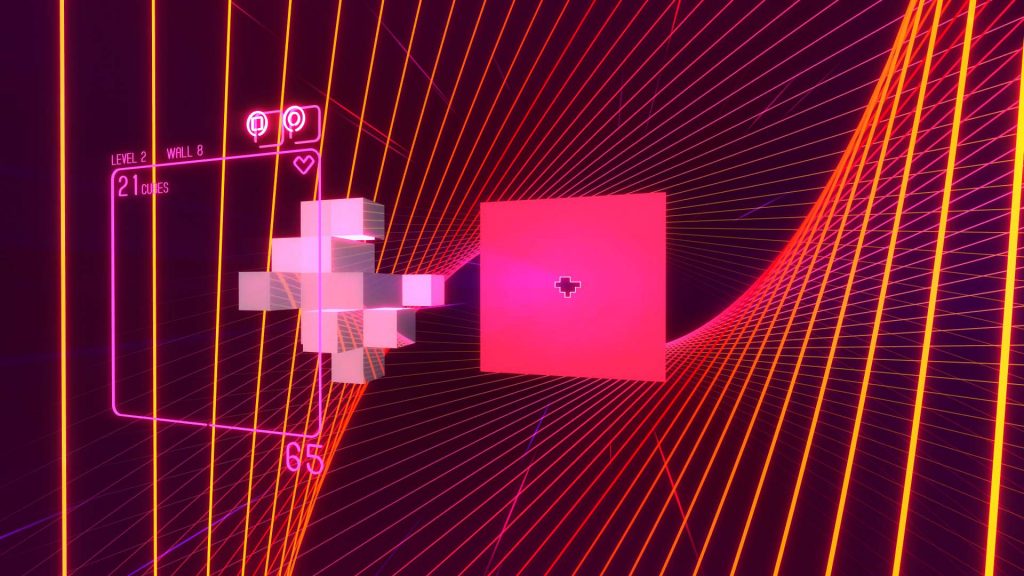

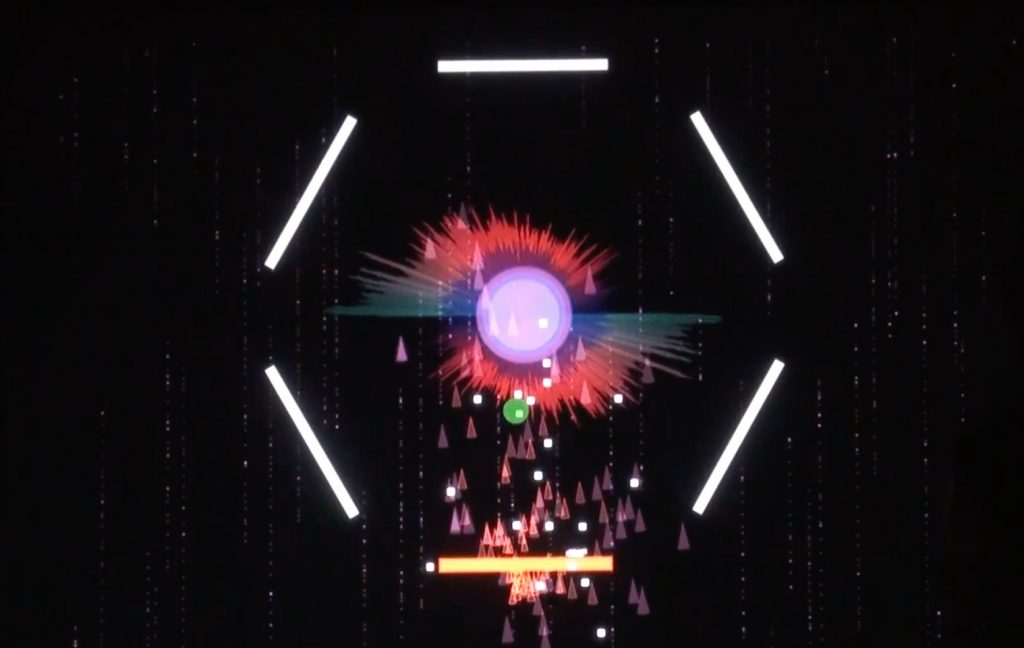

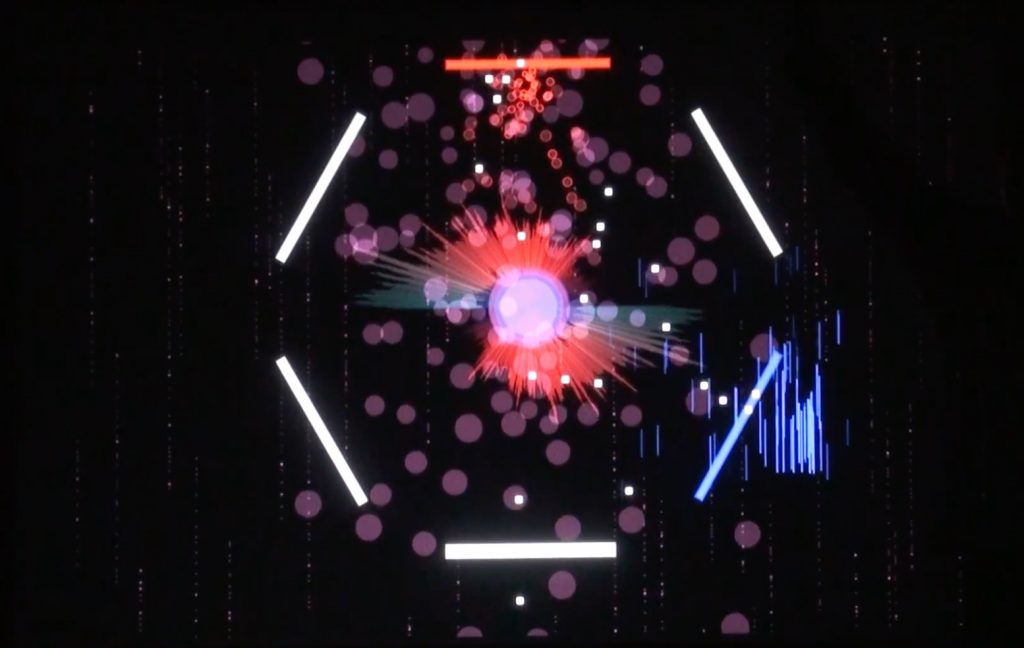

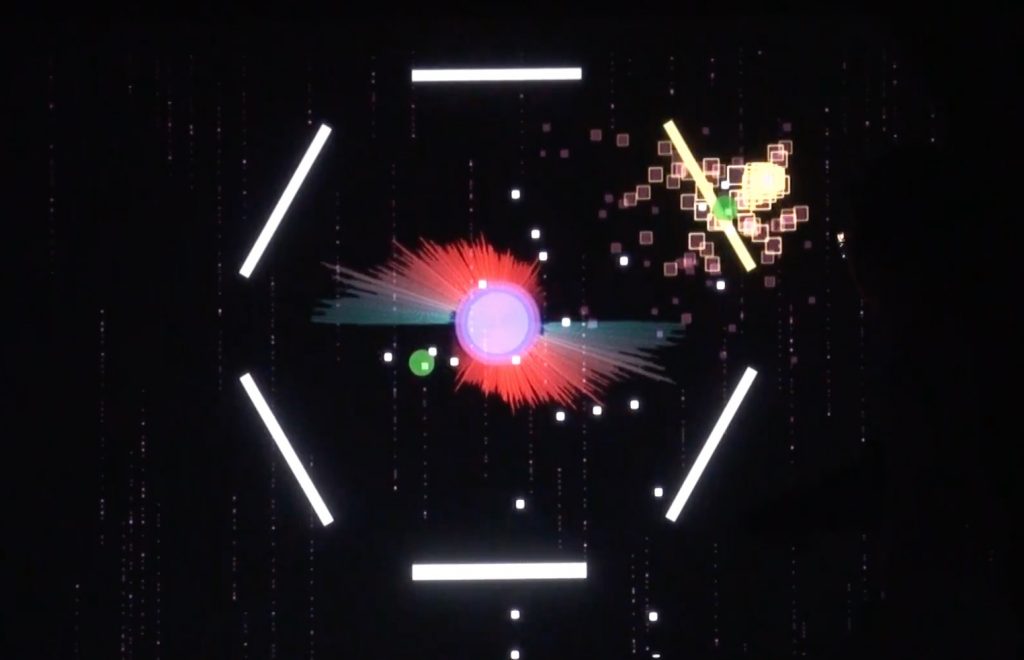

I created six virtual buttons on the canvas; when people hit any of them, a particle effect is generated along with a sound effect. By standing in front of the Kinect, people can move their hands to touch the buttons and create their own music and visual effects.
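The "hit a button, get particles and sound" behaviour can be sketched as below. This is a minimal illustration under stated assumptions, not the code in jiaxin.js: buttons are circles `{x, y, r}`, hand positions are canvas pixels `{x, y}`, and `HitDetector`, `spawnParticles` and `stepParticles` are hypothetical names. The detector only fires when a hand *enters* a button, so a hand resting on it does not retrigger the sound every frame.

```javascript
// Detects when a hand enters a circular button {x, y, r}.
class HitDetector {
  constructor(buttons) {
    this.buttons = buttons;
    // Whether each button was touched on the previous frame.
    this.wasTouched = buttons.map(() => false);
  }

  // Returns the indices of buttons that a hand has just entered this frame.
  update(hands) {
    const justHit = [];
    this.buttons.forEach((button, i) => {
      const touched = hands.some(
        (h) => Math.hypot(h.x - button.x, h.y - button.y) <= button.r
      );
      if (touched && !this.wasTouched[i]) justHit.push(i);
      this.wasTouched[i] = touched;
    });
    return justHit;
  }
}

// Spawn a ring of particles moving outward from a button's centre.
function spawnParticles(button, count) {
  const particles = [];
  for (let i = 0; i < count; i++) {
    const angle = (i * 2 * Math.PI) / count;
    particles.push({
      x: button.x,
      y: button.y,
      vx: Math.cos(angle) * 3,
      vy: Math.sin(angle) * 3,
      life: 60, // frames until the particle disappears
    });
  }
  return particles;
}

// Move every particle one frame and drop the ones that have faded out.
function stepParticles(particles) {
  for (const p of particles) {
    p.x += p.vx;
    p.y += p.vy;
    p.life -= 1;
  }
  return particles.filter((p) => p.life > 0);
}

// Usage: two hypothetical buttons and a hand moving onto the first one.
const demoButtons = [
  { x: 100, y: 100, r: 40 },
  { x: 300, y: 100, r: 40 },
];
const detector = new HitDetector(demoButtons);
let particles = [];
for (const hand of [{ x: 0, y: 0 }, { x: 110, y: 95 }, { x: 112, y: 96 }]) {
  for (const i of detector.update([hand])) {
    // In a p5.js sketch, this is also where the button's sound would play
    // (for example with a p5.SoundFile's play() method).
    particles = particles.concat(spawnParticles(demoButtons[i], 12));
  }
  particles = stepParticles(particles);
}
console.log(particles.length);
```

Separating detection from drawing keeps the logic testable without a Kinect: any list of hand positions, real or fake, can be fed into `update`.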

One more thing I learned from this project is how to access JS files outside of Node.js through Node.js. The trick is that you need to follow a very strict syntax to get the directory of the file and serve your resources from it.
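To illustrate what "getting the directory right" involves, here is a minimal sketch of a static file server using only Node's built-in `http`, `fs` and `path` modules. It is not the project's kinect.js. It resolves each request URL against a root folder with `path.resolve`, refuses URLs that try to escape that folder, and could serve the page on port 8000 as in the instructions below. The names `resolveStaticFile`, `contentTypeFor`, `startServer` and the `public` folder are hypothetical; projects built on Express would typically use `express.static` for the same job.

```javascript
const http = require("http");
const fs = require("fs");
const path = require("path");

// Map a request URL such as "/jiaxin.js" to a file inside rootDir.
// Returns null if the URL is malformed or tries to leave rootDir.
function resolveStaticFile(rootDir, requestUrl) {
  let urlPath;
  try {
    urlPath = decodeURIComponent(requestUrl.split("?")[0]); // drop query string
  } catch (err) {
    return null; // malformed %-escape
  }
  const relative = urlPath === "/" ? "index.html" : urlPath.slice(1);
  const root = path.resolve(rootDir);
  const resolved = path.resolve(root, relative);
  // Only allow paths that stay inside the root folder.
  if (resolved !== root && !resolved.startsWith(root + path.sep)) return null;
  return resolved;
}

const CONTENT_TYPES = {
  ".html": "text/html",
  ".js": "text/javascript",
  ".css": "text/css",
  ".png": "image/png",
  ".mp3": "audio/mpeg",
  ".wav": "audio/wav",
};

function contentTypeFor(filePath) {
  const ext = path.extname(filePath).toLowerCase();
  return CONTENT_TYPES[ext] || "application/octet-stream";
}

// Serve files from rootDir on the given port.
function startServer(rootDir, port) {
  return http
    .createServer((req, res) => {
      const filePath = resolveStaticFile(rootDir, req.url);
      if (filePath === null) {
        res.writeHead(403);
        res.end("Forbidden");
        return;
      }
      fs.readFile(filePath, (err, data) => {
        if (err) {
          res.writeHead(404);
          res.end("Not found");
          return;
        }
        res.writeHead(200, { "Content-Type": contentTypeFor(filePath) });
        res.end(data);
      });
    })
    .listen(port);
}

// Example (not started here): serve ./public on http://localhost:8000
// startServer(path.join(__dirname, "public"), 8000);
```

Building paths with `path.join`/`path.resolve` instead of string concatenation is what keeps this working on both Windows and other systems, since the separator differs.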

Anyway, it is so exciting that I have finished this project with Nayeon! We definitely went through some difficulties, and I guess that is why I feel so fulfilled now that everything is done! Here are some screenshots of the project! Hope you enjoy 🙂

Finally, here is the source code (a zip file) we created; please feel free to check it out. I was responsible for the Kinect and interface part (jiaxin.js).

Instructions for opening our project:

- Go to https://github.com/wouterverweirder/kinect2 and download the Kinect Node.js library.

- Follow the instructions there to install Node.js.

- Connect your Kinect to your PC and make sure it works 🙂

- Extract the project zip file, open the Command Prompt (on Windows), and go to the directory where you put our project folder (use “cd” followed by the directory).

- Then use Node.js to run the kinect.js file (simply type “node kinect”).

- Open a browser and go to the local server: http://localhost:8000

- Stand in front of your Kinect, and have fun with our project!

![[OLD FALL 2017] 15-104 • Introduction to Computing for Creative Practice](https://courses.ideate.cmu.edu/15-104/f2017/wp-content/uploads/2020/08/stop-banner.png)