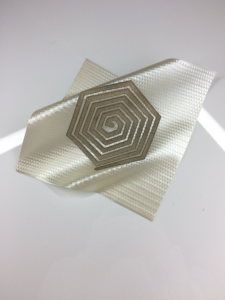

Soft Sound uses textiles as transducers. Flat copper and silver coils are woven into the cloth in decorative shapes, and an alternating current runs through them, turning the fabric into a soft speaker. The coils are connected to an amplifier, and a magnet placed close to them forces the coils to move back and forth, which produces sound waves. This inspires me because I love to sew and create soft sculpture, but I never imagined putting sound or technology into fabric. The project integrates the coils into the fabric effectively: they are designed into patterns rather than simply attached. However, I’d like to see the idea developed further, with songs and clothing made from this innovative technology. EJ tech, the duo of Esteban de la Torre and Judit Eszter Karpati, wanted to innovate for contemporary interior design.

![[OLD FALL 2017] 15-104 • Introduction to Computing for Creative Practice](https://courses.ideate.cmu.edu/15-104/f2017/wp-content/uploads/2020/08/stop-banner.png)

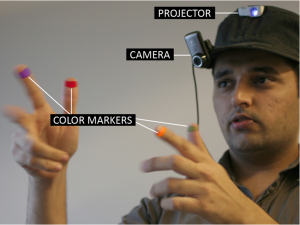

One interactive project that really stuck with me is the SixthSense project. I first came across it in a TEDx video. SixthSense is a prototype consisting of a projector, a mirror, and a camera. It lets the user project an interactive display onto various everyday surfaces and control it with simple hand gestures.
