katieche-10

```javascript
/*
katie chen
katieche@andrew.cmu.edu
project 10
section e
*/

var terrainSpeed = 0.0002;  // how fast the noise terrain scrolls (noise units per ms)
var terrainDetail = 0.002;  // noise step per pixel, foreground ridge
var backdetail = 0.003;     // noise step per pixel, background ridge

var clouds = [];
var cacti = [];
var camelfr = [];           // the eight camel animation frames
var cam = [];               // camel objects

function preload() {
  // one image per frame of the walking-camel animation
  var camelfile = [
    "https://i.imgur.com/bDUcYTm.png",
    "https://i.imgur.com/6dVVrob.png",
    "https://i.imgur.com/hbSKaEk.png",
    "https://i.imgur.com/7mLCzwN.png",
    "https://i.imgur.com/ajswkv9.png",
    "https://i.imgur.com/5PYiIL8.png",
    "https://i.imgur.com/izwJZyn.png",
    "https://i.imgur.com/bHlNbyH.png"
  ];
  for (var i = 0; i < camelfile.length; i++) {
    camelfr[i] = loadImage(camelfile[i]);
  }
}

function setup() {
  createCanvas(480, 480);
  // making initial collection of objects
  for (var i = 0; i < 10; i++) {
    var rx = random(width);
    clouds[i] = makeCloud(rx);
    cacti[i] = makeCactus(rx);
    cam[i] = makeCam(rx);
  }
  frameRate(10);
}
```
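setup() also calls frameRate(10). The clouds, cacti and camels change their x position by a fixed amount every frame (their speed fields), so the frame rate directly sets how fast they travel, while the terrain in ground() is driven by millis() and its scroll speed does not depend on the frame rate. A small plain-JavaScript calculation of what that means on screen; `pixelsPerSecond` and `secondsToCross` are hypothetical helpers, not part of the sketch:

```javascript
// Objects in this sketch move a fixed number of pixels per frame, so their
// speed in pixels per second is the per-frame speed times the frame rate.
function pixelsPerSecond(speedPerFrame, fps) {
  return speedPerFrame * fps;
}

// Seconds for an object to travel a given distance at that speed.
function secondsToCross(distance, speedPerFrame, fps) {
  return distance / Math.abs(pixelsPerSecond(speedPerFrame, fps));
}

const fps = 10; // frameRate(10) in setup()
console.log(pixelsPerSecond(-1.0, fps));     // -10 (clouds and camels)
console.log(pixelsPerSecond(-2.5, fps));     // -25 (cacti)
console.log(secondsToCross(480, -1.0, fps)); // 48
console.log(secondsToCross(480, -2.5, fps)); // 19.2
```

Raising the frame rate would make the objects faster but leave the terrain speed unchanged, so the two would drift out of step.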

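```javascript
function draw() {
  background(185, 174, 176);

  // horizon gradient: each band runs to the bottom of the canvas,
  // so the lower, warmer bands cover the lower part of the ones above
  push();
  noStroke();
  fill(188, 177, 178);
  rect(0, 140, width, height - 140);
  fill(195, 180, 176);
  rect(0, 170, width, height - 170);
  fill(200, 185, 176);
  rect(0, 230, width, height - 230);
  fill(207, 187, 172);
  rect(0, 260, width, height - 260);
  pop();

  // later calls paint over earlier ones: terrain, then cacti, camel, clouds
  ground();

  updateAndDisplayCacti();
  cactusAdd();

  updateAndDisplayCam();
  camAdd();
  camDisplay();

  updateAndDisplayClouds();
  cloudAdd();
}
```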
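The four rect() calls at the top of draw() each start lower on the canvas and run to the bottom edge, so every band paints over the lower part of the band before it; what stays visible is a strip from each band's top edge to the next band's top edge. That is how the stepped horizon gradient is built. A plain-JavaScript sketch that lists the rectangles and their visible strips; `horizonBands` and `visibleStrips` are hypothetical helper names, with the y values and colors copied from draw():

```javascript
// Lists the background bands that draw() paints, top to bottom.
function horizonBands(canvasHeight) {
  const starts = [140, 170, 230, 260]; // y values used in draw()
  const colors = [
    [188, 177, 178],
    [195, 180, 176],
    [200, 185, 176],
    [207, 187, 172],
  ];
  return starts.map((y, i) => ({
    y,
    h: canvasHeight - y, // same as height - y in draw()
    rgb: colors[i],
  }));
}

// Part of each band that is not painted over: from its own top edge to the
// top edge of the next band, or to the canvas bottom for the last band.
function visibleStrips(bands, canvasHeight) {
  return bands.map((b, i) => {
    const bottom = i + 1 < bands.length ? bands[i + 1].y : canvasHeight;
    return { rgb: b.rgb, from: b.y, to: bottom };
  });
}

const bands = horizonBands(480);
console.log(visibleStrips(bands, 480));
```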

```javascript
function ground() {
  // background ridge: sampled with the larger backdetail step,
  // so it scrolls more slowly than the foreground ridge
  push();
  noStroke();
  fill(200, 164, 140);
  beginShape();
  vertex(0, height);                 // bottom-left corner, added once
  for (var x = 0; x < width; x++) {
    var t = (x * backdetail) + (millis() * terrainSpeed);
    var y = map(noise(t), 0, 1, 200, height - 50);
    vertex(x, y);                    // ridge line
  }
  vertex(width, height);             // bottom-right corner, added once
  endShape(CLOSE);
  pop();

  // foreground ridge
  push();
  noStroke();
  fill(181, 121, 78);
  beginShape();
  vertex(0, height);
  for (var x = 0; x < width; x++) {
    var t = (x * terrainDetail) + (millis() * terrainSpeed);
    var y = map(noise(t), 0, 1, 270, height);
    vertex(x, y);
  }
  vertex(width, height);
  endShape(CLOSE);
  pop();
}
```
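Each terrain layer turns a Perlin noise value in [0, 1] into a y coordinate with map(), and the profile scrolls because millis() * terrainSpeed is added to every sample. Moving forward 1000 ms adds 1000 × terrainSpeed to the noise input, which is the same as shifting the profile left by (1000 × terrainSpeed) / detail pixels, so the apparent scroll speed depends on each layer's detail value. The plain-JavaScript sketch below works that out; `mapRange` reimplements the linear remap p5's map() performs when its optional clamp argument is left off, and `scrollSpeed` is a hypothetical helper:

```javascript
// Linear remap, equivalent to p5's map(value, start1, stop1, start2, stop2)
// without the optional clamping argument.
function mapRange(value, start1, stop1, start2, stop2) {
  return start2 + (stop2 - start2) * ((value - start1) / (stop1 - start1));
}

// Apparent scroll speed (pixels per second) of a terrain whose sample for
// column x at time ms is noise(x * detail + ms * speed).
function scrollSpeed(speed, detail) {
  return (1000 * speed) / detail;
}

const terrainSpeed = 0.0002;
const terrainDetail = 0.002; // foreground
const backdetail = 0.003;    // background

console.log(scrollSpeed(terrainSpeed, terrainDetail)); // about 100 px/s
console.log(scrollSpeed(terrainSpeed, backdetail));    // about 66.7 px/s

// A mid-range noise value lands halfway between the foreground's y limits.
console.log(mapRange(0.5, 0, 1, 270, 480)); // 375
```

Because the background ridge uses the larger step, it drifts at roughly two thirds of the foreground's speed, which reads as a simple parallax effect.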

```javascript
function updateAndDisplayClouds() {
  // Update the clouds' positions, and display them.
  for (var i = 0; i < clouds.length; i++) {
    clouds[i].move();
    clouds[i].display();
  }
}

function cloudAdd() {
  // With a small probability (2% per frame), add a new cloud at the right edge.
  var newcloudLikelihood = 0.02;
  if (random(0, 1) < newcloudLikelihood) {
    clouds.push(makeCloud(width));
  }
}

// moving the clouds
function cloudMove() {
  this.x += this.speed;
}

// drawing the clouds
function cloudDisplay() {
  push();
  // translate() already moves the origin to this.x, so the shapes below are
  // positioned relative to the cloud instead of adding this.x a second time
  translate(this.x, 50);
  noStroke();
  // lower clouds (larger z) get a slightly darker shade
  if (this.z < 30) {
    fill(225, 210, 192);
  } else if (this.z < 50) {
    fill(222, 202, 182);
  } else {
    fill(218, 194, 174);
  }
  rect(23, 50 + this.z, 100 + this.l, 25 + this.h, 200, 200, 200, 200);
  rect(60, 25 + this.z, 50, 50, 200, 200, 200, 200);
  rect(50, 35 + this.z, 30, 30, 200, 200, 200, 200);
  pop();
}

// making the clouds
function makeCloud(cx) {
  var cloud = {
    x: cx,
    z: random(0, 150),  // vertical offset; also picks the shade
    l: random(0, 20),   // extra length of the cloud base
    h: random(0, 20),   // extra height of the cloud base
    speed: -1.0,
    move: cloudMove,
    display: cloudDisplay
  };
  return cloud;
}
```
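cloudAdd() rolls random(0, 1) once per frame and adds a cloud when the roll falls under 0.02. At frameRate(10) that averages 10 × 0.02 = 0.2 new clouds per second, or one every five seconds, and since nothing removes clouds that have drifted off the left edge, it is also how fast the clouds array grows. The sketch below replays the rule outside the browser; it swaps in mulberry32, a small seeded generator, for p5's random() so the run is repeatable, and `countSpawns` is a hypothetical name:

```javascript
// mulberry32: a tiny seeded PRNG returning floats in [0, 1).
function mulberry32(seed) {
  let a = seed >>> 0;
  return function () {
    a = (a + 0x6d2b79f5) | 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Replays "add one object with a small probability each frame" and returns
// how many objects would have been added.
function countSpawns(likelihood, frames, rand) {
  let spawned = 0;
  for (let f = 0; f < frames; f++) {
    if (rand() < likelihood) spawned++;
  }
  return spawned;
}

const fps = 10;
const seconds = 600; // ten minutes of animation
const cloudCount = countSpawns(0.02, fps * seconds, mulberry32(104));
console.log(cloudCount, "clouds added; expected about", 0.02 * fps * seconds);
```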

```javascript
// CACTUS

function updateAndDisplayCacti() {
  // Update the cacti positions, and display them.
  for (var i = 0; i < cacti.length; i++) {
    cacti[i].tmove();
    cacti[i].tdisplay();
  }
}

function cactusAdd() {
  // With a small probability (4% per frame), add a new cactus at the right edge.
  var newcactusLikelihood = 0.04;
  if (random(0, 1) < newcactusLikelihood) {
    cacti.push(makeCactus(width));
  }
}

// moving the cactus
function cactusMove() {
  this.mx += this.mspeed;
}

// draw the cactus
function cactusDisplay() {
  push();
  noStroke();
  // translate() already moves the origin to this.mx, so the shapes below are
  // positioned relative to the cactus instead of adding this.mx a second time
  translate(this.mx, 200);
  fill(131 - this.cr, 170, 124 - this.cr);
  rect(50, 50 + this.cacter, 25 + this.wid, 90 + this.hei, 200, 200, 0, 0); // trunk
  rect(50 + this.wid, 80 + this.cacter, 40, 10, 200, 200, 200, 200);        // right arm, horizontal
  rect(80 + this.wid, 60 + this.cacter, 10, 30, 200, 200, 200, 200);        // right arm, vertical
  rect(30, 90 + this.cacter, 40, 10, 200, 200, 200, 200);                   // left arm, horizontal
  rect(30, 70 + this.cacter, 10, 30, 200, 200, 200, 200);                   // left arm, vertical
  pop();
}

// making the cacti
function makeCactus(tx) {
  var cactus = {
    mx: tx,
    mspeed: -2.5,
    hei: random(-10, 20),    // tallness of main cactus body
    wid: random(0, 5),       // fatness of main cactus body
    cr: random(0, 50),       // color
    cacter: random(70, 180), // y value
    tmove: cactusMove,
    tdisplay: cactusDisplay
  };
  return cactus;
}
```
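makeCloud(), makeCactus() and makeCam() share one pattern: an object literal holding per-object state plus references to shared functions, which read that state through `this` when called as a method, e.g. cacti[i].tmove(). The pattern only works if each factory wires in functions that use its own property names. The stripped-down sketch below keeps only the movement fields and shows the working case next to a camel-style object (position in camx) that is handed cactusMove (which updates mx); `makeMiswiredCam` is a hypothetical name used only for this illustration:

```javascript
// Shared move functions; `this` is the object they are called on.
function cactusMove() {
  this.mx += this.mspeed;
}

function camMove() {
  this.camx += this.cspeed;
}

function makeCactus(tx) {
  return { mx: tx, mspeed: -2.5, tmove: cactusMove };
}

// Correctly wired camel: its move function uses camx and cspeed.
function makeCam(ax) {
  return { camx: ax, cspeed: -1.0, cmove: camMove };
}

// Hypothetical mis-wired camel: cactusMove looks for mx and mspeed, which
// this object lacks, so it creates mx = undefined + undefined = NaN.
function makeMiswiredCam(ax) {
  return { camx: ax, cspeed: -1.0, cmove: cactusMove };
}

const c = makeCactus(480);
c.tmove();
c.tmove();
console.log(c.mx); // 475

const good = makeCam(480);
good.cmove();
console.log(good.camx); // 479

const bad = makeMiswiredCam(480);
bad.cmove();
console.log(bad.camx, bad.mx); // 480 NaN
```

JavaScript raises no error in the mis-wired case; the object simply never moves and gains a NaN property, and drawing with NaN coordinates typically produces nothing visible, which makes this kind of bug easy to miss.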

```javascript
// CAMEL

function updateAndDisplayCam() {
  // Update the camel positions, and display them.
  for (var i = 0; i < cam.length; i++) {
    cam[i].cmove();
    cam[i].cdisplay();
  }
}

function camAdd() {
  // With a small probability (2% per frame), add a new camel at the right edge.
  var newcamLikelihood = 0.02;
  if (random(0, 1) < newcamLikelihood) {
    cam.push(makeCam(width));
  }
}

// moving the camel
function camMove() {
  this.camx += this.cspeed;
}

// drawing the camel: it walks in place at a fixed spot while the landscape
// scrolls past, cycling through the eight frames loaded in preload()
function camDisplay() {
  push();
  noStroke();
  scale(0.3);
  var num = frameCount % 8;
  image(camelfr[num], 600, 900); // lands at (180, 270) on the canvas after scale(0.3)
  pop();
}

// making the camel
function makeCam(ax) {
  var camel = {
    camx: ax,
    cspeed: -1.0,
    cmove: camMove,       // camel functions, which use camx and cspeed
    cdisplay: camDisplay
  };
  return camel;
}
```
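camDisplay() picks the sprite with frameCount % 8, so the eight images loaded in preload() repeat in order (0, 1, …, 7, 0, 1, …) and the camel appears to walk. At frameRate(10), one full stride takes 8 / 10 = 0.8 seconds. A plain-JavaScript sketch of that indexing; `spriteIndex` and `cycleSeconds` are hypothetical helper names:

```javascript
// Index of the animation frame to show for a given frame counter.
function spriteIndex(frameCount, frameTotal) {
  return frameCount % frameTotal;
}

// Seconds needed to play every sprite once at a given frame rate.
function cycleSeconds(frameTotal, fps) {
  return frameTotal / fps;
}

const shown = [];
for (let fc = 0; fc < 10; fc++) {
  shown.push(spriteIndex(fc, 8));
}
console.log(shown.join(" "));     // 0 1 2 3 4 5 6 7 0 1
console.log(cycleSeconds(8, 10)); // 0.8
```

Using the modulo of a counter that is already incremented every frame avoids keeping a separate animation variable; the trade-off is that the walk speed is tied to the sketch's frame rate.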

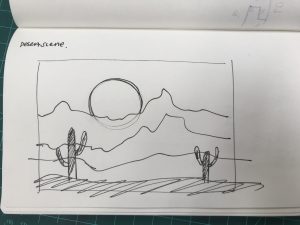

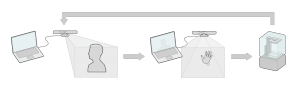

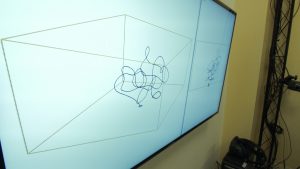

I had a lot of fun working on this project. I wanted to create a desert landscape, so I picked colors from an image of the Grand Canyon. I gave the background a stepped gradient to create the feeling of a never-ending stretch of land running out to the horizon. The cacti were a fun addition; I drew them with p5.js shapes before realizing that I could have made them in Illustrator and uploaded them as image files. Once I realized that, I decided to add the moving camel. The original GIF is from here, but I took each frame and edited it in Illustrator so the frames would look more uniform in my code.

![[OLD FALL 2017] 15-104 • Introduction to Computing for Creative Practice](https://courses.ideate.cmu.edu/15-104/f2017/wp-content/uploads/2020/08/stop-banner.png)