mixing photogrammetry with style transfer pic.twitter.com/wg6X9UOaCP

— lulu xXX (@luluixixix) November 4, 2019

This project uses style transfer algorithms and optical flow to texture an environment in the style of Noj Barke’s dot paintings. The environment itself was created with photogrammetry. The project interested me because it showed how machine learning can be used to create an immersive 3D environment, not just 2D images. I did a little photogrammetry in a 3D printing class last semester and really enjoyed it, but this project uses it in a way I hadn’t considered before. The piece is presented as a video, but I think it would be interesting to explore an environment created this way in VR.

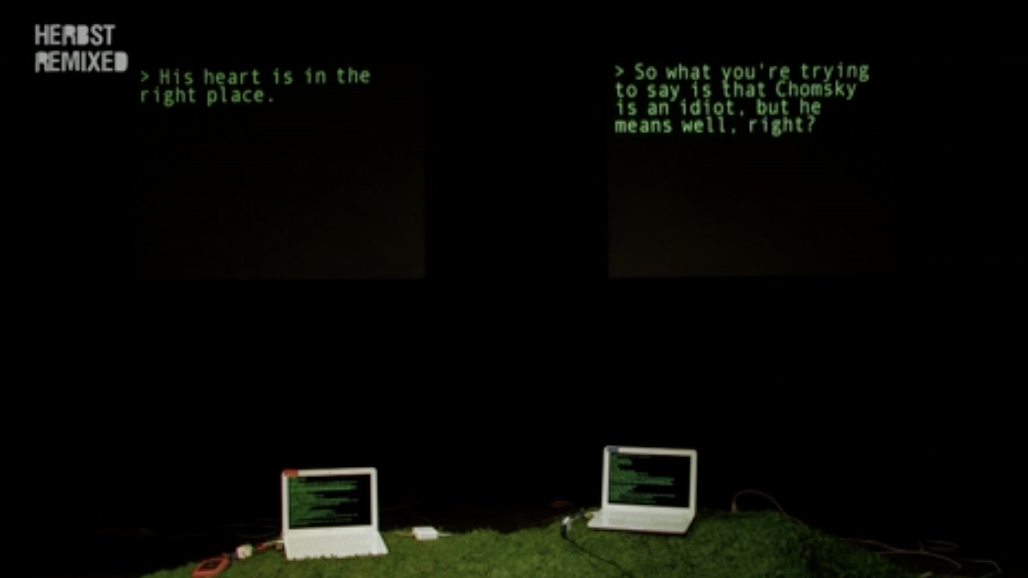

Hello Hi There
