Fluid Leaves – Reinoud van Laar

Fluid Leaves is a project by Reinoud van Laar for the tea boutique ‘Tee & Cupp’ in Xian. It generates prints of non-overlapping leaves for paper cups, arranged in a fluid pattern that resembles tea leaves floating in water.

The elegance of each print is what initially drew me to the project, especially the delicate shapes and simple color palette. I also think that generating patterns which share a consistent look yet are each perceptibly different is a great means of branding for the tea shop: the shop can maintain a uniform look while ensuring each customer has a drink that is special in its own way.

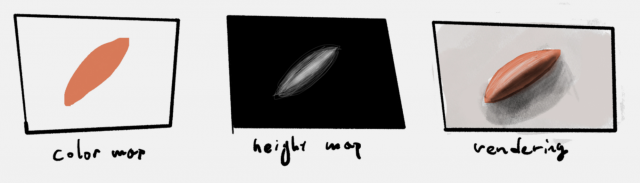

This project uses Processing libraries such as Geomerative, ControlP5, Toxiclibs, and Mesh, and applies techniques such as Perlin noise, displacement maps, and fluid dynamics to create the water-like movements. Each leaf is made in Processing by randomly varying characteristics such as the traits of its veins and stem, which produces unique leaf shapes. Additionally, the liquid feel of the pattern is created by fluid dynamics mapped to vector paths.
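
To make the idea of randomly varied leaf traits more concrete, here is a minimal sketch of how it could work. It is written in plain Python rather than Processing, and it is not van Laar's actual code: the `LeafParams` fields, the value ranges, and the `flow` function are all assumptions I made up for illustration. In particular, `flow` is a simple trigonometric stand-in for the Perlin noise and fluid simulation the project uses.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class LeafParams:
    # Hypothetical traits; the real project's parameters are not published here.
    length: float       # base-to-tip length
    width: float        # maximum half-width of the blade
    stem_length: float  # length of the stem below the base
    vein_count: int     # number of vein pairs along the midrib
    vein_angle: float   # angle between veins and midrib, in radians


def random_leaf(rng: random.Random) -> LeafParams:
    """Draw one leaf's traits from hand-picked (assumed) ranges."""
    return LeafParams(
        length=rng.uniform(40.0, 80.0),
        width=rng.uniform(8.0, 20.0),
        stem_length=rng.uniform(5.0, 15.0),
        vein_count=rng.randint(3, 7),
        vein_angle=math.radians(rng.uniform(30.0, 60.0)),
    )


def leaf_outline(p: LeafParams, samples: int = 16):
    """Closed outline: up the right edge to the tip, back down the left edge."""
    right = []
    for i in range(samples + 1):
        t = i / samples
        # sin(pi * t) is 0 at the base and tip and 1 at mid-leaf.
        right.append((p.width * math.sin(math.pi * t), p.length * t))
    # Mirror the interior points (base and tip are shared) for the left edge.
    left = [(-x, y) for x, y in reversed(right[1:-1])]
    return right + left


def flow(x: float, y: float, scale: float = 0.02):
    """Stand-in velocity field: smooth and wavy, but NOT a fluid solver."""
    return (math.sin(y * scale), math.cos(x * scale))


def displace(points, strength: float = 5.0):
    """Push each outline point along the flow field to suggest drifting."""
    moved = []
    for x, y in points:
        u, v = flow(x, y)
        moved.append((x + strength * u, y + strength * v))
    return moved


if __name__ == "__main__":
    rng = random.Random(7)  # a fixed seed makes the leaves reproducible
    for _ in range(3):
        leaf = random_leaf(rng)
        outline = displace(leaf_outline(leaf))
        print(leaf.vein_count, len(outline))
```

Because every leaf is drawn from the same ranges, the leaves look like they belong together, while the random draws keep any two from being identical. That is the same balance of uniformity and uniqueness that makes the prints work as branding.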