Dog Lullaby

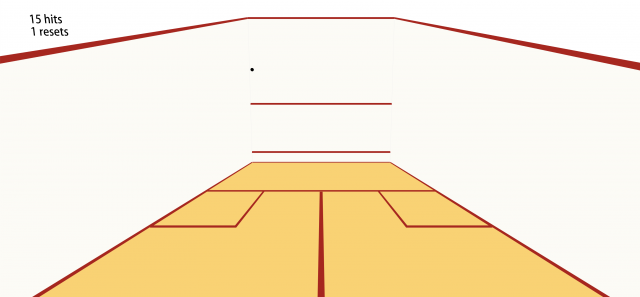

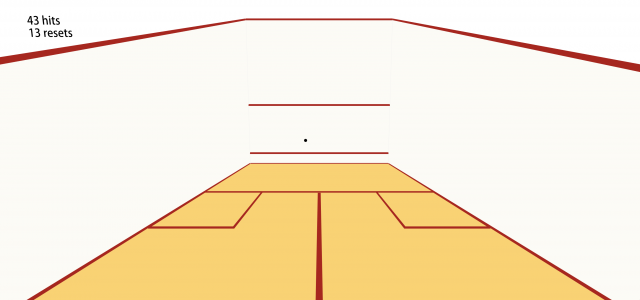

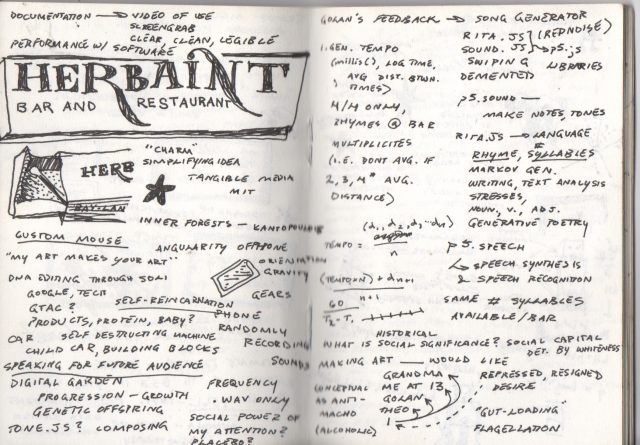

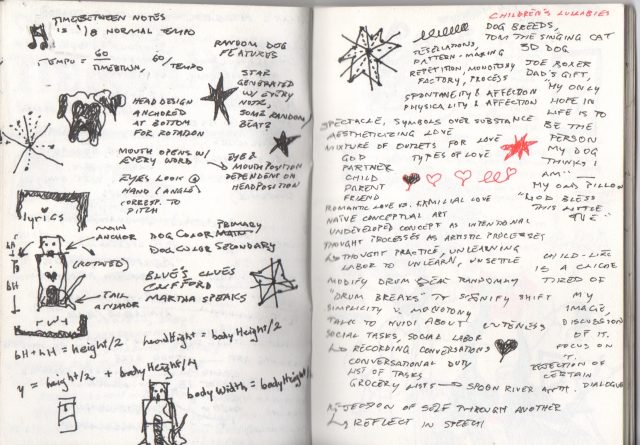

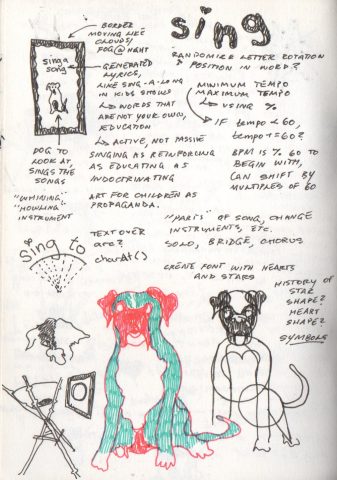

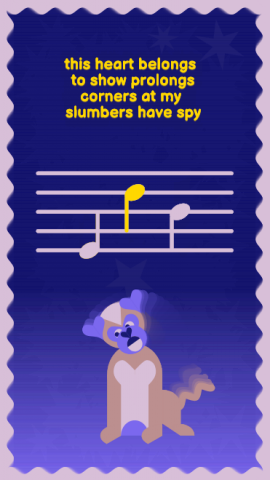

I have created a lullaby generator for children to feel loved and lulled as they fall quickly asleep. It features an animated dog, generated lullaby lyrics with rhymes, and a generated melody that children can alter using the special Soli sensor.

Above is a short demo of the app in use. A full run normally lasts for a random number of lyric lines, between 35 and 45, but only a few lines are shown here to keep the video short.
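For the curious, picking the length of a run could look something like the sketch below. This is not the app's actual code: the function name `pickLyricCount` and the injectable `rng` parameter are made up for illustration, and I am assuming both 35 and 45 are included in the range.

```javascript
// Hypothetical helper: choose how many lyric lines a run should have.
// Assumes the range is inclusive on both ends (35 and 45 are both possible).
const MIN_LYRICS = 35;
const MAX_LYRICS = 45;

function pickLyricCount(rng = Math.random) {
  // rng() returns a float in [0, 1); scale it to an integer in [35, 45].
  const span = MAX_LYRICS - MIN_LYRICS + 1;
  return MIN_LYRICS + Math.floor(rng() * span);
}
```

Passing in a custom `rng` is only there so the function can be checked with fixed values; in a sketch you would just call `pickLyricCount()`.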

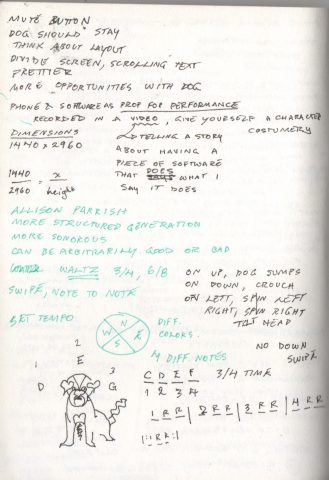

Swiping left raises the pitch of the left note, swiping up raises the center note, and swiping right raises the right note. A tap mutes the song, and another tap un-mutes it. If you feel inclined to test the app on desktop, the swipes map to the arrow keys (a left key press is equivalent to a left swipe, and so on).
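The gesture mapping can be sketched roughly as follows. This is a simplified illustration rather than the app's real code: the names `initialState` and `handleGesture`, the MIDI note numbers, and the one-semitone step are all assumptions, and the wiring to the actual Soli and keyboard events is left out.

```javascript
// Hypothetical state: three notes (as MIDI numbers) and a mute flag.
const initialState = { notes: [60, 64, 67], muted: false };

// Swipe directions and desktop arrow keys both map to a note position:
// 0 = left note, 1 = center note, 2 = right note.
const GESTURE_TO_INDEX = new Map([
  ["left", 0], ["up", 1], ["right", 2],
  ["ArrowLeft", 0], ["ArrowUp", 1], ["ArrowRight", 2],
]);

// Returns a new state; the input state is not modified.
function handleGesture(state, gesture) {
  if (gesture === "tap") {
    // A tap toggles mute: the first tap mutes, the next one un-mutes.
    return { ...state, muted: !state.muted };
  }
  if (!GESTURE_TO_INDEX.has(gesture)) {
    return state; // Ignore anything we do not recognize.
  }
  const notes = state.notes.slice();
  notes[GESTURE_TO_INDEX.get(gesture)] += 1; // Assumed step: one semitone up.
  return { ...state, notes };
}
```

Because arrow keys and swipes share one lookup table, the desktop version behaves the same as the phone version without any extra branches.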

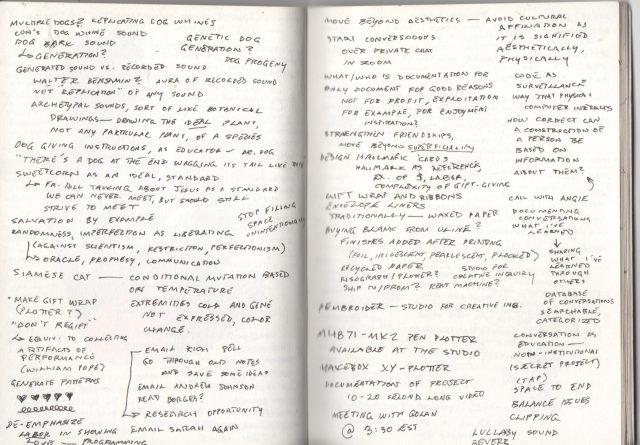

The dog and border are animated using p5.js, the lyrics are generated using RiTa.js, and the song itself is generated using Tone.js. It was a pleasure becoming more familiar with the latter two. RiTa.js is an excellent tool for generative poetry, and I’ve only just begun to experiment with it. I had originally been using p5.Sound to generate the song, but found the quality terrible and switched to Tone.js. My life instantly became easier, and I look forward to experimenting more with it as well.

The song is composed of one enveloped oscillator that creates a bell-like melody, one modulated oscillator that plays a random harmony based on the melody, and a synthesizer that plays a random bass sequence, also based on the melody. It runs in 3/4 time at 90 bpm, which is as close to a lullaby as I could get.
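To make the timing and the "based on the melody" idea concrete, here is a small sketch in plain JavaScript. It does not use Tone.js and is not the app's real code: the interval choices, the octave drops for the bass, and the names `harmonize` and `bassLine` are assumptions for illustration. Notes are MIDI numbers.

```javascript
// Timing for 3/4 at 90 bpm.
const BPM = 90;
const BEATS_PER_MEASURE = 3;
const secondsPerBeat = 60 / BPM;                          // about 0.667 s
const secondsPerMeasure = (60 * BEATS_PER_MEASURE) / BPM; // 2 s

// Assumed consonant intervals (in semitones) above each melody note.
const HARMONY_INTERVALS = [3, 4, 7];
// Assumed bass offsets: one or two octaves below the melody note.
const BASS_OFFSETS = [-12, -24];

// Pick a random element; rng is injectable so results can be checked.
function pick(options, rng) {
  return options[Math.floor(rng() * options.length)];
}

// Random harmony: each melody note gets a note a random interval above it.
function harmonize(melody, rng = Math.random) {
  return melody.map((note) => note + pick(HARMONY_INTERVALS, rng));
}

// Random bass: each melody note is dropped by one or two octaves.
function bassLine(melody, rng = Math.random) {
  return melody.map((note) => note + pick(BASS_OFFSETS, rng));
}
```

At this tempo each measure lasts two seconds, so a run of 40 one-measure lines would take about 80 seconds.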

In the future I hope to animate the tail and eyes of the dog more specifically. I also hope to refine the sounds—adding more dimension and nuance to something that should sound simple and lovely. A lovely thing I feel obligated to note: I experimented with this app around my dog (his name is Scout) and he immediately ran toward it. I suppose my “whine” instrument was more accurate than I had expected ;~0. I have yet to test the app with cats and children, but I have high hopes!

Good night,

sleep tight!

Don’t let the bed bugs bite!

xoxoxo

~sweetcorn

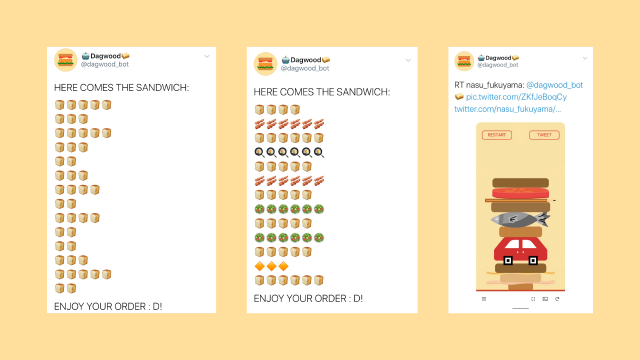

The main thing I learned about my process in making this is: It seems I am still a toddler without developed object permanence, because when I don’t see the console logs, I simply seem to completely forget they exist. That was my poor attempt at a joke, but I did realize I have bad debugging habits, and that cost me a lot of time while making this. Soli Sandbox also has some weird quirks in how it deals with data (images) downloaded from the app, so you can’t use `save()` or `saveCanvas()` to download an image (it seems to all go into the app data folder, and I didn’t want to mess with folders I don’t have permission to view). To work around this I created a Twitter bot to post some images, though dealing with the Imgur API has been painful.