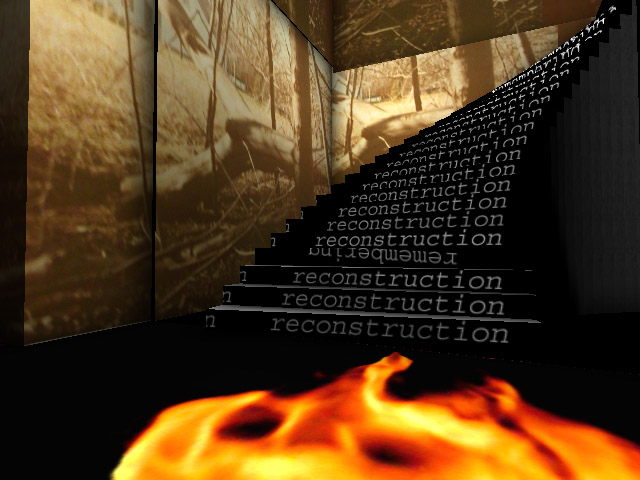

Mirages & miracles is an augmented reality exhibition created by Adrien M & Claire B, first displayed in 2017. It is meant as “a tribute to us humans as we cling to seemingly lifeless, motionless, inorganic things”.

Mirages & miracles – trailer

What fascinated me most the first time I saw the project was how seamlessly the AR technology was integrated with the physical display. AR has been used on many occasions, but too often it feels detached from the physical world, and often somewhat unnecessary. This is the one project I have seen and remembered where the technology was one with the art, and, as we mentioned in the first class, it delivers an emotional impact instead of merely leaving me to wonder how they did it. There were also VR and projection parts of the display, but the AR part was the most memorable to me.

To my knowledge, this project was made by a team of around 30 people (throughout the whole process, from concept to final setup). I could not find information on what it was created with.